AI for DeFi Data Analysis: A Practical On-Chain Workflow

AI for DeFi Data Analysis: A Practical On-Chain Workflow is about turning transparent-but-messy blockchain activity into repeatable research: clean datasets, defensible features, testable hypotheses, and monitored models. If you’ve ever looked at TVL dashboards, yield pages, and token charts and thought “this feels hand-wavy,” this workflow is your antidote. And if you like structured, staged analysis (the way SimianX AI frames multi-step research loops), you can bring the same discipline to on-chain work so results are explainable, comparable across protocols, and easy to iterate.

Why on-chain data analysis is harder (and better) than it looks

On-chain data gives you ground truth for what happened: transfers, swaps, borrows, liquidations, staking, governance votes, and fee flows. But “ground truth” doesn’t mean “easy truth.” DeFi analysts run into problems like:

The upside is huge: when you build an AI-ready pipeline, you can answer questions with evidence, not vibes—then keep re-running the same workflow as conditions change.

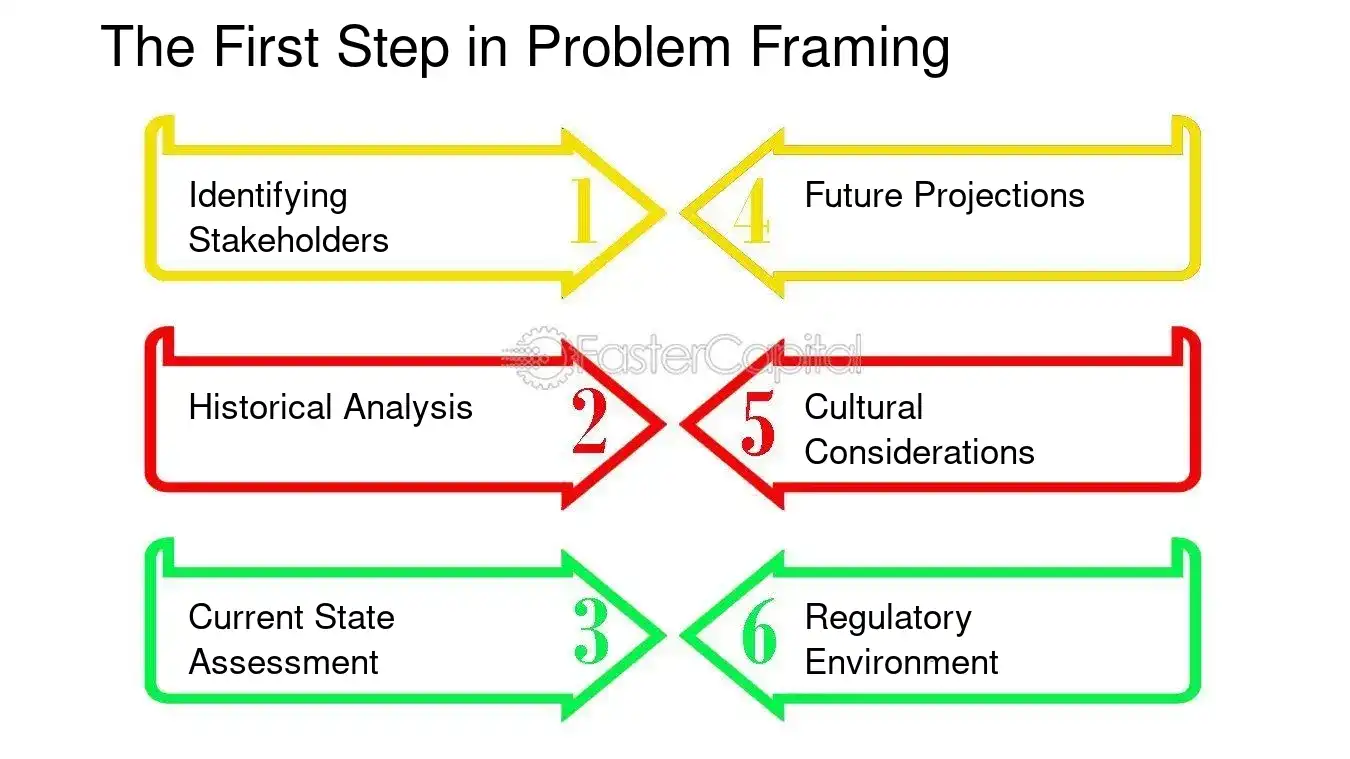

Step 0: Start with a decision, not a dataset

The fastest way to waste time in DeFi is to “download everything” and hope patterns emerge. Instead, define:

1. Decision: what will you do differently based on the analysis?

2. Object: protocol, pool, token, vault strategy, or wallet cohort?

3. Time horizon: intraday, weekly, quarterly?

4. Outcome metric: what counts as success or failure?

Example decisions that map well to AI

Key insight: AI is strongest when the target is measurable (e.g., drawdown probability, liquidation frequency, fee-to-emissions ratio), not when the target is “good narrative.”

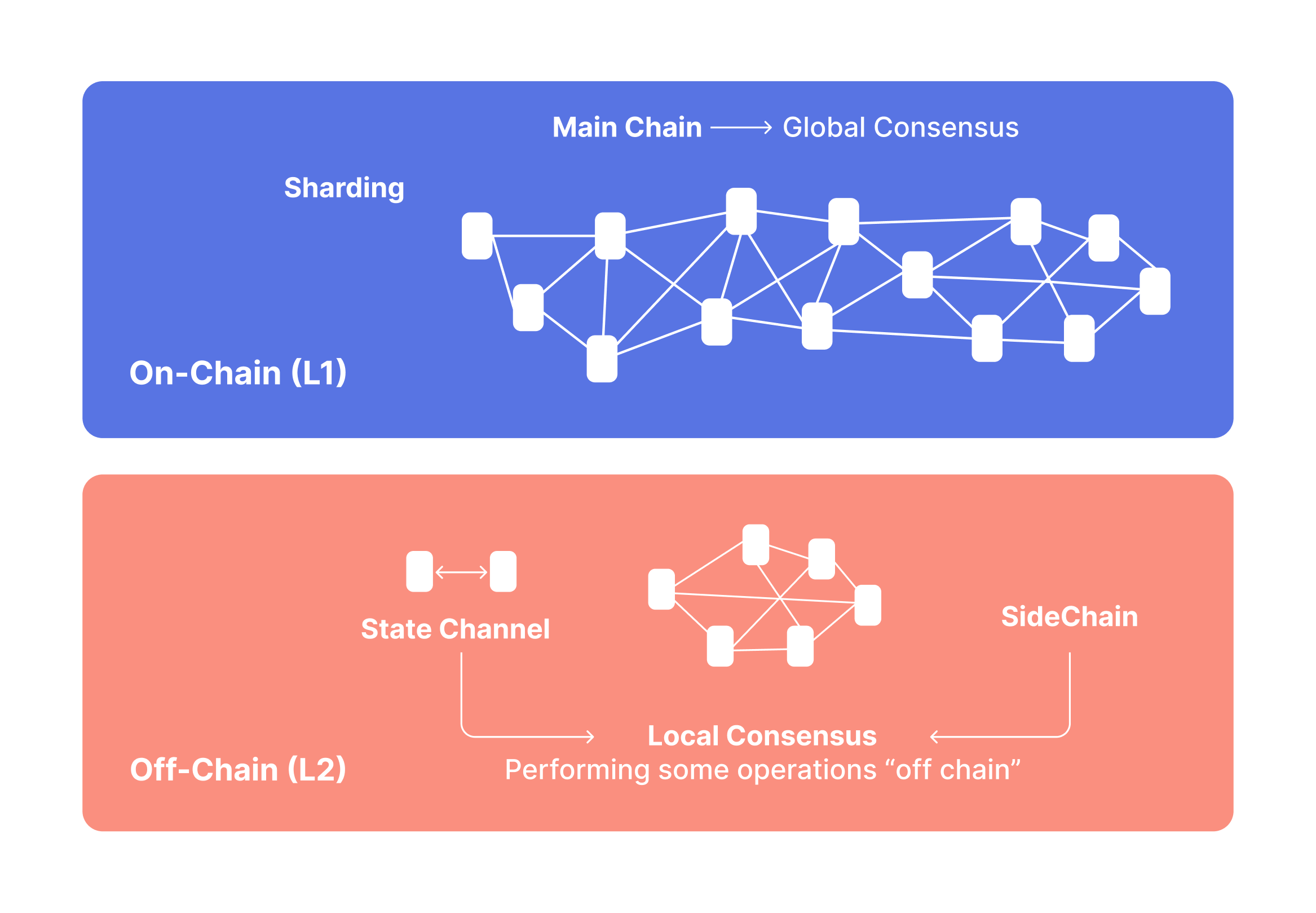

Step 1: Build your on-chain data foundation (sources + reproducibility)

A practical on-chain workflow needs two layers: raw chain truth and enriched context.

A. Raw chain truth (canonical inputs)

At minimum, plan to collect:

Pro tip: treat every dataset as a versioned snapshot:
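One way to make “versioned snapshot” concrete is a small manifest that pins each extract to a fixed block range and a code version. This is a sketch under assumptions, not a standard. The class name SnapshotManifest and its fields are hypothetical, and the ID is simply a truncated SHA-256 of the manifest serialized as JSON.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class SnapshotManifest:
    """Hypothetical record pinning one dataset to a fixed, re-queryable block range."""
    dataset: str        # e.g. "fact_swaps"
    chain: str          # e.g. "ethereum"
    start_block: int    # inclusive
    end_block: int      # inclusive
    code_version: str   # version of the extraction query/script, e.g. a git commit

    def __post_init__(self) -> None:
        if self.start_block > self.end_block:
            raise ValueError("start_block must not exceed end_block")

    def snapshot_id(self) -> str:
        """Deterministic ID: identical inputs always produce the same ID."""
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()[:16]
```

Storing snapshot_id next to every model run lets you trace any result back to the exact block range and extraction code that produced it.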

B. Enrichment (context you’ll need for “meaning”)

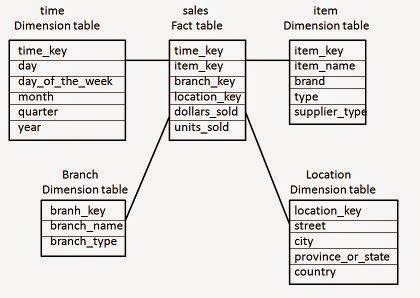

Minimal reproducible schema (what you want in your warehouse)

Think in “fact tables” and “dimensions”:

fact_swaps(chain, block_time, tx_hash, pool, token_in, token_out, amount_in, amount_out, trader, fee_paid)

fact_borrows(chain, block_time, market, borrower, asset, amount, rate_mode, health_factor)

dim_address(address, label, type, confidence, source)

dim_token(token, decimals, is_wrapped, underlying, risk_flags)

dim_pool(pool, protocol, pool_type, fee_tier, token0, token1)
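As a starting point, here is a minimal sketch that creates these tables in SQLite with Python’s standard-library sqlite3 module. The column types are assumptions that do not appear in the schema above. Raw token amounts are stored as TEXT because uint256 values overflow SQLite’s 64-bit INTEGER, and block_time is assumed to be Unix seconds.

```python
import sqlite3

# DDL mirroring the fact and dimension tables above. Column types are
# assumptions: raw token amounts are TEXT so uint256 values are never
# truncated, and block_time is stored as Unix seconds.
SCHEMA = """
CREATE TABLE fact_swaps (
    chain TEXT, block_time INTEGER, tx_hash TEXT, pool TEXT,
    token_in TEXT, token_out TEXT, amount_in TEXT, amount_out TEXT,
    trader TEXT, fee_paid TEXT
);
CREATE TABLE fact_borrows (
    chain TEXT, block_time INTEGER, market TEXT, borrower TEXT,
    asset TEXT, amount TEXT, rate_mode TEXT, health_factor REAL
);
CREATE TABLE dim_address (
    address TEXT, label TEXT, type TEXT, confidence REAL, source TEXT
);
CREATE TABLE dim_token (
    token TEXT, decimals INTEGER, is_wrapped INTEGER,
    underlying TEXT, risk_flags TEXT
);
CREATE TABLE dim_pool (
    pool TEXT, protocol TEXT, pool_type TEXT, fee_tier INTEGER,
    token0 TEXT, token1 TEXT
);
"""


def create_warehouse(path: str = ":memory:") -> sqlite3.Connection:
    """Create the five tables in a SQLite file (in memory by default)."""
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn
```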

Name columns consistently: use the same snake_case identifiers (block_time, tx_hash, pool) in every table so downstream features don’t break.

Step 2: Normalize entities (addresses → actors)

AI models don’t think in hex strings; they learn from behavioral patterns. Your job is to convert addresses into stable “entities” where possible.

Practical labeling approach (fast → better)

Start with three tiers:

What to store for every label

label (e.g., “MEV bot”, “protocol treasury”)

confidence (0–1)

evidence (rules triggered, heuristics, links)

valid_from / valid_to (labels change!)
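Here is a minimal sketch of a time-bounded label record using Python dataclasses. The names AddressLabel and labels_at are hypothetical. Validity is treated as a half-open interval [valid_from, valid_to), so a relabel at time t never overlaps the previous label.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import List, Optional


@dataclass
class AddressLabel:
    """One time-bounded label for an address (a sketch, not a standard schema)."""
    address: str
    label: str                                          # e.g. "MEV bot", "protocol treasury"
    confidence: float                                   # 0-1
    valid_from: datetime
    valid_to: Optional[datetime] = None                 # None = still valid
    evidence: List[str] = field(default_factory=list)   # rules/heuristics that fired

    def __post_init__(self) -> None:
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError("confidence must be in [0, 1]")

    def is_valid_at(self, ts: datetime) -> bool:
        # Half-open interval [valid_from, valid_to)
        return self.valid_from <= ts and (self.valid_to is None or ts < self.valid_to)


def labels_at(labels, address, ts, min_confidence=0.0):
    """Labels for `address` that were valid at time `ts` and meet a confidence floor."""
    return [lab for lab in labels
            if lab.address == address
            and lab.is_valid_at(ts)
            and lab.confidence >= min_confidence]
```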

Wallet clustering: keep it conservative

Clustering can help (e.g., grouping addresses controlled by one operator), but it can also poison your dataset if it’s wrong.

| Entity task | What it unlocks | Common pitfall |
|---|---|---|
| Contract classification | Protocol-level features | Proxy/upgrade patterns mislead |
| Wallet clustering | Cohort flows | False merges from shared funders |
| Bot detection | Clean “organic” signals | Label drift as bots adapt |
| Treasury identification | Real yield analysis | Mixing treasury vs user fees |
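The conservative stance can be encoded directly: merge two addresses only when at least two independent types of evidence link them. The sketch below uses union-find. The evidence-type strings and the min_evidence=2 threshold are illustrative assumptions, not established heuristics.

```python
from collections import defaultdict


def cluster_addresses(edges, min_evidence=2):
    """Conservative clustering. edges: iterable of (addr_a, addr_b, evidence_type)."""
    # Collect the distinct evidence types seen for each unordered address pair.
    evidence = defaultdict(set)
    for a, b, kind in edges:
        evidence[(a, b) if a <= b else (b, a)].add(kind)

    parent = {}

    def find(x):
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for (a, b), kinds in evidence.items():
        root_a, root_b = find(a), find(b)  # also registers both addresses
        # Merge only when enough independent evidence types agree.
        if len(kinds) >= min_evidence and root_a != root_b:
            parent[root_b] = root_a

    clusters = defaultdict(set)
    for addr in parent:
        clusters[find(addr)].add(addr)
    return list(clusters.values())
```

Merges are still transitive, so one bad pair can join two large clusters. Checking cluster sizes after each run is a cheap safeguard.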

Step 3: Feature engineering for DeFi (the “economic truth” layer)

This is where AI becomes useful. Your model learns from features—so design features that reflect mechanisms, not just “numbers.”

A. DEX & liquidity features (execution reality)

Useful features include:

Bold rule: If you care about tradability, model slippage under stress, not “average daily volume.”
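To make “slippage under stress” concrete, the sketch below prices a trade against a Uniswap-v2-style constant-product pool (x * y = k, with the 0.3% fee taken from the input). It then re-prices the same trade after a hypothetical 50% liquidity withdrawal. Concentrated-liquidity pools such as Uniswap v3 need tick-level depth instead, so treat this as a first approximation.

```python
def cp_amount_out(amount_in: float, reserve_in: float, reserve_out: float,
                  fee: float = 0.003) -> float:
    """Output of a constant-product (x * y = k) swap; the fee is taken from the input."""
    effective_in = amount_in * (1 - fee)
    return effective_in * reserve_out / (reserve_in + effective_in)


def slippage(amount_in, reserve_in, reserve_out, fee=0.003):
    """Shortfall of the executed price versus the pre-trade mid price (fee included)."""
    mid_price = reserve_out / reserve_in
    executed_price = cp_amount_out(amount_in, reserve_in, reserve_out, fee) / amount_in
    return 1 - executed_price / mid_price


def stressed_slippage(amount_in, reserve_in, reserve_out, liquidity_drop=0.5, fee=0.003):
    """Same trade after a hypothetical proportional withdrawal of liquidity."""
    keep = 1 - liquidity_drop
    return slippage(amount_in, reserve_in * keep, reserve_out * keep, fee)
```

Take 1M/1M reserves and no fee. A 10,000-unit trade slips about 0.99%. Halve both reserves and the same trade slips about 1.96%. This is the kind of gap that average daily volume hides.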

B. Lending features (insolvency & reflexivity)

C. “Real yield” vs incentive yield (sustainability core)

DeFi yields often mix:

A practical decomposition:

gross_yield = fee_yield + incentive_yield

real_yield ≈ fee_yield - dilution_cost (where dilution cost is context-dependent, but you should at least track emissions as a percentage of market cap and circulating supply growth)
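Here is a minimal sketch of the decomposition above, assuming annualized USD inputs. The function name and dict keys are hypothetical. dilution_cost is left as an explicit input because it is context-dependent. The function only reports supply growth alongside it.

```python
def yield_breakdown(annual_fees_usd, annual_incentives_usd, tvl_usd,
                    annual_emissions_tokens, circulating_supply, dilution_cost=0.0):
    """Split gross yield into fee and incentive parts (a sketch, not a standard formula).

    dilution_cost is supplied by the analyst as an annual rate; it is not derived here.
    """
    fee_yield = annual_fees_usd / tvl_usd
    incentive_yield = annual_incentives_usd / tvl_usd
    return {
        "fee_yield": fee_yield,
        "incentive_yield": incentive_yield,
        "gross_yield": fee_yield + incentive_yield,
        # Circulating supply growth from emissions, tracked next to the yield
        "supply_growth": annual_emissions_tokens / circulating_supply,
        "real_yield": fee_yield - dilution_cost,
    }
```

For example, take $50k of annual fees and $150k of incentives on $1M TVL. That gives a 20% gross yield, of which only 5 percentage points come from fees. With an assumed 2% dilution cost, real yield is about 3%.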

Key insight: sustainable yield is rarely the highest yield. It’s the yield that survives when incentives taper.

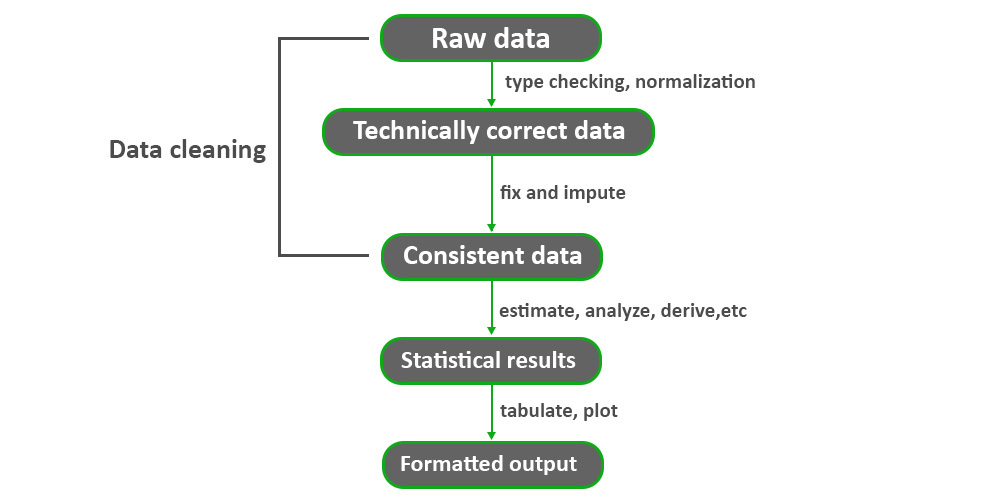

Step 4: Label the target (what you want the model to predict)

Many DeFi datasets fail because labels are vague. Good targets are specific and measurable.

Examples of model targets

Avoid label leakage

If your label uses future information (like a later exploit), ensure your features only use data available before the event. Otherwise the model “cheats.”
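A common way to enforce this is a point-in-time (“as-of”) join: each labeled event only sees the latest feature snapshot taken strictly before it. Below is a stdlib-only sketch; the function names are hypothetical.

```python
from bisect import bisect_left


def features_as_of(feature_times, feature_rows, event_time):
    """Latest feature row observed strictly before event_time, else None.

    feature_times must be sorted ascending and aligned with feature_rows.
    bisect_left finds the first timestamp >= event_time, so every row to its
    left was known before the event; same-timestamp rows are excluded.
    """
    i = bisect_left(feature_times, event_time)
    return feature_rows[i - 1] if i > 0 else None


def build_training_set(events, feature_times, feature_rows):
    """Pair each (event_time, label) with point-in-time features; skip events with no history."""
    pairs = []
    for event_time, label in events:
        feats = features_as_of(feature_times, feature_rows, event_time)
        if feats is not None:
            pairs.append((feats, label))
    return pairs
```

pandas users can get the same behavior from pandas.merge_asof with allow_exact_matches=False, which matches only strictly earlier keys.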

Step 5: Choose the right AI approach (and where LLMs fit)

Different DeFi questions map to different model families.

A. Time-series forecasting (when dynamics matter)

Use when you predict:

B. Classification & ranking (when you pick “top candidates”)

Use when you need:

C. Anomaly detection (when you don’t know the attack yet)

Useful for:
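A simple, robust baseline for flagging unusual flows, such as a pool’s daily net outflows, is the modified z-score of Iglewicz and Hoaglin. It uses the median and the median absolute deviation (MAD), so a single huge outlier cannot hide itself by inflating the mean and standard deviation. This technique is a swapped-in illustration, not one named in the text. The 3.5 cutoff is the commonly used default.

```python
from statistics import median


def robust_zscores(values):
    """Modified z-scores: 0.6745 * (x - median) / MAD."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # Limitation: with zero MAD (e.g. a mostly flat series) nothing is scored.
        return [0.0] * len(values)
    return [0.6745 * (v - med) / mad for v in values]


def flag_anomalies(values, threshold=3.5):
    """Indices whose absolute modified z-score exceeds the threshold."""
    return [i for i, z in enumerate(robust_zscores(values)) if abs(z) > threshold]
```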

D. Graph learning (when relationships are the signal)

On-chain is naturally a graph: wallets ↔ contracts ↔ pools ↔ assets. Graph-based features can outperform flat tables for:

Where LLMs help (and where they don’t)

LLMs are great for:

LLMs are not a substitute for:

A practical hybrid:

Step 6: Evaluation and backtesting (the non-negotiable part)

DeFi is non-stationary. If you don’t evaluate carefully, your “signal” is a mirage.

A. Split by time, not randomly

Use time-based splits:
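Here is a minimal walk-forward splitter, assuming rows are already sorted by block time. Each test window sits strictly after its training window, with an optional gap (an embargo) in between. This version uses a fixed-length rolling window. For an expanding window, keep the training start fixed at row 0 instead.

```python
from typing import Iterator, Tuple


def walk_forward_splits(n_rows: int, train_size: int, test_size: int,
                        gap: int = 0) -> Iterator[Tuple[range, range]]:
    """Yield (train, test) index ranges over time-ordered rows.

    `gap` rows are skipped between train and test so that features built on
    rolling windows cannot straddle the boundary.
    """
    start = 0
    while start + train_size + gap + test_size <= n_rows:
        test_start = start + train_size + gap
        yield range(start, start + train_size), range(test_start, test_start + test_size)
        start += test_size  # slide forward by one test window
```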

B. Track both accuracy and decision quality

In DeFi, you often care about ranking and risk, not just “accuracy.”

A simple evaluation checklist

1. Define the decision rule (e.g., “avoid if risk score > 0.7”)

2. Backtest with transaction costs & slippage assumptions

3. Run stress regimes (high gas, high volatility, liquidity crunch)

4. Compare against baselines (simple heuristics often win)

5. Store an audit trail (features, model version, snapshot blocks)
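Steps 1, 2, and 4 can be sketched in a few lines. The code applies the “avoid if risk score > 0.7” rule period by period and charges a flat cost on every entry and exit. Running it with all-zero scores gives an always-hold baseline. The flat cost is a hypothetical stand-in for gas and slippage, and the function name is illustrative.

```python
def backtest_avoid_rule(scores, returns, threshold=0.7, cost=0.001):
    """Hold each period unless the risk score exceeds `threshold`.

    scores[i] must be computed only from data available before period i;
    returns[i] is that period's simple return. Returns (total_return, max_drawdown).
    """
    equity, peak, max_drawdown = 1.0, 1.0, 0.0
    in_position = False
    for score, period_return in zip(scores, returns):
        want_position = score <= threshold   # "avoid if risk score > threshold"
        if want_position != in_position:
            equity *= 1 - cost               # pay on every entry or exit
            in_position = want_position
        if in_position:
            equity *= 1 + period_return
        peak = max(peak, equity)
        max_drawdown = max(max_drawdown, 1 - equity / peak)
    return equity - 1, max_drawdown
```

Take scores [0.1, 0.9, 0.2] and returns [+5%, -30%, +2%] with no cost. The rule returns about +7.1% with no drawdown, while always holding loses about 25% with a 30% max drawdown. On real data, the baseline often holds up better than this toy case suggests.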

| Evaluation layer | What you measure | Why it matters |
|---|---|---|
| Predictive | AUC / error | Signal quality |
| Economic | PnL / drawdown / slippage | Real-world viability |
| Operational | latency / stability | Can it run daily? |
| Safety | false positives/negatives | Risk appetite alignment |

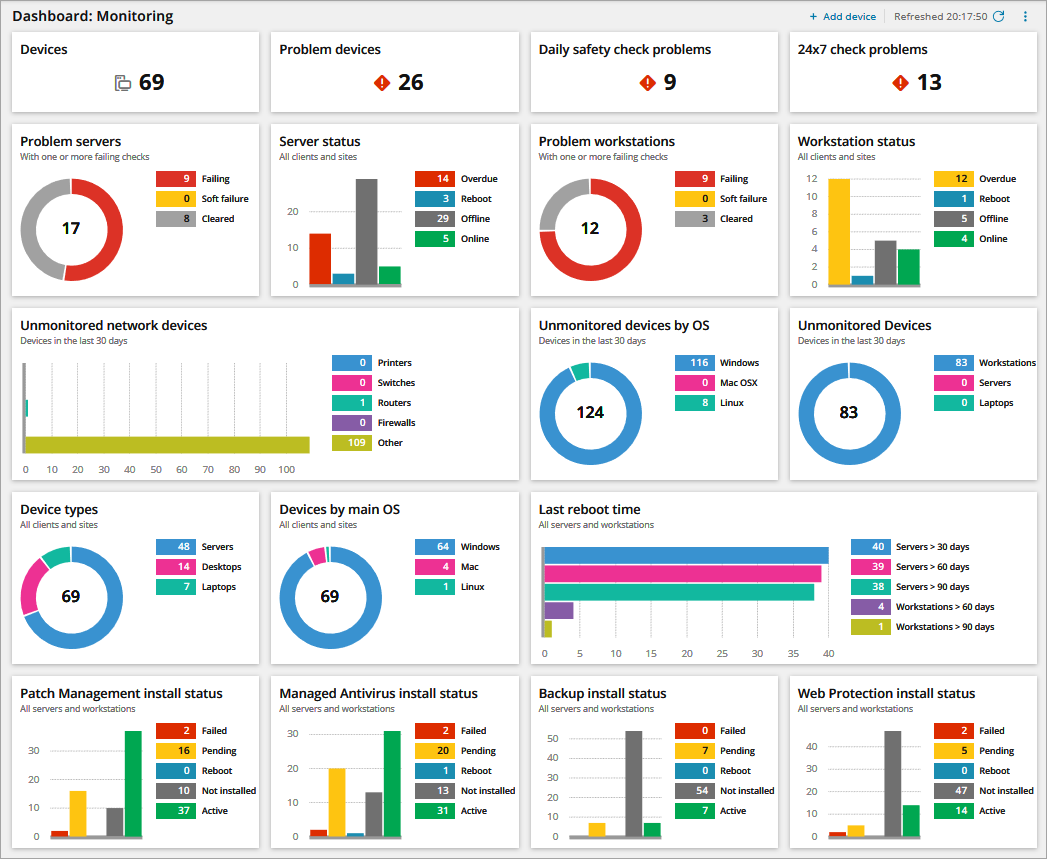

Step 7: Deploy as a loop (not a one-off report)

A real “practical workflow” is a loop you can run every day/week.

Core production loop

Monitoring that matters in DeFi

Practical rule: if you can’t explain why the model changed its score, you can’t trust it in a reflexive market.
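Score drift is one thing worth monitoring. The sketch below computes the Population Stability Index (PSI) between a reference window and a live window of one feature, or of the model’s scores. PSI is a swapped-in example, not a method named in this workflow. A commonly cited rule of thumb treats PSI above 0.25 as a significant shift, but the threshold should be calibrated per feature.

```python
import math


def population_stability_index(reference, live, bins=10, floor=1e-6):
    """PSI between two non-empty samples, using equal-width bins fitted on `reference`."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # avoid zero width if reference is constant

    def bin_shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values to edge bins
        # Floor empty bins so the log term stays finite
        return [max(c / len(values), floor) for c in counts]

    ref_shares, live_shares = bin_shares(reference), bin_shares(live)
    return sum((ls - rs) * math.log(ls / rs) for rs, ls in zip(ref_shares, live_shares))
```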

A worked example: “Is this APY real?”

Let’s apply the workflow to a common DeFi trap: attractive yields that are mostly incentives.

Step-by-step

Compute:

fee_revenue_usd (trading fees / borrow interest)

incentives_usd (emissions + bribes + rewards)

net_inflows_usd (is TVL organic or mercenary?)

user_return_estimate (fee revenue net of impermanent loss or borrow costs, where relevant)

A simple sustainability ratio:

fee_to_incentive = fee_revenue_usd / max(incentives_usd, 1)

Interpretation:

fee_to_incentive > 1.0 often indicates fee-backed yield

fee_to_incentive < 0.3 suggests incentives dominate
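The ratio and its two thresholds translate directly into a small helper. The label for the in-between band (0.3 to 1.0) is an assumption, since the text only defines the two ends.

```python
def fee_to_incentive(fee_revenue_usd, incentives_usd):
    # max(..., 1) guards against division by zero when incentives are ~0
    return fee_revenue_usd / max(incentives_usd, 1)


def classify_yield(fee_revenue_usd, incentives_usd):
    """Return (ratio, interpretation) using the thresholds above."""
    ratio = fee_to_incentive(fee_revenue_usd, incentives_usd)
    if ratio > 1.0:
        return ratio, "likely fee-backed"
    if ratio < 0.3:
        return ratio, "incentive-dominated"
    return ratio, "mixed: check trend and TVL churn"
```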

| Metric | What it tells you | Red flag threshold |
|---|---|---|
| fee_to_incentive | fee-backed vs emissions | < 0.3 |
| TVL churn | mercenary liquidity | high weekly churn |
| whale share | concentration risk | top 5 > 40% |
| MEV intensity | execution toxicity | rising sandwich rate |
| net fees per TVL | efficiency | falling trend |
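Some rows of the table are easy to compute from balances and flows. top_n_share applies the “top 5 > 40%” threshold directly. The churn formula is a hypothetical proxy, since “high weekly churn” is not defined numerically. All function names here are illustrative.

```python
def top_n_share(balances, n=5):
    """Share of the total held by the n largest positions (0.0 if empty)."""
    total = sum(balances)
    if total == 0:
        return 0.0
    return sum(sorted(balances, reverse=True)[:n]) / total


def weekly_churn(deposits_usd, withdrawals_usd, avg_tvl_usd):
    """Hypothetical churn proxy: mean of gross inflow and outflow, relative to average TVL."""
    return (deposits_usd + withdrawals_usd) / (2 * avg_tvl_usd)


def red_flags(balances, fee_revenue_usd, incentives_usd):
    """Check two of the table's thresholds; returns a list of triggered flags."""
    flags = []
    if top_n_share(balances) > 0.40:
        flags.append("whale share: top 5 > 40%")
    if fee_revenue_usd / max(incentives_usd, 1) < 0.3:
        flags.append("fee_to_incentive < 0.3")
    return flags
```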

Add AI:

Forecast fee_revenue_usd under multiple volume scenarios
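A deterministic scenario table gives a baseline that any fee-revenue forecast should be compared against. The sketch assumes fee revenue scales linearly with volume (revenue = volume × fee rate × LP share). The scenario names, multipliers, and the lp_share parameter are hypothetical.

```python
def fee_scenarios(base_daily_volume_usd, fee_rate, lp_share=1.0,
                  multipliers=None, days=30):
    """Projected fee revenue per scenario over `days`, assuming fees scale linearly with volume."""
    multipliers = multipliers or {"bear": 0.5, "base": 1.0, "bull": 1.5}
    return {
        name: base_daily_volume_usd * m * fee_rate * lp_share * days
        for name, m in multipliers.items()
    }
```

At $2M daily volume and a 0.3% fee, the 30-day figures are roughly $90k, $180k, and $270k. Dividing each by the incentive budget shows whether fee_to_incentive stays above the red-flag line in the bear case.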

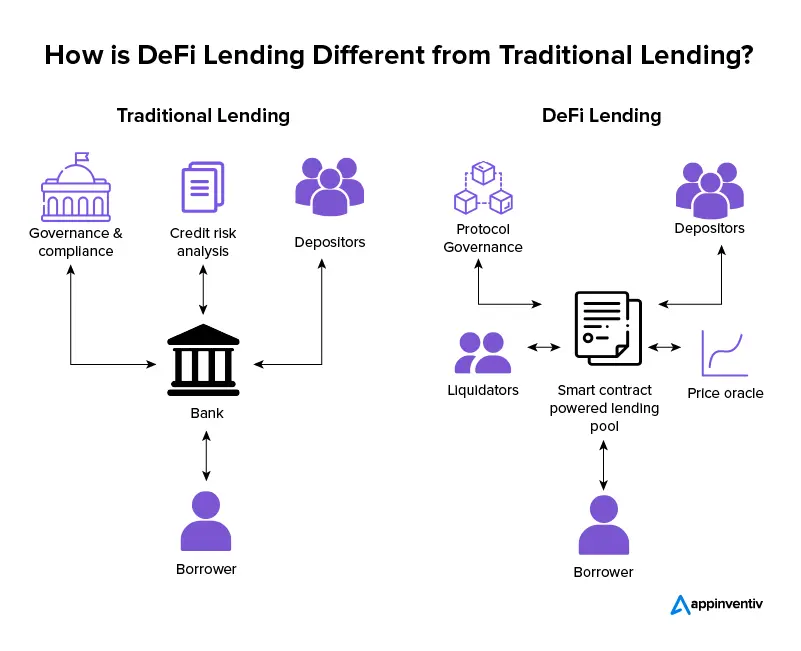

How does AI for DeFi data analysis work on-chain?

AI for DeFi data analysis works on-chain by transforming low-level blockchain artifacts (transactions, logs, traces, and state) into economic features (fees, leverage, liquidity depth, risk concentration), then learning patterns that predict outcomes you can measure (yield sustainability, liquidity shocks, insolvency risk, anomalous flows). The “AI” part is only as good as:

If you treat the workflow as a repeatable system—like the staged research approach emphasized in SimianX-style multi-step analysis—you get models that improve over time instead of brittle one-off insights.

Practical tooling: a minimal stack you can actually run

You don’t need a huge team, but you do need discipline.

A. Data layer

B. Analytics layer

C. “Research agent” layer (optional but powerful)

This is where a multi-agent mindset shines:

This is also where SimianX AI can be a helpful mental model: instead of relying on a single “all-knowing” analysis, use specialized perspectives and force explicit tradeoffs—then output a clear, structured report. You can explore the platform approach at SimianX AI.

Common failure modes (and how to avoid them)

FAQ About AI for DeFi Data Analysis: A Practical On-Chain Workflow

How to build on-chain features for machine learning in DeFi?

Start from protocol mechanics: map events to economics (fees, debt, collateral, liquidity depth). Use rolling windows, avoid leakage, and store feature definitions with versioning so you can reproduce results.

What is real yield in DeFi, and why does it matter?

Real yield is yield primarily backed by organic protocol revenue (fees/interest) rather than token emissions. It matters because emissions can fade, while fee-backed returns often persist (though they can still be cyclical).

What’s the best way to backtest DeFi signals without fooling yourself?

Split by time, include transaction costs and slippage, and test across stress regimes. Always compare to simple baselines; if your model can’t beat a heuristic reliably, it’s probably overfit.

Can LLMs replace quantitative on-chain analysis?

LLMs can speed up interpretation—summarizing proposals, extracting assumptions, organizing checklists—but they can’t replace correct event decoding, rigorous labeling, and time-based evaluation. Use LLMs to structure research, not to “hallucinate” the chain.

How do I detect incentive-driven (mercenary) liquidity?

Track TVL churn, fee-to-incentive ratios, and wallet cohort composition. If liquidity appears when incentives spike and leaves quickly afterward, treat yield as fragile unless fees independently support it.

Conclusion

AI becomes genuinely valuable in DeFi when you turn on-chain noise into a repeatable workflow: decision-first framing, reproducible datasets, conservative entity labeling, mechanism-based features, time-split evaluation, and continuous monitoring. Follow this practical on-chain loop and you’ll produce analysis that’s comparable across protocols, resilient to regime changes, and explainable to teammates or stakeholders.

If you want a structured way to run staged, multi-perspective research (and to translate complex data into clear, shareable outputs), explore SimianX AI as a model for organizing rigorous analysis into an actionable workflow.