AI stock analysis vs human research: time, cost, accuracy

If you’ve ever tried to decide whether AAPL, TSLA, or NVDA is “cheap” or “expensive,” you already know the real challenge: stock research is a race against time. News hits mid-session, filings are dense, and price action moves faster than any one person can read. This is why AI stock analysis vs human research has shifted from a philosophical debate to a practical workflow decision for investors and teams. Platforms like SimianX AI bring multi-agent analysis, debate, and downloadable PDF reports to the process—changing what “research coverage” can look like for a small team or solo investor. (S5)

What are we really comparing: time, cost, and accuracy?

Most “AI vs human” debates fall apart because they compare different things. To make this comparison fair, define three measurable outcomes: time, cost, and accuracy.

The best comparison is not “Who is smarter?” but “Who gets you to a verifiable decision faster, cheaper, and with fewer avoidable errors?”

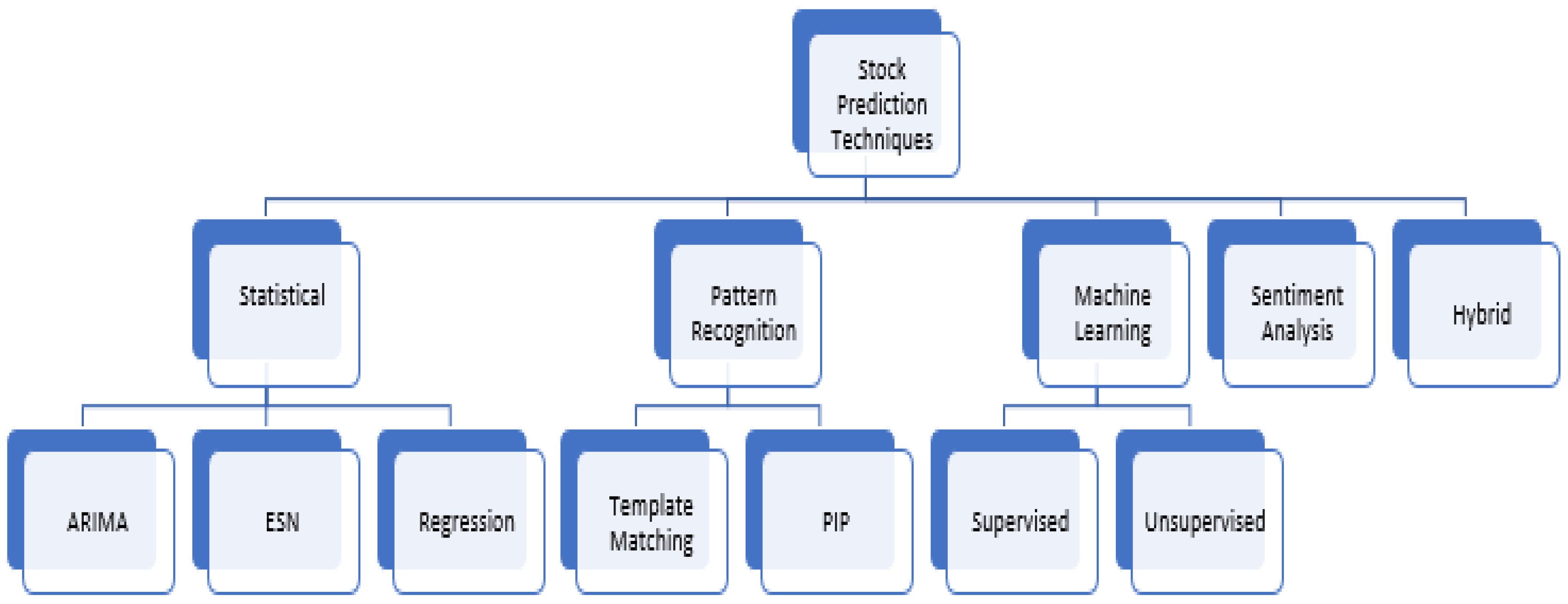

A quick taxonomy of stock-research tasks

Not all “analysis” is forecasting. In real workflows, research breaks into three buckets:

1. Information extraction (e.g., pulling revenue, margins, guidance, and risk factors from a 10-Q)

2. Interpretation and synthesis (e.g., connecting filings, macro context, and sentiment into a thesis)

3. Decision support (e.g., portfolio sizing, entry/exit plans, downside scenarios)

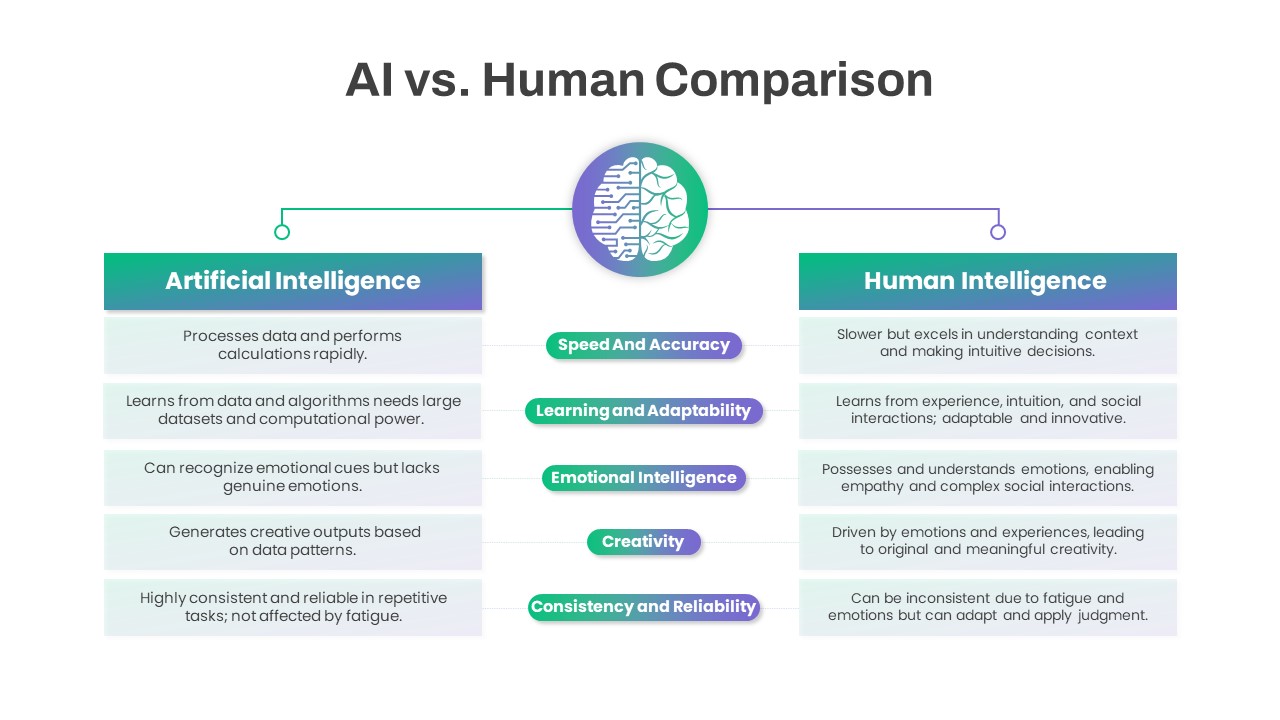

AI and humans often excel in different buckets—so your evaluation should score each separately.

Time: the real advantage is “time-to-verified insight”

When people say AI is “faster,” they usually mean time-to-first-answer. In investing, what matters is time-to-verified insight—how quickly you can reach a conclusion you can defend.

Where AI tends to win on time

AI systems are strong at compressing reading and cross-referencing:

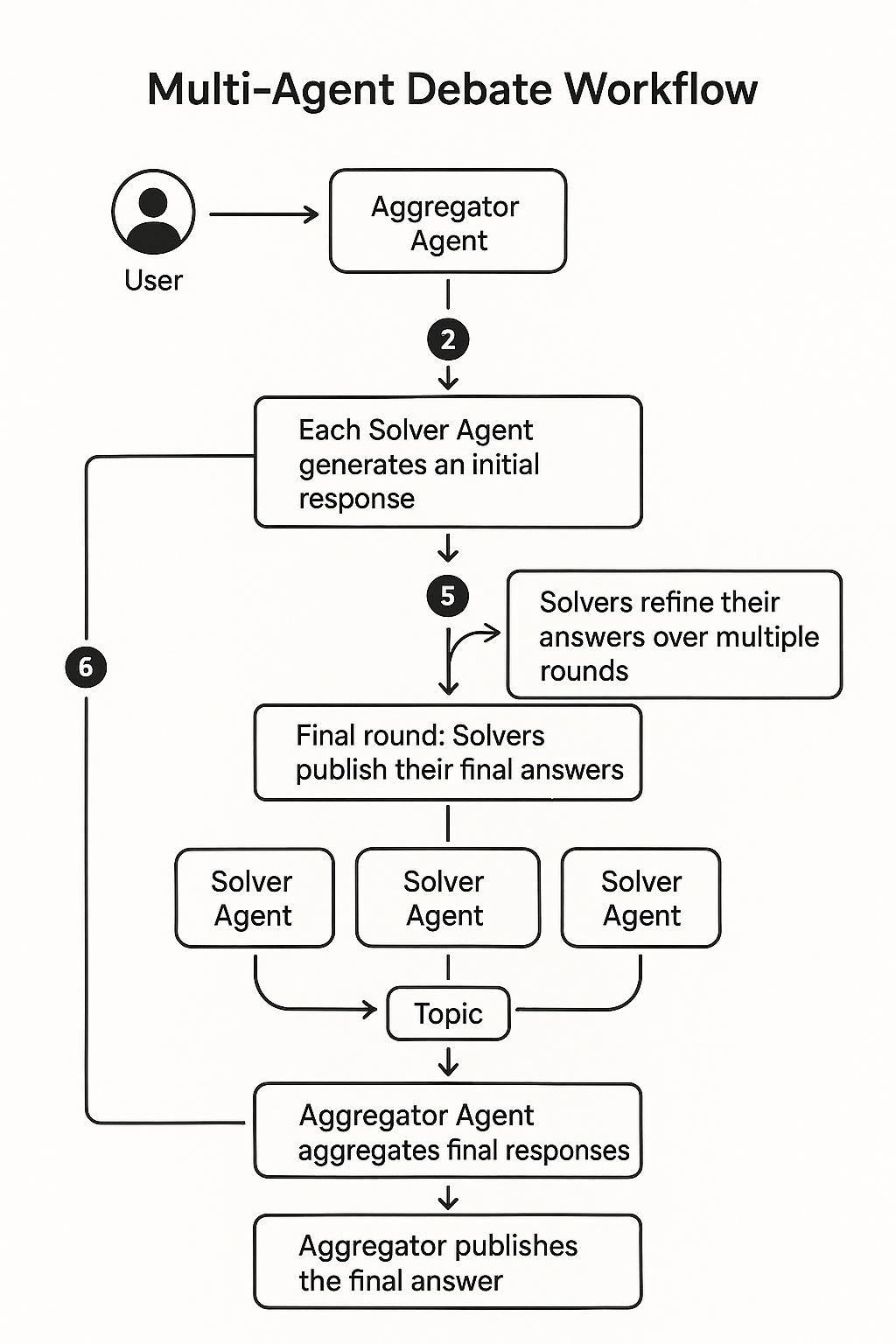

In a multi-agent setup, parallelization matters: multiple specialized agents can process different angles simultaneously (fundamentals, technicals, sentiment, timing), then reconcile conflicts into a single decision-ready brief.
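As a rough illustration of that parallel-then-reconcile pattern, here is a minimal Python sketch. It is not how any particular platform is implemented: the four agent functions are hypothetical stand-ins that return hard-coded placeholder scores, and the reconciliation rule (flag for human review when the views are far apart) is an assumption chosen for simplicity.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical stand-in agents. Each returns a view in [-1, 1]
# (bearish to bullish). A real agent would call a model or a data
# source; these placeholder values are made up for illustration.
def fundamentals_agent(ticker):
    return 0.6

def technicals_agent(ticker):
    return 0.2

def sentiment_agent(ticker):
    return -0.5

def timing_agent(ticker):
    return 0.1

AGENTS = {
    "fundamentals": fundamentals_agent,
    "technicals": technicals_agent,
    "sentiment": sentiment_agent,
    "timing": timing_agent,
}

def run_agents(ticker, agents=AGENTS):
    """Run every agent on the same ticker concurrently."""
    with ThreadPoolExecutor(max_workers=len(agents)) as pool:
        futures = {name: pool.submit(fn, ticker) for name, fn in agents.items()}
        return {name: future.result() for name, future in futures.items()}

def reconcile(views, disagreement_threshold=1.0):
    """Combine the views and flag large disagreements for a human."""
    scores = list(views.values())
    spread = max(scores) - min(scores)
    return {
        "mean_score": sum(scores) / len(scores),
        "spread": spread,
        "needs_human_review": spread >= disagreement_threshold,
    }

views = run_agents("NVDA")
brief = reconcile(views)
print(views)
print(brief)
```

The design choice worth noting is that disagreement is treated as a signal rather than averaged away: a wide spread between agents is exactly the case where a human should look first.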

Where humans still win on time (surprisingly)

Humans can be faster when the job is:

Humans also shortcut with experience: a seasoned analyst may spot a “red flag” in minutes that an AI will only surface if prompted correctly.

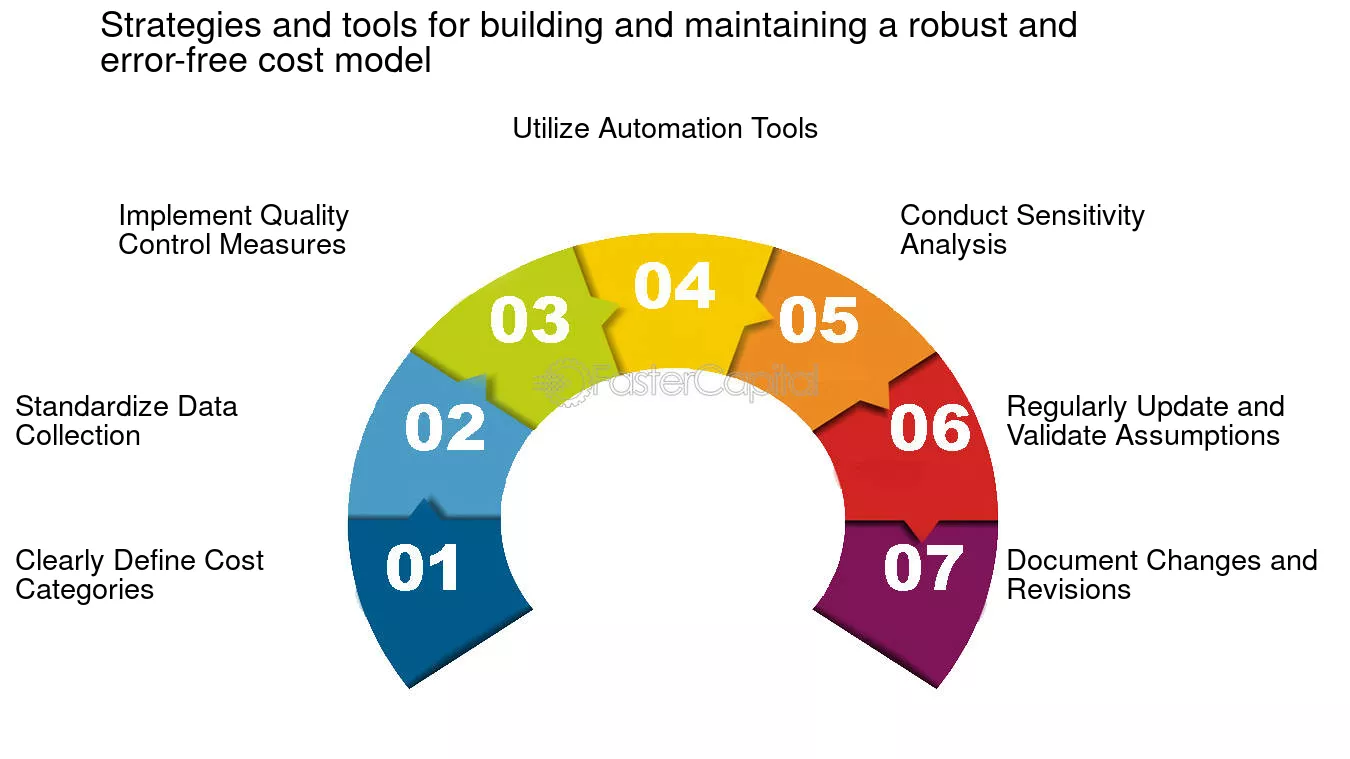

Cost: don’t forget the “error tax”

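Cost is not only what you pay upfront. A clean cost model includes three layers: what you pay for tools, what you pay for people's time, and the expected cost of mistakes (the “error tax”).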

A simple way to model it:

total_cost = tool_cost + (hours × hourly_rate) + (error_probability × error_impact)
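The formula above translates directly into a few lines of Python. The sketch below compares three workflows for one month of coverage. Every input is a hypothetical placeholder, not a benchmark; plug in your own tool prices, hours, and error estimates.

```python
def total_cost(tool_cost, hours, hourly_rate, error_probability, error_impact):
    """Expected cost of one research workflow over one period.

    Mirrors the formula in the text: tool spend, plus labor,
    plus the expected cost of a mistake (the "error tax").
    """
    return tool_cost + hours * hourly_rate + error_probability * error_impact

# All numbers below are made up for illustration only.
human_only = total_cost(tool_cost=0, hours=40, hourly_rate=75,
                        error_probability=0.05, error_impact=10_000)
ai_only = total_cost(tool_cost=100, hours=2, hourly_rate=75,
                     error_probability=0.20, error_impact=10_000)
hybrid = total_cost(tool_cost=100, hours=12, hourly_rate=75,
                    error_probability=0.05, error_impact=10_000)

for name, cost in [("human-only", human_only),
                   ("AI-only", ai_only),
                   ("hybrid", hybrid)]:
    print(f"{name}: {cost:,.0f}")
```

With these assumed inputs the error term dominates the AI-only total, which is why the ranking is sensitive to how honestly you estimate error_probability and error_impact.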

Typical cost structures

Human research cost scales with headcount. If you need coverage on 100+ tickers, you either narrow the universe, hire more analysts, or accept slower updates.

AI research cost scales with usage (queries, reports, data). It can be dramatically cheaper per ticker once the pipeline is set up, especially for routine monitoring and standardized outputs (like a one-page brief or a PDF research report).

The cheapest research is not “AI-only.” It’s research that reduces the error tax by combining machine speed with human verification.

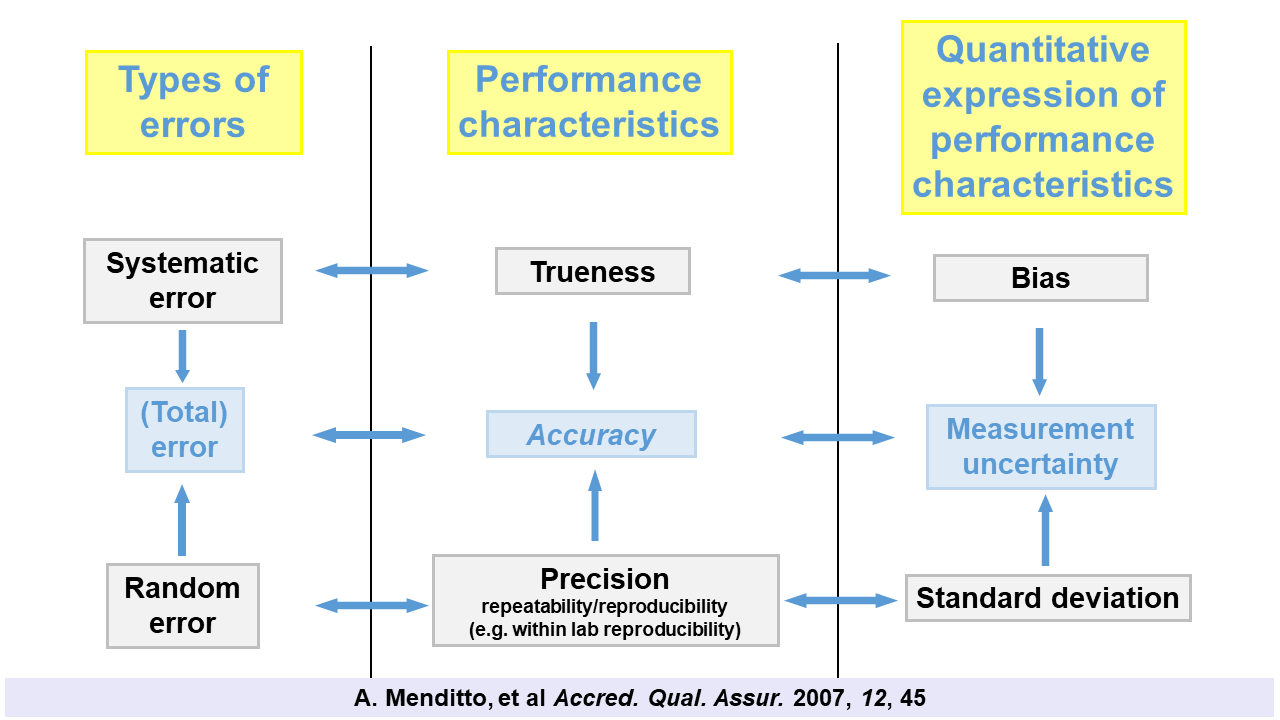

Accuracy: define it before you measure it

Accuracy is the trickiest dimension, because it depends on the question.

Three kinds of accuracy you should measure

| Accuracy type | What it means | Example metric | Why it matters |
|---|---|---|---|
| Factual accuracy | Correct numbers and statements | % of extracted fields correct | Prevents “wrong inputs” |
| Analytical accuracy | Correct reasoning given the facts | rubric scoring, consistency checks | Prevents plausible nonsense |
| Predictive accuracy | Correct future-oriented calls | hit rate, calibration, risk-adjusted return | Prevents overconfident forecasts |

Factual accuracy is easiest to test: you can check whether the model pulled the right figure from a filing.

Predictive accuracy is hardest: markets are noisy, and a correct narrative can still lose money.
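Because factual accuracy is the easiest of the three to test, it is a good place to start automating. The sketch below scores the "% of extracted fields correct" metric from the table. The field names, the values, and the 0.5% numeric tolerance are all hypothetical choices for illustration.

```python
import math

def factual_accuracy(extracted, ground_truth, rel_tol=0.005):
    """Share of ground-truth fields that the extraction got right.

    Numeric fields match within a relative tolerance (to absorb
    rounding); text fields match case-insensitively. A missing
    field counts as wrong.
    """
    if not ground_truth:
        raise ValueError("ground_truth must contain at least one field")
    correct = 0
    for field, truth in ground_truth.items():
        value = extracted.get(field)
        if value is None:
            continue
        both_numeric = isinstance(truth, (int, float)) and isinstance(value, (int, float))
        if both_numeric:
            if math.isclose(value, truth, rel_tol=rel_tol):
                correct += 1
        elif str(value).strip().lower() == str(truth).strip().lower():
            correct += 1
    return correct / len(ground_truth)

# Hypothetical values checked by hand against a filing.
ground_truth = {
    "revenue_musd": 1250.0,
    "gross_margin_pct": 41.2,
    "operating_cash_flow_musd": 310.0,
    "guidance": "raised",
}
# Hypothetical output from an extraction step.
extracted = {
    "revenue_musd": 1250.0,
    "gross_margin_pct": 42.1,   # transposed digits: counted as wrong
    "operating_cash_flow_musd": 310.4,
    "guidance": "Raised",
}

print(factual_accuracy(extracted, ground_truth))
```

The same function works for scoring a human analyst's extraction, which keeps the comparison symmetric.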

Why AI can look accurate when it isn’t

Generative models can produce confident-sounding explanations. If you don’t enforce citations, cross-checks, and guardrails, the output can drift into:

That is why any serious evaluation should include verification steps, not just final answers.

Is AI stock analysis more accurate than human research for investors?

The honest answer is: sometimes—on specific tasks—and only under disciplined evaluation.

AI often matches or beats humans on:

Humans often outperform AI on:

The most reliable approach in real workflows is hybrid: use AI for breadth and speed, and humans for depth, validation, and decision accountability.

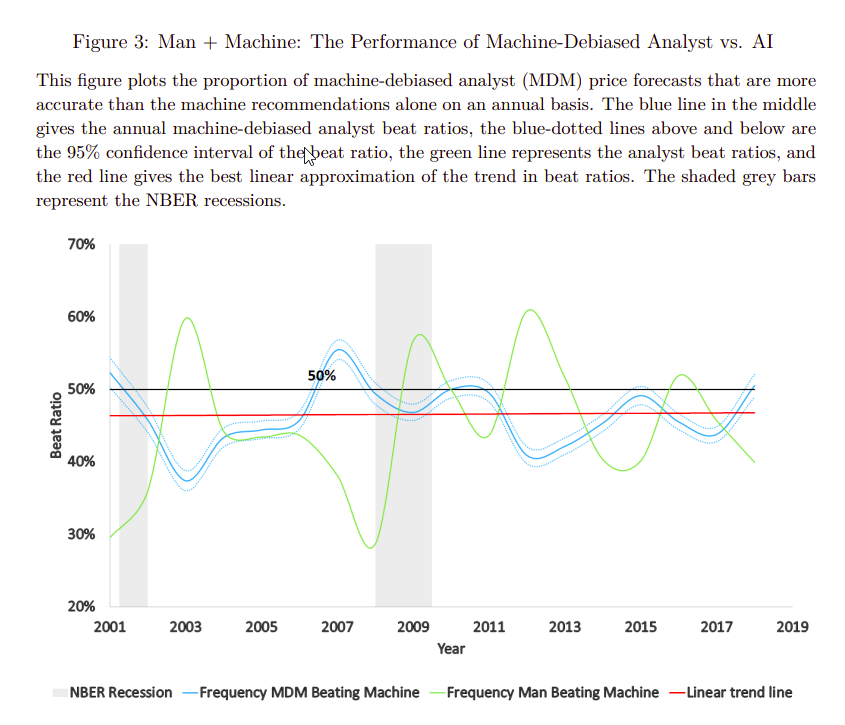

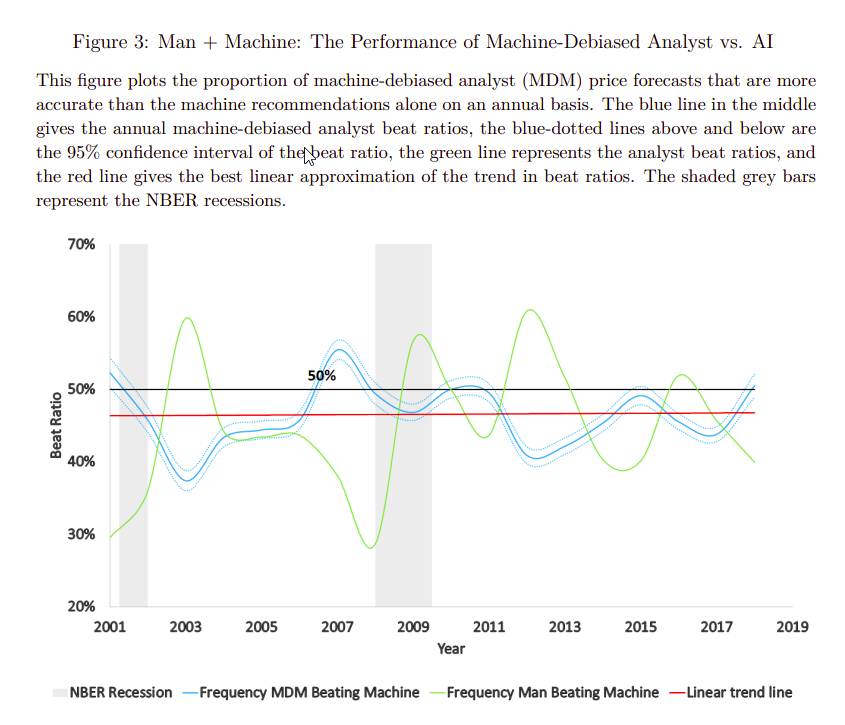

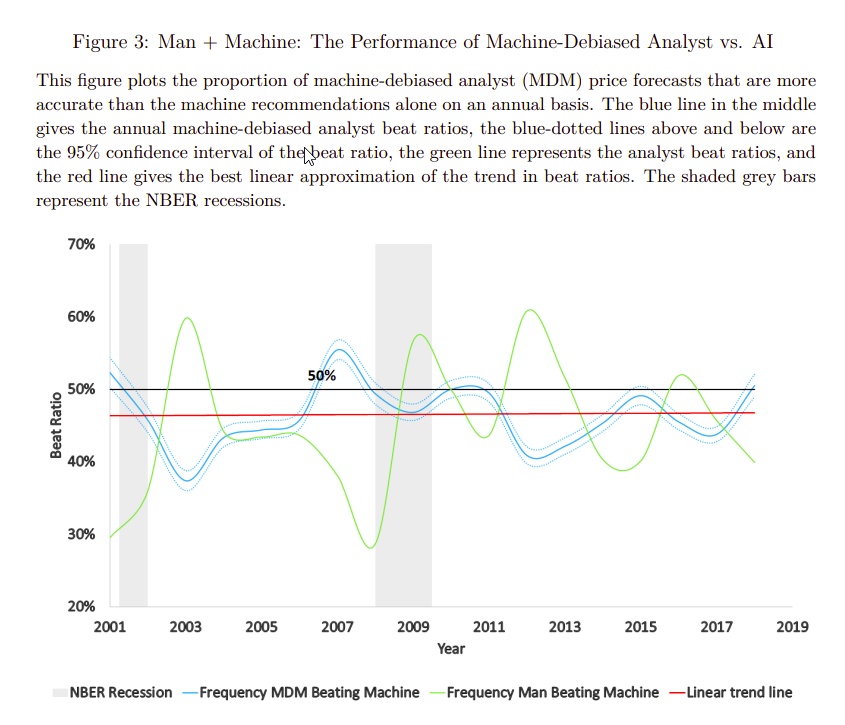

Academic research has found cases where “AI analysts” outperform many human analysts on specific forecasting tasks, but results vary by setup and dataset. (S1, S2)

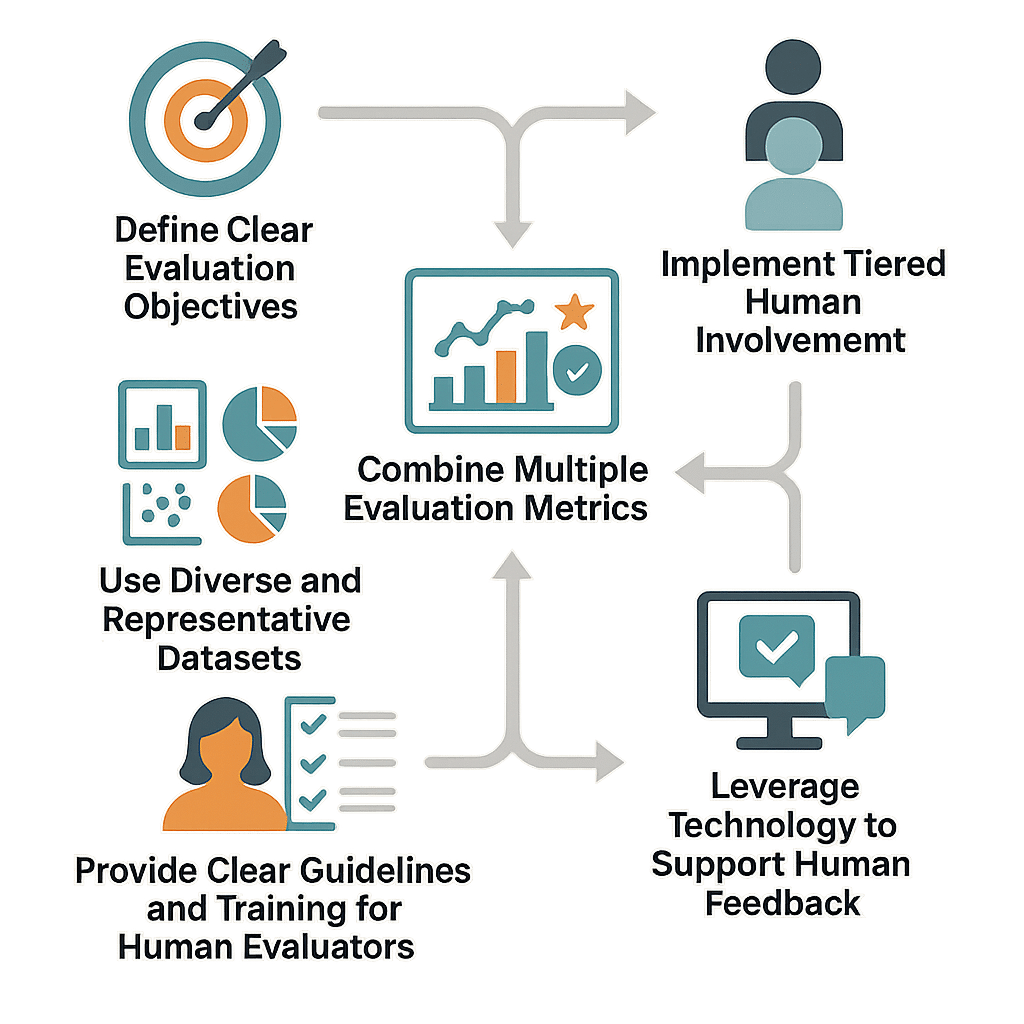

A practical research design to compare AI and humans fairly

If you want a true “research” comparison, run a controlled evaluation instead of relying on anecdotes.

Step 1: choose comparable tasks

Pick tasks that both sides can reasonably do:

1. Extract 20 key fields from a 10-Q (revenue, gross margin, cash flow, guidance, risks)

2. Summarize an earnings call into catalysts and risks (max 400 words)

3. Produce a one-page investment memo with a base/bull/bear scenario

4. Make a directional call over a fixed horizon (e.g., 1 month) with confidence

Step 2: define ground truth

Step 3: lock information access and time budgets

To be fair, both should have:

Otherwise, “human research” becomes “human + expensive terminals + weeks of calls,” while “AI research” becomes “AI + cherry-picked prompts.”

Step 4: score with multiple metrics

Use a scorecard that separates:

And add “operational” metrics:

Example comparison: 20-ticker monthly coverage (illustrative)

To make the trade-offs concrete, imagine you maintain a watchlist of 20 stocks and do a monthly refresh.

Human-only workflow (typical)

AI-first workflow (typical)

The point is not the exact numbers (they vary). The point is where time moves:

If AI saves you 30 hours, spend 10 of them on verification and 20 on better risk management—not on more trades.

How SimianX AI fits into a hybrid workflow

A strong hybrid process needs two things: parallel coverage and auditability.

SimianX AI is built around multi-agent stock analysis: different agents analyze in parallel, debate, and converge on a clearer decision. The output isn’t only a chat response—it’s also a professional PDF report you can share, archive, and review later for post-mortems and learning. (S5)

What this looks like in practice

A repeatable 7-step workflow you can use today

1. Start with breadth: run a fast AI scan across your watchlist.

2. Pick 3 focus names: prioritize by catalysts, volatility, or valuation gaps.

3. Verify the numbers: cross-check 5–10 key fields in filings and transcripts.

4. Stress-test the thesis: ask for the strongest bear case and what would falsify it.

5. Translate into rules: define entry, exit, and position sizing (not just “buy/sell”).

6. Write a one-page memo: save the thesis, assumptions, and triggers.

7. Monitor with alerts: set a cadence (weekly) and escalation rules (immediate on major events).

What “multi-agent debate” changes

Single-model tools often give you one narrative. Multi-agent debate is useful because it can surface disagreements early:

When these collide, you get something closer to a real investment committee—without waiting days for a meeting.

Decision matrix: when to trust AI, when to rely on humans

Use this as a quick operating guide:

| Situation | Prefer AI-first | Prefer human-first | Best hybrid move |
|---|---|---|---|
| Many tickers, low stakes | ✅ | ❌ | AI scan + light verification |
| One ticker, high stakes | ⚠️ | ✅ | AI draft + deep human diligence |
| Dense filings / transcripts | ✅ | ⚠️ | AI extract + human spot-check |
| Regime change / new laws | ⚠️ | ✅ | Human interpretation + AI evidence gather |
| Repetitive monitoring | ✅ | ❌ | AI alerts + human escalation rules |

Limitations and common pitfalls in AI-vs-human comparisons

To keep your study honest, watch out for these pitfalls:

Also note that independent evaluations of general-purpose AI systems on finance tasks have found substantial error rates—another reason to prioritize verification and domain-specific tooling rather than “chat and trust.” (S4)

FAQ About AI stock analysis vs human research

How do you evaluate AI stock analysis accuracy without backtesting?

Start with factual accuracy: pick 10–20 fields from filings and check them manually. Then test reasoning quality with a rubric (does it cite evidence, mention risks, avoid leaps?). Finally, track a small set of forecasts over time and measure calibration (were “high confidence” calls actually more accurate?).
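The calibration check in the last step can be done with a simple forecast log. The sketch below assumes you record each call as a pair of stated confidence (the probability that the call is right) and whether it turned out correct. The log entries are hypothetical, and the Brier score is one common way to summarize calibration, used here as a convenient choice rather than a required one.

```python
from collections import defaultdict

def calibration_table(forecasts):
    """Map each stated confidence level to the observed hit rate.

    forecasts: iterable of (confidence, was_correct) pairs.
    Confidence is rounded to the nearest 0.1 to form buckets.
    """
    buckets = defaultdict(list)
    for confidence, was_correct in forecasts:
        buckets[round(confidence, 1)].append(1 if was_correct else 0)
    return {level: sum(hits) / len(hits) for level, hits in sorted(buckets.items())}

def brier_score(forecasts):
    """Mean squared gap between stated confidence and outcome (lower is better)."""
    forecasts = list(forecasts)
    return sum((c - (1 if ok else 0)) ** 2 for c, ok in forecasts) / len(forecasts)

# Hypothetical forecast log: (stated confidence, call was correct).
log = [
    (0.9, True), (0.9, True), (0.9, False),
    (0.6, True), (0.6, False), (0.6, False),
]

table = calibration_table(log)
score = brier_score(log)
print(table)
print(round(score, 3))
```

In a well-calibrated log, the hit rate in each bucket sits close to the stated confidence. A small log like this one is too noisy to draw conclusions from, so treat early results as a habit-building exercise rather than a verdict.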

Is AI stock research worth it for beginners?

Yes—if it helps you build a consistent process and avoid information overload. The key is to treat AI as an assistant, not an oracle: verify a handful of numbers, write down assumptions, and use simple risk rules.

What is the best way to combine human and AI stock research?

Use AI for breadth (scanning, summarizing, monitoring) and humans for depth (verification, context, decision accountability). A good rule is: AI drafts, humans validate, the process decides.

Can multi-agent AI replace a professional analyst team?

For standardized tasks and broad coverage, it can reduce the need for manual work. But for nuanced judgment, novel situations, and accountability to clients or regulators, humans remain essential—especially when the cost of mistakes is high.

Conclusion

AI is changing the economics of investment research, but the winner is rarely “AI-only” or “human-only.” The best outcomes come from hybrid research systems that use AI to compress time and cost, while humans guard accuracy with verification, context, and decision discipline.

If you want to operationalize that approach, explore SimianX AI to run multi-agent analysis, capture debates, and generate a professional report you can learn from over time.

Disclaimer: This content is for educational purposes only and is not investment advice.