AI to Address Delayed and Inaccurate Crypto Price Data in Trading Risk Management

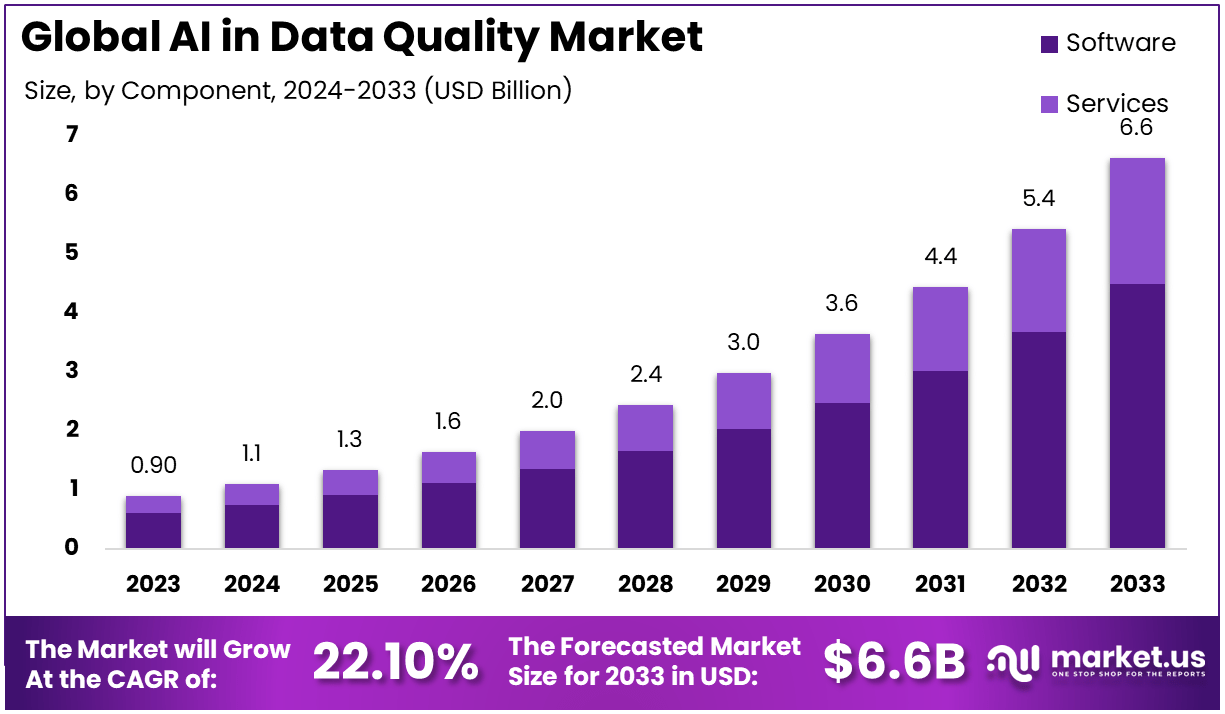

Delayed and inaccurate price data is a silent risk multiplier in crypto trading: it turns good strategies into bad fills, misprices margin, and creates false comfort in dashboards. This article explores how AI can address delayed and inaccurate crypto price data by detecting staleness, correcting outliers, and enforcing “trust-aware” risk controls that adapt when market data quality degrades. We also outline how SimianX AI can serve as an operating layer for market-data QA, monitoring, and action, so that risk decisions are based on validated prices rather than hopeful ones.

Why price delays and inaccuracies are common in crypto

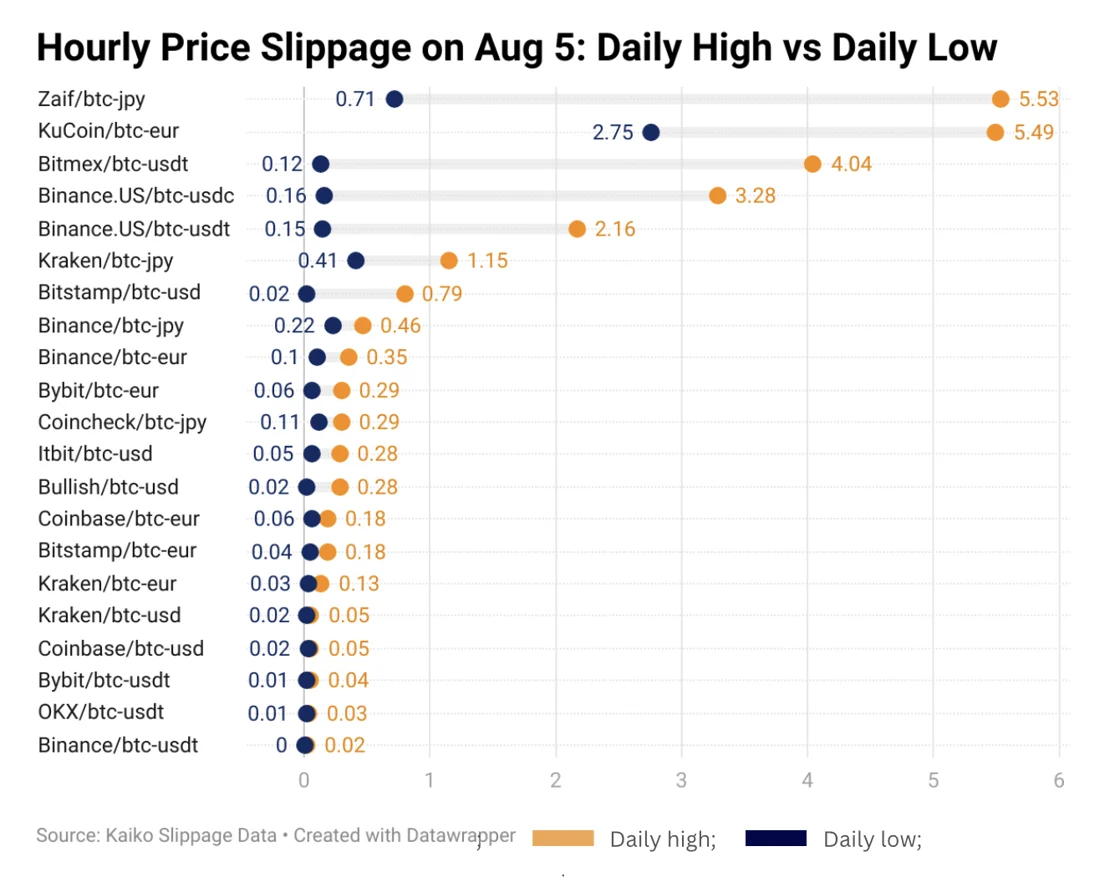

Crypto market data looks “real-time,” but it often isn’t. The ecosystem has fragmented venues, heterogeneous APIs, uneven liquidity, and inconsistent timestamping. These factors create measurable delays and distortions that traditional risk systems—built for cleaner market data—don’t always handle well.

1) Venue fragmentation and inconsistent “truth”

Crypto has no single consolidated tape: prices are spread across many independent venues.

Even when venues quote the “same” symbol, the effective price differs due to fees, spread, microstructure, and settlement constraints.

2) API latency, packet loss, and rate limits

A WebSocket feed can degrade silently—dropping messages or reconnecting with gaps. REST snapshots may arrive late or be rate-limited during volatility. The result: stale best bid/ask, lagging trades, and incomplete order-book deltas.

3) Clock drift and timestamp ambiguity

Some feeds provide event timestamps (exchange time), others provide receipt timestamps (client time), and some provide both inconsistently. If clocks are not disciplined (e.g., NTP/PTP), your “latest” price can be older than you think—especially when comparing sources.
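As a minimal sketch of turning this into a check, assume each message carries both an exchange timestamp and a local receipt timestamp in milliseconds. The function below flags exchange time moving backward, "negative latency" (a message apparently received before it was sent), and a shift in the apparent offset versus a learned baseline. The function names, flag strings, and the 250 ms tolerance are hypothetical.

```python
from statistics import median

def apparent_offset_ms(samples):
    """Median of (receipt - exchange) over (exchange_ts_ms, receipt_ts_ms) pairs.

    This mixes network latency with clock offset, so it is useful for
    spotting *changes*, not for measuring skew precisely.
    """
    return median(receipt - exch for exch, receipt in samples)

def check_clock(samples, baseline_offset_ms, tolerance_ms=250.0):
    """Return (offset, sorted list of flags) for a window of messages."""
    flags = set()
    exch_times = [exch for exch, _ in samples]
    # Exchange time should never move backward within one feed.
    if any(later < earlier for earlier, later in zip(exch_times, exch_times[1:])):
        flags.add("time_went_backward")
    offset = apparent_offset_ms(samples)
    # Received "before" it was sent: the two clocks disagree.
    if offset < 0:
        flags.add("negative_latency")
    # A large jump versus the learned baseline suggests drift or a stalled feed.
    if abs(offset - baseline_offset_ms) > tolerance_ms:
        flags.add("offset_shift")
    return offset, sorted(flags)
```

Using the median rather than the mean keeps a few slow messages from looking like a clock problem; the baseline itself would be learned per venue during calm periods.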

4) Low-liquidity distortions and microstructure noise

Thin books, sudden spread widening, and short-lived quotes can create misleading last-trade and mid prices that do not reflect executable levels.

5) Oracle update cadence and DeFi-specific issues

On-chain pricing introduces additional failure modes: oracle update intervals, delayed heartbeats, and manipulation risk in illiquid pools. Even if your trades are off-chain, risk systems often rely on blended indices influenced by on-chain signals.

In crypto, “price” is not a single number—it’s a probabilistic estimate conditioned on venue quality, timeliness, and liquidity.

How stale or wrong prices break risk management

Risk is a function of exposure × price × time. When price or time is wrong, the entire chain of controls becomes brittle.

Key risk impacts

The compounding effect during volatility

When markets move fast, data quality often worsens (rate limits, reconnects, bursty updates). That’s precisely when your risk system needs to be most conservative.

Takeaway: Data quality is a first-class risk factor. Your controls should tighten automatically when the price feed becomes less trustworthy.

A practical framework: treat market data as a scored sensor

Instead of assuming price data is correct, treat each source as a sensor producing:

1) a price estimate, and

2) a confidence score.

The four dimensions of market-data quality

1. Timeliness: how old is the last reliable update? (staleness in milliseconds/seconds)

2. Accuracy: how plausible is the price relative to other sources and market microstructure?

3. Completeness: are key fields missing (book levels, trade prints, volumes)?

4. Consistency: do deltas reconcile with snapshots, and do timestamps move forward correctly?
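One way to fold these dimensions into a single confidence value is to multiply per-dimension scores in [0, 1], so that any single bad dimension drags the total down. This is a hypothetical sketch: accuracy is split into cross-source divergence and a model anomaly probability, consistency is reduced to a boolean with an arbitrary 0.5 penalty, and all parameter names and status thresholds are illustrative assumptions.

```python
import math

def confidence_score(staleness_ms, max_staleness_ms,
                     fields_present, fields_required,
                     divergence_bp, divergence_scale_bp,
                     anomaly_prob, consistent=True):
    """Product of per-dimension scores, each clamped to [0, 1]."""
    # Timeliness: 1 when fresh, falling linearly to 0 at the staleness limit.
    timeliness = max(0.0, 1.0 - staleness_ms / max_staleness_ms)
    # Completeness: share of required fields (depth, trades, ...) present.
    completeness = min(1.0, fields_present / fields_required)
    # Accuracy, part 1: agreement with other sources decays with divergence.
    agreement = math.exp(-abs(divergence_bp) / divergence_scale_bp)
    # Accuracy, part 2: how "normal" a model judges the latest update.
    normality = 1.0 - min(max(anomaly_prob, 0.0), 1.0)
    # Consistency: hypothetical fixed penalty for sequence/snapshot mismatches.
    consistency = 1.0 if consistent else 0.5
    return timeliness * completeness * agreement * normality * consistency

def data_status(confidence, ok_at=0.8, degraded_at=0.4):
    """Map a confidence value to OK / DEGRADED / FAIL (thresholds assumed)."""
    if confidence >= ok_at:
        return "OK"
    if confidence >= degraded_at:
        return "DEGRADED"
    return "FAIL"
```

A raw product like this is not calibrated; it only becomes a trustworthy input once its values are checked against realized pricing errors.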

The output risk systems should consume

- price_estimate (e.g., robust mid, index, or mark)

- confidence (0–1)

- data_status (OK / DEGRADED / FAIL)

- reason_codes (stale_feed, outlier_print, missing_depth, clock_skew, etc.)

This turns “data problems” into machine-actionable signals.
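A minimal sketch of that output as a typed record, assuming Python 3.9+. The class names and the allows_new_risk helper are hypothetical; the rule that a non-OK reading must carry at least one reason code is one possible design choice that keeps every degradation explainable in audits.

```python
from dataclasses import dataclass
from enum import Enum

class DataStatus(Enum):
    OK = "OK"
    DEGRADED = "DEGRADED"
    FAIL = "FAIL"

@dataclass(frozen=True)
class PriceReading:
    """One scored 'sensor' output that downstream risk code consumes."""
    symbol: str
    price_estimate: float               # e.g. robust mid, index, or mark
    confidence: float                   # 0-1
    data_status: DataStatus
    reason_codes: tuple[str, ...] = ()  # e.g. ("stale_feed", "clock_skew")

    def __post_init__(self):
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError("confidence must be within [0, 1]")
        # Every non-OK reading must say why, so actions stay auditable.
        if self.data_status is not DataStatus.OK and not self.reason_codes:
            raise ValueError("non-OK readings need at least one reason code")

    def allows_new_risk(self):
        """Only fully trusted prices may be used to open new exposure."""
        return self.data_status is DataStatus.OK
```

For example, PriceReading("BTC-USD", 64010.0, 0.45, DataStatus.DEGRADED, ("stale_feed",)) is valid but reports allows_new_risk() as False (symbol and prices are made up).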

AI methods to detect delays and inaccuracies

AI doesn’t replace engineering fundamentals (redundant feeds, time sync). It adds a layer of adaptive detection that learns patterns, identifies anomalies, and generates confidence scores.

1) Staleness detection beyond simple timers

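A naive rule like “if no update in 2 seconds, mark stale” is insufficient, because normal update rates differ widely by symbol, venue, and market regime. AI can instead model the expected update behavior of each feed.

Approach: learn the typical update interval per symbol and venue, and flag a feed when the current gap falls far outside that learned range.

Useful signals: message counts, sequence gaps, reconnects, and the difference between exchange and receipt timestamps.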
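A minimal sketch of learning per-feed update behavior instead of using one fixed timer. It keeps an exponentially weighted mean of inter-update gaps and of their absolute deviation, and flags the feed when the current gap exceeds mean + k × deviation, clamped between a floor and a hard cap. The class and its parameters (alpha, k, floor_ms, hard_cap_ms) are hypothetical; a production model would also condition on regime and time of day.

```python
class AdaptiveStalenessDetector:
    """Per-feed staleness threshold learned from observed update gaps."""

    def __init__(self, alpha=0.05, k=6.0, floor_ms=200.0, hard_cap_ms=10_000.0):
        self.alpha = alpha              # EWMA smoothing factor
        self.k = k                      # tolerance in units of typical deviation
        self.floor_ms = floor_ms        # never flag faster than this
        self.hard_cap_ms = hard_cap_ms  # always flag slower than this
        self.mean_gap = None
        self.mean_absdev = 0.0
        self.last_event_ms = None

    def on_update(self, event_ms):
        """Record an update (event time) and refresh the gap statistics."""
        if self.last_event_ms is not None:
            gap = event_ms - self.last_event_ms
            if self.mean_gap is None:
                self.mean_gap = gap
            else:
                dev = abs(gap - self.mean_gap)
                self.mean_gap += self.alpha * (gap - self.mean_gap)
                self.mean_absdev += self.alpha * (dev - self.mean_absdev)
        self.last_event_ms = event_ms

    def threshold_ms(self):
        if self.mean_gap is None:
            return self.hard_cap_ms
        t = self.mean_gap + self.k * self.mean_absdev
        return min(max(t, self.floor_ms), self.hard_cap_ms)

    def is_stale(self, now_ms):
        if self.last_event_ms is None:
            return True
        return now_ms - self.last_event_ms > self.threshold_ms()
```

With a feed that updates every 100 ms, a 250 ms silence is flagged; with a feed that normally updates every 3 s, a 2.5 s gap is not. A fixed 2-second timer would get both cases wrong.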

2) Outlier and manipulation detection (prints and quotes)

Outliers can be legitimate (gap moves) or erroneous (bad tick, partial book). AI can distinguish with context.

Approaches score each print or quote against its context, using features such as mid, spread, top size, trade count, volatility, and order book imbalance.
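The simplest robust-statistics version of this idea scores each feature of the newest tick against a recent window with a median/MAD z-score, which a single bad print cannot inflate the way it inflates a standard deviation. This is a sketch, not a multivariate model: feature names such as mid_return_bp and the threshold of 6 are assumptions, and a flagged value is only a candidate that cross-venue context must confirm or reject (a real gap move shows up on other venues too).

```python
from statistics import median

def robust_z(value, history):
    """Distance from the window median in units of scaled MAD."""
    med = median(history)
    mad = median(abs(x - med) for x in history)
    if mad == 0:
        # Perfectly flat history: any change at all is maximally unusual.
        return 0.0 if value == med else float("inf")
    # 1.4826 * MAD estimates the standard deviation for normal data.
    return (value - med) / (1.4826 * mad)

def score_observation(obs, window, z_threshold=6.0):
    """obs: feature -> newest value; window: feature -> recent values.

    Returns (z-scores per feature, sorted list of flagged features).
    """
    zs = {name: robust_z(obs[name], window[name]) for name in obs}
    flagged = sorted(name for name, z in zs.items() if abs(z) > z_threshold)
    return zs, flagged
```

A 250 bp mid jump with an ordinary spread, for example, is flagged on mid_return_bp alone, which is the pattern of an off-market print rather than a thinning book.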

3) Cross-venue reconciliation as probabilistic consensus

Instead of choosing one “primary” exchange, use an ensemble of venues and estimate the price as a consensus weighted by each venue's current reliability.

This is especially effective when a single venue goes “off-market” briefly.
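A minimal sketch of such a consensus, assuming per-venue mids and reliability weights are already available: a weighted median ignores a single off-market venue entirely (unlike a weighted mean), and any venue beyond a divergence band is reported so it can be down-weighted. The function names and the 50 bp band are hypothetical.

```python
def weighted_median(pairs):
    """Lower weighted median of (value, weight) pairs; zero weights ignored."""
    pts = sorted((v, w) for v, w in pairs if w > 0)
    total = sum(w for _, w in pts)
    if total <= 0:
        raise ValueError("no venue has positive weight")
    running = 0.0
    for value, weight in pts:
        running += weight
        if running >= total / 2:
            return value
    return pts[-1][0]  # guard against float rounding; normally unreachable

def consensus_price(quotes, reliability, max_dev_bp=50.0):
    """quotes: venue -> mid; reliability: venue -> weight (missing means 0).

    Returns (consensus mid, sorted venues deviating more than max_dev_bp).
    """
    center = weighted_median((quotes[v], reliability.get(v, 0.0)) for v in quotes)
    off_market = sorted(
        v for v, p in quotes.items() if abs(p - center) / center * 1e4 > max_dev_bp
    )
    return center, off_market
```

With four equally weighted venues quoting 100.00, 100.02, 99.99, and 103.00, the consensus stays at 100.00 and only the 103.00 venue is reported as off-market.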

4) Nowcasting to compensate for known delays

If you know a source lags by ~300ms, you can “nowcast” a better estimate from more timely, correlated sources.

Nowcasting must be conservative: it should increase uncertainty rather than create false precision.
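A hypothetical sketch of a conservative nowcast: shift the lagged price by the move a faster, correlated source made over the lag window, then *widen* the band by a base amount, by diffusion over the lag (volatility × √lag), and by the size of the correction itself. The parameter names, the square-root-of-time diffusion assumption, and the 5 bp base band are all illustrative.

```python
import math

def nowcast(lagged_price, lag_ms, fast_return, vol_bp_per_sqrt_s, base_band_bp=5.0):
    """Conservative nowcast of a source known to lag by lag_ms.

    fast_return: fractional move of a faster, correlated source over the lag.
    vol_bp_per_sqrt_s: short-horizon volatility in bp per sqrt(second).
    Returns (estimate, (low, high)).
    """
    estimate = lagged_price * (1.0 + fast_return)
    # Uncertainty that exists over the lag even with no correction applied.
    diffusion_bp = vol_bp_per_sqrt_s * math.sqrt(lag_ms / 1000.0)
    # The correction itself is uncertain: a bigger shift widens the band more.
    correction_bp = abs(fast_return) * 1e4
    half_width = estimate * (base_band_bp + diffusion_bp + correction_bp) / 1e4
    return estimate, (estimate - half_width, estimate + half_width)
```

By construction, applying a correction never narrows the band relative to the uncorrected case, which is the "increase uncertainty, not false precision" property described above.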

5) Confidence scoring and calibration

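A confidence score is only useful if it correlates with actual error, so it must be calibrated: compare past scores with the pricing errors that were actually realized, and check that lower confidence really came with larger errors.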

The goal is not perfect prediction. The goal is risk-aware behavior when your data is imperfect.
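A minimal reliability-table check, assuming you log (confidence, realized absolute error in bp) pairs, where the error is measured after the fact against a trusted reference (for example, a later consensus price; that choice is an assumption). The scores are bucketed, and average error should not increase as confidence rises. Function names and the bin count are hypothetical.

```python
from collections import defaultdict
from statistics import mean

def calibration_table(records, n_bins=5):
    """records: (confidence, realized_abs_error_bp) pairs.

    Returns rows (bin_low, bin_high, mean_error_bp, count) for non-empty bins.
    """
    bins = defaultdict(list)
    for conf, err in records:
        b = min(int(conf * n_bins), n_bins - 1)  # confidence 1.0 -> top bin
        bins[b].append(err)
    return [(b / n_bins, (b + 1) / n_bins, mean(errs), len(errs))
            for b, errs in sorted(bins.items())]

def is_monotone(table):
    """True if mean error never increases as confidence increases."""
    errs = [row[2] for row in table]
    return all(later <= earlier for earlier, later in zip(errs, errs[1:]))
```

If is_monotone fails, or high-confidence buckets still show large errors, the score should be re-weighted or recalibrated before it drives any gating.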

System architecture: from raw feeds to risk-grade prices

A robust design separates ingestion, validation, estimation, and action.

Reference pipeline (conceptual)

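1. Ingestion: multi-venue feeds (WebSocket + REST snapshots)

2. Validation: deterministic rules and AI detectors produce data_status and confidence

3. Estimation: a consensus estimator produces mark_price and band

4. Action: risk and execution controls consume mark_price + confidence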

Why “event-time vs processing-time” matters

If your pipeline uses processing-time, a network delay looks like the market slowed down. Event-time processing preserves the real sequence and allows accurate staleness scoring.
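A small illustration of the difference, assuming millisecond timestamps: a message produced at exchange time 1000 that arrives at 1800 looks 10 ms old at 1810 under processing time, but is really 810 ms old. The function names are hypothetical, and clock_offset_ms is assumed to come from time-sync monitoring.

```python
def processing_time_age_ms(now_ms, receipt_ts_ms):
    # Age from when *we* received the message: network delay is invisible.
    return now_ms - receipt_ts_ms

def event_time_age_ms(now_ms, exchange_ts_ms, clock_offset_ms=0.0):
    # Age from when the *exchange* produced the event. clock_offset_ms is the
    # estimated local-minus-exchange offset (assumed known); subtracting it
    # puts both timestamps on the same clock.
    return (now_ms - clock_offset_ms) - exchange_ts_ms

def latest_by_event_time(messages):
    # With out-of-order arrivals, the newest *event* wins, not the newest arrival.
    return max(messages, key=lambda m: m["exchange_ts_ms"])
```

For example, processing_time_age_ms(1810, 1800) gives 10 while event_time_age_ms(1810, 1000) gives 810.0.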

Minimum viable redundancy checklist

Step-by-step: implementing AI-driven data quality controls

This is a practical roadmap you can apply in production.

1. Define data SLAs by asset class

- max_staleness_ms per symbol/venue

- acceptable divergence bands vs. consensus

- minimum fields required (best bid/ask, depth, trades)

2. Instrument the feed

- log message counts, sequence gaps, reconnects

- store both exchange timestamps and receipt timestamps

- compute rolling health metrics

3. Build baseline rules

- hard staleness cutoff

- invalid values (negative prices, zero spread in impossible contexts)

- sequence-gap detection for books

4. Train anomaly detectors

- start simple: robust stats + Isolation Forest

- add multivariate models as data grows

- segment by symbol liquidity and venue behavior

5. Create a confidence score

- combine: timeliness + completeness + divergence + model anomaly probability

- ensure calibration: confidence correlates with actual error

6. Deploy “gating” in risk + execution

- if confidence falls: widen slippage, reduce size, switch reference price, or halt

- keep a human-readable reason code for audits

7. Monitor and iterate

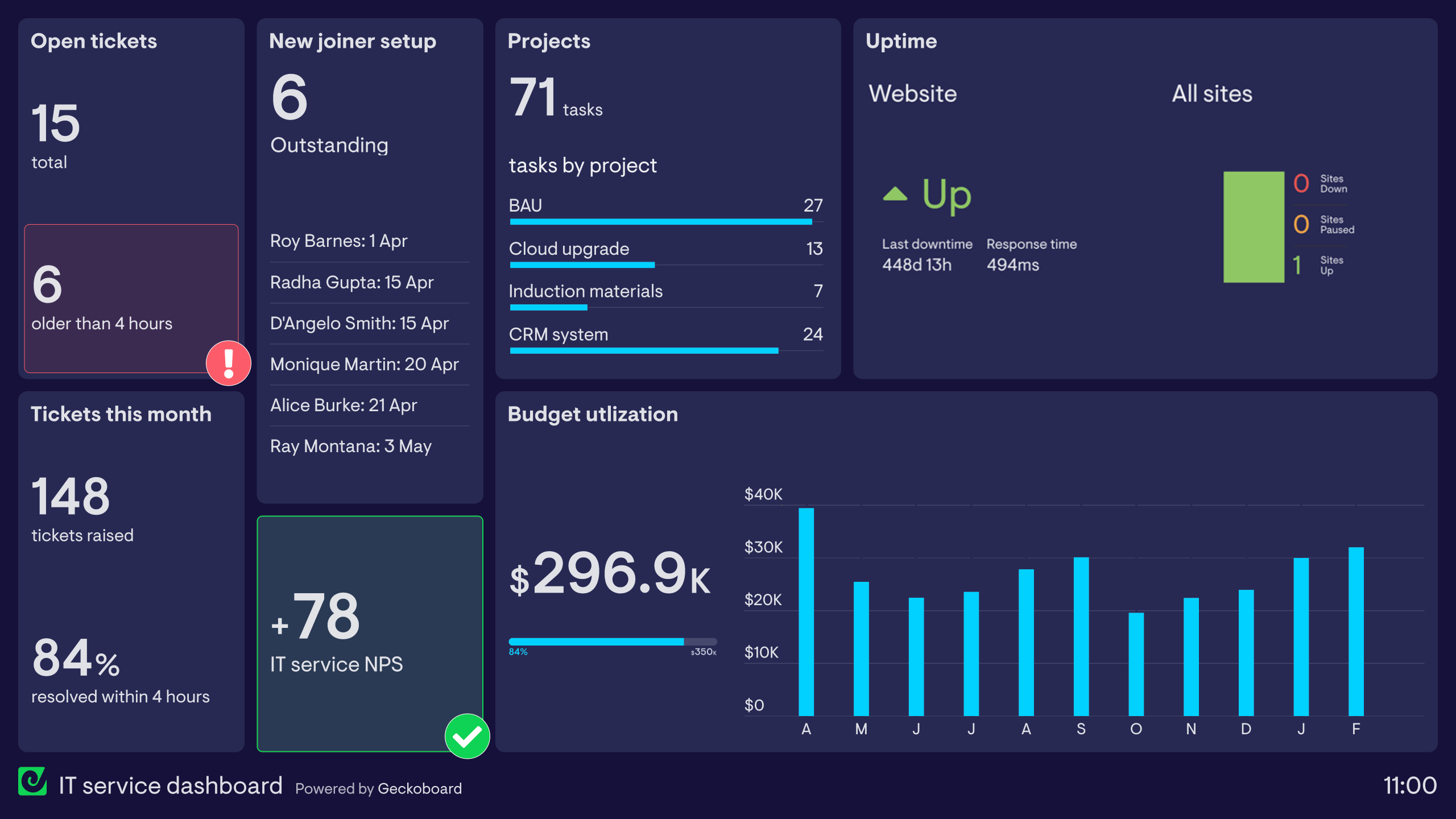

- dashboards: confidence over time, venue reliability, regime shifts

- post-incident reviews: was the system conservative enough?
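The baseline rules in step 3 can be sketched as plain deterministic checks that run before any model. This minimal version assumes a top-of-book tick with a per-feed sequence number that increments by one per message; real exchanges number book updates in different ways. The BookTick type and every reason-code string except stale_feed are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class BookTick:
    seq: int              # assumed to increase by exactly 1 per message
    exchange_ts_ms: int
    best_bid: float
    best_ask: float

def baseline_checks(prev, tick, now_ms, max_staleness_ms):
    """Return reason codes for hard-rule violations (empty list = pass)."""
    reasons = []
    # Invalid values: non-positive prices can never be right.
    if tick.best_bid <= 0 or tick.best_ask <= 0:
        reasons.append("invalid_price")
    # Zero or negative spread in one venue's own book usually means a missed delta.
    elif tick.best_bid >= tick.best_ask:
        reasons.append("crossed_or_locked_book")
    # Hard staleness cutoff, measured in event time.
    if now_ms - tick.exchange_ts_ms > max_staleness_ms:
        reasons.append("stale_feed")
    # Sequence-gap detection: a skipped number means a lost update.
    if prev is not None and tick.seq != prev.seq + 1:
        reasons.append("sequence_gap")
    return reasons
```

Because these rules are simple and auditable, they can hard-fail a feed immediately, while anomaly models handle the subtler cases.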

What to do when data is degraded: fail-safes that actually work

AI detection is only half the story. The other half is how your system responds.

Recommended control actions by severity

- lower max leverage

- reduce order size

- widen limit bands

- require extra confirmations (2-of-3 sources)

- kill switch for strategies

- move to “safe mode” (only reduce exposure, no new risk)

- freeze marks and trigger manual review if needed

A simple decision table

| Condition | Example signal | Recommended action |
|---|---|---|
| Mild staleness | staleness < 2s but rising | widen slippage, reduce size |
| Divergence | venue price deviates > X bp | down-weight venue, use consensus |
| Book gaps | missing deltas / sequence breaks | force snapshot, mark degraded |
| Clock skew | exchange time jumps backward | quarantine feed, alert |
| Full outage | no reliable source | halt new risk, unwind cautiously |
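The table can be encoded as a small playbook that returns every applicable action, most severe first. The condition keys and the numeric severity levels are assumptions (the table itself does not rank its rows). One fail-safe choice shown here: an unrecognized condition escalates to "mark degraded, alert" instead of being ignored.

```python
# Hypothetical severity levels: 1 = mild, 2 = degraded, 3 = outage.
PLAYBOOK = {
    "mild_staleness": (1, "widen slippage, reduce size"),
    "divergence": (1, "down-weight venue, use consensus"),
    "book_gap": (2, "force snapshot, mark degraded"),
    "clock_skew": (2, "quarantine feed, alert"),
    "full_outage": (3, "halt new risk, unwind cautiously"),
}
# Fail safe: conditions the playbook does not know are never silently ignored.
UNKNOWN = (2, "mark degraded, alert")

def respond(conditions):
    """Return (highest severity, actions ordered from most to least severe)."""
    hits = sorted({PLAYBOOK.get(c, UNKNOWN) for c in conditions}, reverse=True)
    level = hits[0][0] if hits else 0
    return level, [action for _, action in hits]
```

For example, respond({"divergence", "book_gap"}) returns severity 2 with the snapshot action listed before the down-weighting action.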

Principle: When data quality drops, your system should become more conservative automatically.

Execution risk management: tie price confidence to trading behavior

Delayed or wrong prices hit execution first. Risk teams often focus on portfolio metrics, but micro-level controls prevent blowups.

Practical controls linked to confidence

Scale execution parameters with confidence (lower confidence → more caution and lower participation).

A “trust-aware” order placement rule

Such a rule sizes and prices orders according to price confidence, which avoids the common failure mode: “the model thought price was X, so it traded aggressively.”
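One hypothetical way to write such a rule: order size scales down with confidence, the required edge from the reference price widens as confidence falls (one reading of “widen limit bands”), crossing the spread is only allowed at high confidence, and below a floor no new orders are placed at all. The function name, the thresholds, and the post_only field are illustrative assumptions, not a venue API.

```python
def trust_aware_order(side, ref_price, confidence, base_qty, base_edge_bp,
                      min_confidence=0.4, aggressive_min_confidence=0.8):
    """Return order parameters scaled by price confidence, or None to stand down."""
    if side not in ("buy", "sell"):
        raise ValueError("side must be 'buy' or 'sell'")
    if confidence < min_confidence:
        return None  # safe mode: no new risk on an untrusted price
    qty = base_qty * confidence               # smaller size when less sure
    edge_bp = base_edge_bp / confidence       # demand more edge when less sure
    offset = ref_price * edge_bp / 1e4
    limit = ref_price - offset if side == "buy" else ref_price + offset
    # Only a well-trusted reference price may justify crossing the spread.
    return {"qty": qty, "limit": limit,
            "post_only": confidence < aggressive_min_confidence}
```

At confidence 0.5, for instance, a 2-unit, 10 bp order becomes a 1-unit passive order 20 bp away from the reference price.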

DeFi and oracle considerations (even for CEX traders)

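Many desks consume blended indices that incorporate on-chain signals or rely on oracle-linked marks for risk. AI can help here too, for example by tracking each oracle's update cadence and flagging oracle prices that lag or diverge materially from venue consensus.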

If you trade perps, funding and basis can cause persistent differences between perpetual and spot prices. AI should learn expected basis behavior so that it does not treat normal basis as an anomaly.

Where SimianX AI fits in the workflow

SimianX AI can be positioned as an analysis and control layer that helps teams validate prices, score confidence, monitor anomalies, and operationalize response playbooks.


A realistic case study (hypothetical)

Scenario: A fast-moving altcoin spikes on Exchange A. Exchange B’s feed silently degrades: WebSocket stays connected but stops delivering depth updates. Your strategy trades on Exchange B using a stale mid price.

Without AI controls

With AI + confidence gating

- reduces size, widens limits, requires 2-of-3 confirmation

In production, “failing safely” matters more than being right all the time.

FAQ About AI to address delayed and inaccurate crypto price data

What causes inaccurate crypto price feeds during high volatility?

High volatility amplifies rate limits, reconnects, message bursts, and thin-book effects. A single off-market print can distort last-trade marks, while missing book deltas can freeze your mid price.

How to detect stale crypto prices without false alarms?

Use a hybrid approach: simple timers plus models that learn expected update rates per symbol and venue. Combine staleness with divergence and completeness signals to avoid triggering on naturally slower markets.

Best way to reduce crypto oracle latency risk in a trading stack?

Don’t rely on a single oracle or a single venue. Build a consensus estimator across sources, track oracle update behavior, and enforce conservative modes when the oracle lags or diverges materially.

Should I down-weight a venue permanently if it produces outliers?

Not necessarily. Venue quality is regime-dependent. Use adaptive reliability scoring so a venue can recover trust after a period of stability, while still being penalized during repeated failures.
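A minimal sketch of adaptive reliability scoring, assuming one clean-or-failed verdict per monitoring interval. Failures cut the score multiplicatively (fast), clean intervals close a small fraction of the gap to 1.0 (slow), and a floor keeps the venue in the consensus at low weight so it can earn trust back. The class name and all rates are hypothetical; the resulting score could serve as a per-venue weight in a consensus estimator.

```python
class VenueReliability:
    """Asymmetric trust score in [floor, 1]: quick to fall, slow to recover."""

    def __init__(self, recover_rate=0.02, penalty=0.3, floor=0.05):
        self.recover_rate = recover_rate  # share of the gap to 1.0 closed per clean interval
        self.penalty = penalty            # fractional cut per failed interval
        self.floor = floor                # minimum weight, so recovery stays possible
        self.score = 1.0

    def update(self, clean):
        if clean:
            self.score += self.recover_rate * (1.0 - self.score)
        else:
            self.score = max(self.floor, self.score * (1.0 - self.penalty))
        return self.score
```

Three failed intervals take a venue from 1.0 to about 0.34; it then needs dozens of clean intervals to approach full weight, so repeated failures keep it penalized while a recovered venue is not excluded forever.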

Can AI fully replace deterministic validation rules?

No. Deterministic checks catch obvious invalid states and provide clear auditability. AI is best used to detect subtle degradation, learn patterns, and produce calibrated confidence scores on top of rules.

Conclusion

Using AI to address delayed and inaccurate crypto price data turns market data from an assumed truth into a measured, scored input that your risk system can reason about. The winning pattern is consistent: multi-source ingestion + rigorous time handling + AI detection + confidence-driven controls. When your data becomes uncertain, your trading and risk posture should automatically become more conservative—reducing position sizes, widening bands, or halting new risk until the feed recovers.

If you want a practical, end-to-end workflow to validate prices, score confidence, monitor anomalies, and operationalize response playbooks, explore SimianX AI and build a risk stack that stays resilient even when the data doesn’t.