Artificial Intelligence vs Artificial Cryptography: A Comparison of Time and Accuracy

If you search for “artificial intelligence vs artificial cryptography time and accuracy comparison”, you’ll quickly notice something: people use the same words—time and accuracy—to mean very different things. In AI, “accuracy” often means a percentage score on a dataset. In cryptography, “accuracy” is closer to correctness (does encryption/decryption always work?) and security (can an adversary break it under realistic assumptions?). Mixing these definitions leads to bad conclusions and, worse, bad systems.

This research-style guide gives you a practical way to compare Artificial Intelligence (AI) and Artificial Cryptography (we’ll define it as human-designed cryptographic constructions and cryptography-inspired benchmark tasks) using a shared language: measurable time costs, measurable error, and measurable risk. We’ll also show how a structured research workflow—like the kind you can document and operationalize in tools such as SimianX AI—helps you avoid “fast but wrong” outcomes.

First: What do we mean by “Artificial Cryptography”?

The phrase “Artificial Cryptography” isn’t a standard textbook category, so for this article we define it as human-designed cryptographic constructions and cryptography-inspired benchmark tasks.

This matters because the “winner” depends on what you’re comparing.

The core mistake is comparing AI’s average-case accuracy to cryptography’s worst-case security goals. They are not the same objective.

Time and accuracy are not single numbers

To make the comparison fair, treat “time” and “accuracy” as families of metrics, not one score.

Time: which clock are you using?

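Four “time” metrics frequently get mixed up: T_build (time to design and build the system), T_train (training time), T_infer (inference latency), and T_audit (time to review results and explain failures).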

Accuracy: what kind of correctness do you need?

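In AI, accuracy often means “how often predictions match labels.” In cryptography, the questions are framed differently: correctness asks whether encryption and decryption always work, and security asks whether an adversary can break the scheme under realistic assumptions.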

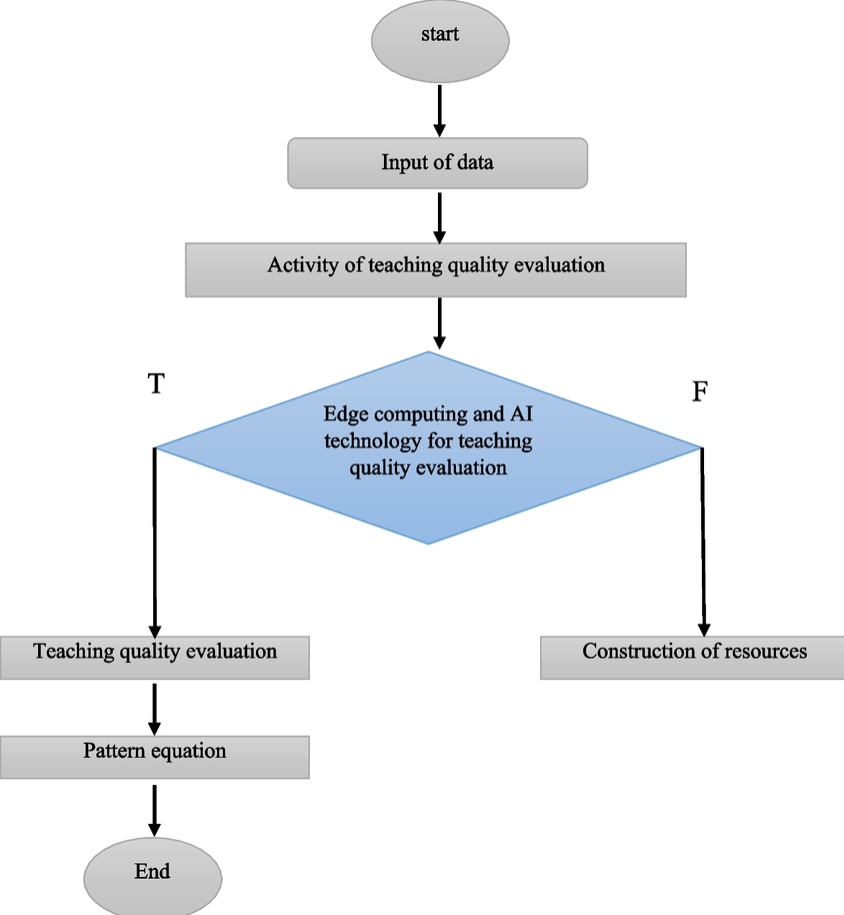

A shared comparison table

| Dimension | AI systems (typical) | Cryptography systems (typical) | What to measure in your study |
|---|---|---|---|
| Goal | Optimize performance on data | Resist adversaries, guarantee properties | Define the threat model and task |
| “Accuracy” | accuracy, F1, calibration | correctness + security margin | error rate + attack success rate |
| Time focus | T_train + T_infer | T_build + T_audit | end-to-end time-to-decision |
| Failure mode | confident wrong answer | catastrophic break under attack | worst-case impact + likelihood |
| Explainability | optional but valuable | often required (proofs/specs) | audit trail + reproducibility |


Where AI tends to win on time

AI tends to dominate T_infer for analysis tasks and T_build for workflow automation, not because it guarantees truth, but because it compresses labor.

In security work, AI’s biggest time advantage is often coverage: it can “read” or scan far more than a human team in the same wall-clock time, then produce candidate leads.

But speed is not safety. If you accept outputs without verification, you’re exchanging time for risk.

Practical rule

If the cost of being wrong is high, your workflow must include T_audit by design—not as an afterthought.
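To see why the rule depends on stakes, here is a minimal sketch of a per-decision cost model. Everything in it is an assumption for illustration: the function name, the cost units, the numbers, and especially the idealization that an audit catches every error.

```python
def expected_cost_per_item(t_infer_s, t_audit_s, error_rate,
                           cost_per_error, cost_per_second, audit):
    """Expected cost of one decision, in arbitrary cost units.

    Idealization (assumption): an audit catches every error.
    """
    time_s = t_infer_s + (t_audit_s if audit else 0.0)
    residual_error_rate = 0.0 if audit else error_rate
    return time_s * cost_per_second + residual_error_rate * cost_per_error


# Hypothetical numbers: 50 ms inference, 30 s audit, 2% model error rate.
common = dict(t_infer_s=0.05, t_audit_s=30.0, error_rate=0.02, cost_per_second=0.01)

high_stakes_no_audit = expected_cost_per_item(cost_per_error=10_000, audit=False, **common)
high_stakes_audited = expected_cost_per_item(cost_per_error=10_000, audit=True, **common)
low_stakes_no_audit = expected_cost_per_item(cost_per_error=1, audit=False, **common)
low_stakes_audited = expected_cost_per_item(cost_per_error=1, audit=True, **common)

print(high_stakes_audited < high_stakes_no_audit)  # auditing pays for itself
print(low_stakes_no_audit < low_stakes_audited)    # auditing costs more than it saves
```

With these made-up inputs, the audit step is worth its time only when errors are expensive, which is the point of building T_audit into high-stakes workflows from the start.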

Where cryptography tends to win on accuracy (and why that’s a different word)

Cryptography is engineered so that:

That framing changes what “accuracy” means. You don’t ask:

You ask:

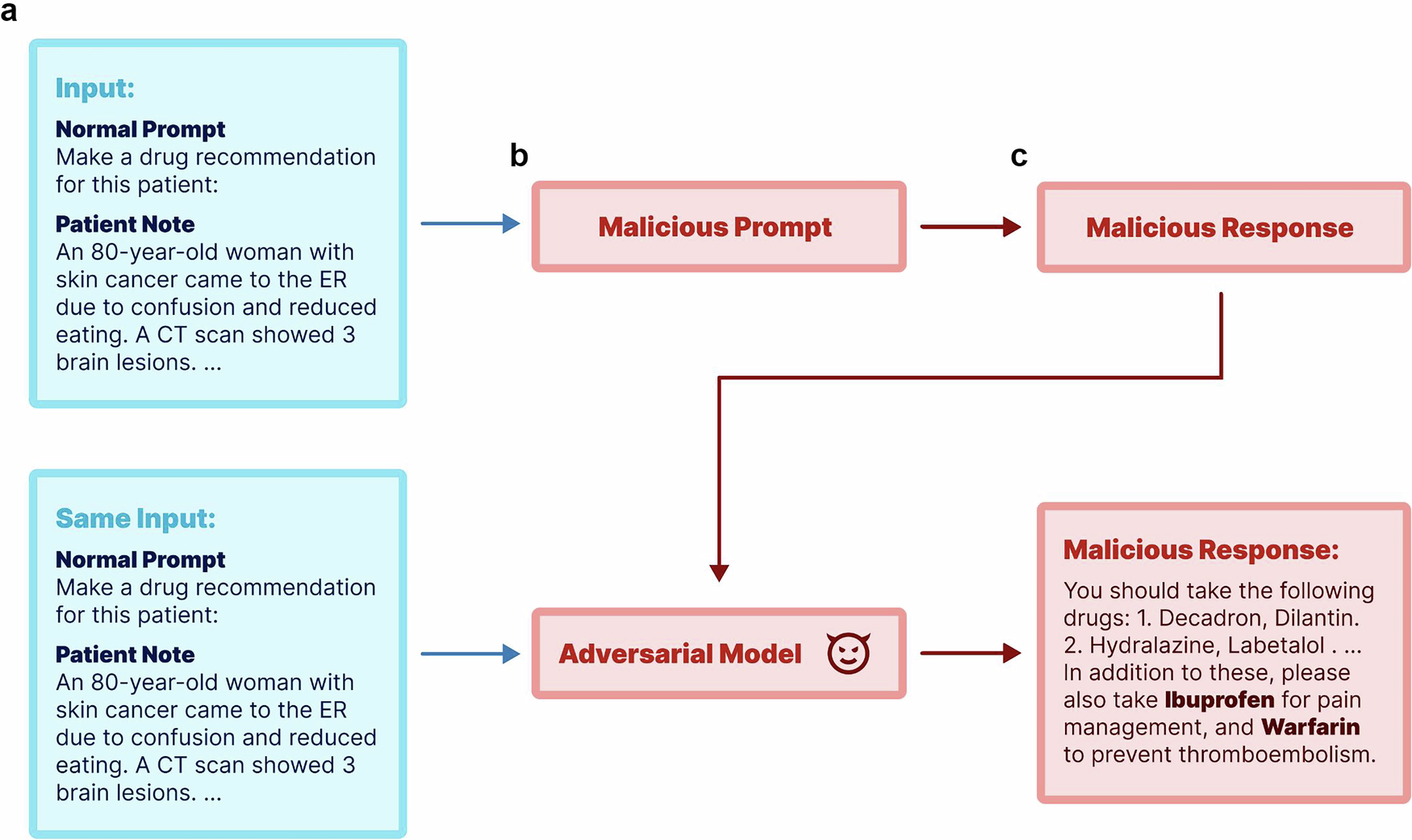

Those are different questions. In many real-world contexts, AI can achieve high predictive accuracy while still being unsafe under adversarial pressure (prompt injection, data poisoning, distribution shift, membership inference, and more).

So cryptography’s “accuracy” is closer to “reliability under attack.”
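As a small illustration of what “reliability under attack” looks like in a test, the sketch below uses HMAC-SHA256 from Python’s standard library. The helper names (`make_tag`, `verify`, `flip_first_bit`) are ours, and the single bit-flip is only one trivial manipulation; passing it demonstrates the property being asked for, not a security proof.

```python
import hashlib
import hmac
import secrets


def make_tag(key: bytes, message: bytes) -> bytes:
    """Authentication tag for a message under a secret key (HMAC-SHA256)."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(key: bytes, message: bytes, candidate_tag: bytes) -> bool:
    """Constant-time comparison of the expected tag and the candidate tag."""
    return hmac.compare_digest(make_tag(key, message), candidate_tag)


def flip_first_bit(data: bytes) -> bytes:
    """Return a copy of data with the lowest bit of the first byte flipped."""
    return bytes([data[0] ^ 0x01]) + data[1:]


key = secrets.token_bytes(32)
messages = [secrets.token_bytes(n) for n in range(1, 65)]

# Correctness: every honestly generated tag must verify.
all_valid = all(verify(key, m, make_tag(key, m)) for m in messages)

# Tamper check: a tag for m should not verify for a modified copy of m.
tampered_accepted = sum(
    verify(key, flip_first_bit(m), make_tag(key, m)) for m in messages
)

print(all_valid, tampered_accepted)
```

Notice that the check is not “what percentage passed”: a single honest message that fails verification, or a single tampered message that is accepted, is treated as a defect.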

How do you run an artificial intelligence vs artificial cryptography time and accuracy comparison?

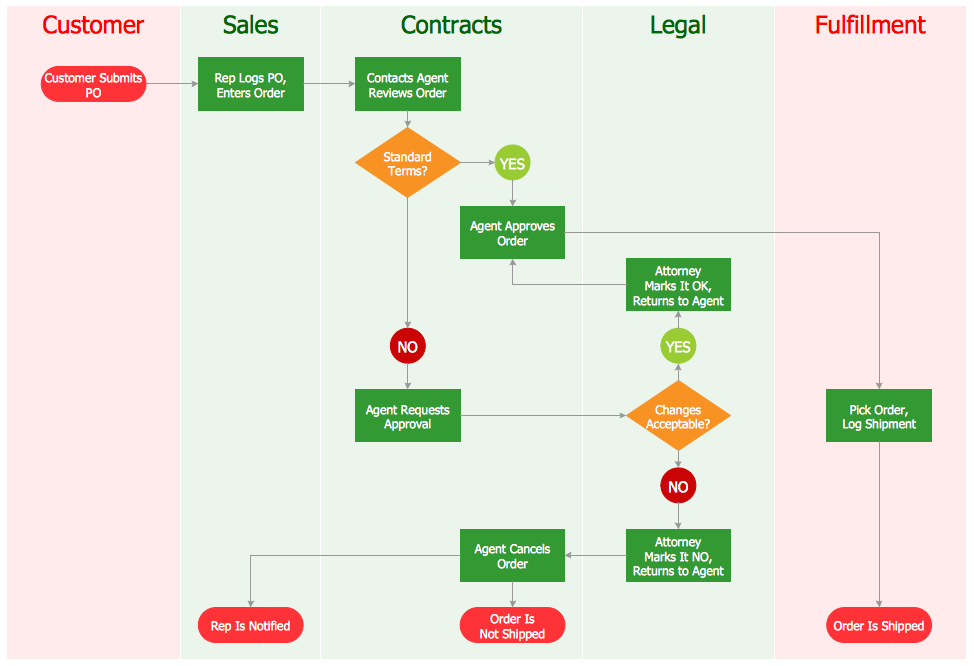

To compare AI and Artificial Cryptography honestly, you need a benchmark protocol—not a vibes-based debate. Here’s a workflow you can apply whether you’re studying security systems or crypto-market infrastructure.

Step 1: Define the task (and the stakes)

Write a one-sentence task definition:

Then label the stakes:

Step 2: Define the threat model

At minimum, specify:

Step 3: Choose metrics that match the threat model

Use a mix of AI and crypto-style metrics:

- Quality: accuracy, precision/recall, F1, calibration error

- Time: T_build, T_train, T_infer, T_audit
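For reference, the quality metrics above can be computed from raw counts. This is a minimal sketch with illustrative function names and hypothetical counts; the attack success rate is included as a crypto-style metric, following the comparison table earlier in the article.

```python
def precision_recall_f1(tp: int, fp: int, fn: int):
    """Precision, recall and F1 from true-positive, false-positive and false-negative counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def attack_success_rate(successes: int, attempts: int) -> float:
    """Fraction of adversarial attempts that achieved the attacker's goal."""
    if attempts <= 0:
        raise ValueError("attempts must be positive")
    return successes / attempts


# Hypothetical counts for one evaluation run.
precision, recall, f1 = precision_recall_f1(tp=80, fp=20, fn=20)
asr = attack_success_rate(successes=3, attempts=200)
print(precision, recall, f1, asr)
```

Reporting both kinds of number side by side keeps an impressive F1 from hiding a non-zero attack success rate.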

Step 4: Run apples-to-apples baselines

At least three baselines:

1. Classical crypto / rules baseline (spec-driven, deterministic checks)

2. AI baseline (simple model before you scale complexity)

3. Hybrid baseline (AI proposes, crypto verifies)
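The hybrid baseline is easiest to understand with a toy task. In the sketch below, an untrusted proposer suggests factorizations of a number and a deterministic check decides what gets accepted. The task, the function names, and the hard-coded `noisy_proposer` (a stand-in for a model that is sometimes wrong) are all assumptions for illustration.

```python
from typing import Callable, Iterable, Optional, Tuple

Candidate = Tuple[int, int]


def verify_factorization(n: int, p: int, q: int) -> bool:
    """Deterministic check: p and q are non-trivial factors whose product is n."""
    return 1 < p < n and 1 < q < n and p * q == n


def hybrid_factor(n: int, propose: Callable[[int], Iterable[Candidate]]) -> Optional[Candidate]:
    """Accept the first proposal that passes verification; otherwise accept nothing."""
    for p, q in propose(n):
        if verify_factorization(n, p, q):
            return (p, q)
    return None


def noisy_proposer(n: int) -> Iterable[Candidate]:
    """Hypothetical stand-in for a model: two wrong guesses, then a right one."""
    yield (3, 7)    # wrong product
    yield (1, n)    # trivial factorization, rejected by the bounds check
    yield (7, 13)   # correct for n = 91


result = hybrid_factor(91, noisy_proposer)
print(result)  # (7, 13)
```

The proposer can be as fast and as unreliable as you like; nothing is committed unless the verifier accepts it, so proposer errors cost time rather than correctness.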

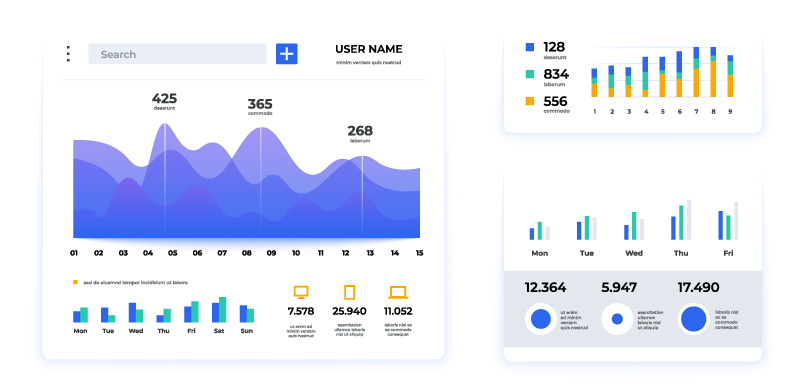

Step 5: Report results as a trade-off frontier

Avoid a single “winner.” Report a frontier:

A credible study doesn’t crown a champion; it maps trade-offs so engineers can choose based on risk.
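One way to report a frontier is to keep only the systems that no other system beats on both time and error. The sketch below computes that Pareto set; the system names and numbers are invented for illustration and are not measurements.

```python
def pareto_frontier(results):
    """Names of non-dominated systems, sorted by time.

    results maps a system name to (time_s, error_rate); lower is better for both.
    A system is dominated if another is at least as good on both axes
    and strictly better on at least one.
    """
    frontier = []
    for name, (t, e) in results.items():
        dominated = any(
            (t2 <= t and e2 <= e) and (t2 < t or e2 < e)
            for other, (t2, e2) in results.items()
            if other != name
        )
        if not dominated:
            frontier.append(name)
    return sorted(frontier, key=lambda n: results[n][0])


# Hypothetical results: (seconds per decision, error rate).
results = {
    "rules": (0.01, 0.12),
    "ml": (0.05, 0.05),
    "hybrid": (0.30, 0.01),
    "ml_large": (0.90, 0.06),
}

frontier = pareto_frontier(results)
print(frontier)  # ['rules', 'ml', 'hybrid']
```

In this made-up data, `ml_large` drops out because `ml` is both faster and more accurate, while the remaining three are legitimate choices at different risk tolerances.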

Step 6: Make it reproducible

This is where many comparisons fail. Keep:

This is also where tools that encourage structured decision trails (e.g., multi-step research notes, checklists, traceable outputs) can help. Many teams use platforms like SimianX AI to standardize how analysis is documented, challenged, and summarized—even outside investing contexts.
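A lightweight way to make a run traceable is to store a small manifest next to its results. The sketch below is one possible shape, assuming you want to fingerprint the configuration and dataset and record the random seed; the field names and the choice of SHA-256 are ours, not a prescribed format.

```python
import hashlib
import json


def run_manifest(config: dict, dataset_bytes: bytes, seed: int) -> dict:
    """Fingerprint a run so that two reports can be checked for identical inputs."""
    # Canonical JSON: sorted keys and fixed separators make the hash
    # independent of the order in which config keys were written.
    config_json = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return {
        "config_sha256": hashlib.sha256(config_json.encode("utf-8")).hexdigest(),
        "dataset_sha256": hashlib.sha256(dataset_bytes).hexdigest(),
        "seed": seed,
    }


# Hypothetical configuration and dataset contents.
manifest_a = run_manifest({"model": "baseline", "threshold": 0.5}, b"row1\nrow2\n", seed=7)
manifest_b = run_manifest({"threshold": 0.5, "model": "baseline"}, b"row1\nrow2\n", seed=7)

print(manifest_a == manifest_b)  # True: key order does not change the fingerprint
```

If two runs disagree and their manifests differ, you know to look at the inputs before debating the systems.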

A realistic interpretation: AI as a speed layer, cryptography as a correctness layer

In production security, the most useful comparison is not “AI vs cryptography,” but:

What hybrid looks like in practice

This hybrid framing often wins on both time and accuracy, because it respects what each paradigm is best at.

A quick checklist for deciding “AI-only” vs “Crypto-only” vs “Hybrid”

Choose AI-only when:

- errors are cheap,
- you need broad coverage fast,
- you can tolerate false positives and audit later.

Choose crypto-only when:

- correctness must be guaranteed,
- the environment is adversarial by default,
- failure is catastrophic.

Choose hybrid when:

- you need speed and strong guarantees,
- you can separate “suggest” from “commit” actions,
- verification can be automated.

A mini “study design” example you can copy

Here’s a practical template for running a comparison in 1–2 weeks:

Systems to compare:

- S1: deterministic validation (spec/rules)
- S2: ML classifier
- S3: ML triage + deterministic verification

Metrics:

- F1 (triage quality)
- attack success rate (security)
- T_infer (latency)
- T_audit (time to explain failures)

Outputs to report:

- confusion matrix for each scenario
- latency distribution (p50/p95)
- failure case taxonomy (what broke, why)
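Two of the items above, the confusion matrix and the p50/p95 latency figures, take only a few lines to produce. This sketch uses the nearest-rank percentile definition (other definitions interpolate and give slightly different values); the labels and latencies are hypothetical.

```python
from collections import Counter


def confusion_matrix(y_true, y_pred):
    """Count (true_label, predicted_label) pairs."""
    return Counter(zip(y_true, y_pred))


def percentile(samples, p: int):
    """Nearest-rank percentile for an integer p in 1..100."""
    ordered = sorted(samples)
    rank = (p * len(ordered) + 99) // 100  # integer ceiling of p% of n
    return ordered[max(rank, 1) - 1]


# Hypothetical labels (1 = malicious, 0 = benign) and latencies in milliseconds.
y_true = [1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0]
latencies_ms = [12, 15, 11, 14, 90, 13, 12, 16, 13, 250]

cm = confusion_matrix(y_true, y_pred)
p50 = percentile(latencies_ms, 50)
p95 = percentile(latencies_ms, 95)

print(dict(cm))
print(p50, p95)  # 13 250
```

The gap between p50 and p95 in this made-up sample is the reason to report a distribution: the median hides the slow requests that dominate worst-case time-to-decision.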

Use a simple, consistent reporting format so stakeholders can compare runs over time. If you already rely on structured research reports in your organization (or you use SimianX AI to keep a consistent decision trail), reuse the same pattern: hypothesis → evidence → verdict → risks → next test.

FAQ About artificial intelligence vs artificial cryptography time and accuracy comparison

What is the biggest mistake in AI vs cryptography comparisons?

Comparing average-case model accuracy to worst-case security guarantees. AI scores can look great while still failing under adversarial pressure or distribution shift.

How do I measure “accuracy” for cryptography-like tasks?

Define the task as a game: what does “success” mean for the attacker or classifier? Then measure error rates and (when relevant) attacker advantage over chance—plus how results change under adversarial conditions.
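For a two-outcome guessing game, “advantage over chance” can be estimated as the observed win rate minus 0.5, together with a rough check of whether that gap could be noise. The sketch below uses a normal approximation to the binomial; the function names and the trial counts are illustrative assumptions.

```python
import math


def advantage_over_chance(wins: int, trials: int, chance: float = 0.5) -> float:
    """Observed success rate minus the success rate of random guessing."""
    return wins / trials - chance


def z_score(wins: int, trials: int, chance: float = 0.5) -> float:
    """How many standard errors the observed rate sits above chance,
    using the binomial standard error under the 'pure guessing' hypothesis."""
    standard_error = math.sqrt(chance * (1 - chance) / trials)
    return advantage_over_chance(wins, trials, chance) / standard_error


# Hypothetical result: the attacker (or classifier) wins 530 of 1000 games.
adv = advantage_over_chance(530, 1000)
z = z_score(530, 1000)
print(round(adv, 3), round(z, 2))
```

A three-point advantage over 1000 trials sits just under two standard errors here, so it would need more trials before being reported as a real distinguishing capability.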

Is AI useful for cryptography or only for cryptanalysis?

AI can be useful in many supporting roles: testing, anomaly detection, implementation review assistance, and workflow automation. The safest pattern is usually that AI suggests and deterministic checks verify.

How do I compare time fairly if training takes days but inference takes milliseconds?

Report multiple clocks: T_train and T_infer separately, plus the end-to-end time-to-decision for the full workflow. The “best” system depends on whether you pay training cost once or repeatedly.
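Whether a one-off training cost is worth paying comes down to a break-even count. The sketch below assumes training happens once and compares against a baseline with a fixed per-decision time; the names and numbers are hypothetical.

```python
import math
from typing import Optional


def total_time_s(t_train_s: float, t_infer_s: float, n_decisions: int) -> float:
    """End-to-end time when training is paid once and inference is paid per decision."""
    return t_train_s + n_decisions * t_infer_s


def break_even_decisions(t_train_s: float, t_infer_s: float,
                         t_baseline_s: float) -> Optional[int]:
    """Smallest number of decisions at which the trained system is faster overall.

    Returns None if the baseline is at least as fast per decision,
    in which case the training cost is never recovered.
    """
    saving_per_decision = t_baseline_s - t_infer_s
    if saving_per_decision <= 0:
        return None
    return math.floor(t_train_s / saving_per_decision) + 1


# Hypothetical: one hour of training, 10 ms inference, 1 s per baseline decision.
n_star = break_even_decisions(t_train_s=3600.0, t_infer_s=0.01, t_baseline_s=1.0)
print(n_star)
print(total_time_s(3600.0, 0.01, n_star) < n_star * 1.0)  # True at break-even
```

If the model has to be retrained regularly, the same calculation applies per retraining period, which can move the break-even point substantially.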

What’s a good default approach for high-stakes security systems?

Start with cryptographic primitives and deterministic controls for the core guarantees, then add AI where it reduces operational load without expanding the attack surface—i.e., adopt a hybrid workflow.

Conclusion

A meaningful artificial intelligence vs artificial cryptography time and accuracy comparison is not about declaring a winner—it’s about choosing the right tool for the right job. AI often wins on speed, coverage, and automation; cryptography wins on deterministic correctness and adversarially grounded guarantees. In high-stakes environments, the most effective approach is frequently hybrid: AI for fast triage and exploration, cryptography for verification and enforcement.

If you want to operationalize this kind of comparison as a repeatable workflow—clear decision framing, consistent metrics, auditable write-ups, and fast iteration—explore SimianX AI to help structure and document your analysis from question to decision.