The Security of Artificial Intelligence-Based Cryptocurrencies

The security of artificial intelligence-based cryptocurrencies is no longer just about smart contracts and private keys. When a token, protocol, or “crypto product” depends on AI models—price prediction, risk scoring, automated market making, liquidation logic, fraud detection, or autonomous agents—you inherit two security universes at once: blockchain security and AI/ML security. The hard part is that these universes fail differently: blockchains fail loudly (exploits on-chain), while AI systems often fail quietly (bad decisions that look “plausible”). In this guide, we’ll build a practical threat model and a defensive blueprint you can apply—plus show how a structured research workflow (for example, using SimianX AI) helps you validate assumptions and reduce blind spots.

What counts as an “AI-based cryptocurrency”?

“AI-based cryptocurrency” is used loosely online, so security analysis starts with a clean definition. In practice, projects usually fall into one (or more) categories:

1. AI-in-the-protocol: AI directly influences on-chain logic (e.g., parameter updates, dynamic fees, risk limits, collateral factors).

2. AI as an oracle: an off-chain model produces signals that feed contracts (e.g., volatility, fraud scores, risk tiers).

3. AI agents as operators: autonomous bots manage treasury, execute strategies, or run keepers/liquidations.

4. AI token ecosystems: the token incentivizes data, compute, model training, inference marketplaces, or agent networks.

5. AI-branded tokens (marketing-led): minimal AI dependence; risk is mostly governance, liquidity, and smart contracts.

Security takeaway: the more AI outputs affect value transfer (liquidations, mint/burn, collateral, treasury moves), the more you must treat the AI pipeline as critical infrastructure, not “just analytics.”

The moment a model output can trigger on-chain state changes, model integrity becomes money integrity.

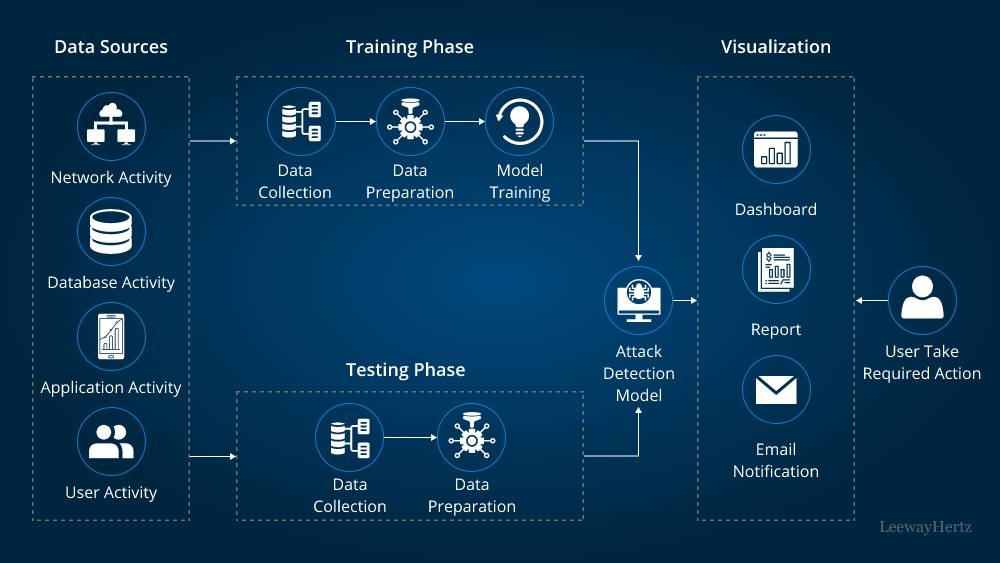

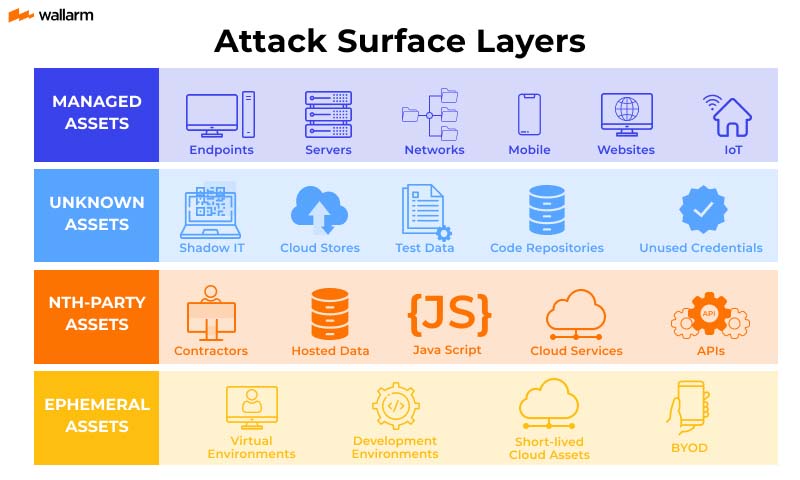

A layered threat model for AI-based crypto security

A useful framework is to treat AI-based crypto systems as five interlocking layers. You want controls at every layer because attackers pick the weakest one.

| Layer | What it includes | Typical failure mode | Why it’s unique in AI-based crypto |
|---|---|---|---|
| L1: On-chain code | contracts, upgrades, access control | exploitable bug, admin abuse | value transfer is irreversible |
| L2: Oracles & data | price feeds, on-chain events, off-chain APIs | manipulated inputs | AI depends on data quality |
| L3: Model & training | datasets, labels, training pipeline | poisoning, backdoors | model can be “correct-looking” but wrong |
| L4: Inference & agents | endpoints, agent tools, permissions | prompt injection, tool abuse | agent “decisions” can be coerced |
| L5: Governance & ops | keys, multisig, monitoring, incident response | slow reaction, weak controls | most “AI failures” are operational |

Core security risks (and what makes AI-based crypto different)

1) Smart contract vulnerabilities still dominate—AI can amplify blast radius

Classic issues (re-entrancy, access control errors, upgrade bugs, oracle manipulation, precision/rounding, MEV exposure) remain the top risk. The AI twist is that AI-driven automation can trigger those flaws faster and more often, especially when agents operate 24/7.

Defenses

2) Oracle and data manipulation—now with “AI-friendly” poisoning

Attackers don’t always need to break the chain; they can bend the model’s inputs:

This is data poisoning, and it’s dangerous because the model can continue to pass normal metrics while quietly learning attacker-chosen behavior.

Defenses

If you can’t prove where the model’s inputs came from, you can’t prove why the protocol behaves the way it does.

3) Adversarial ML attacks—evasion, backdoors, and model extraction

AI models can be attacked in ways that don’t look like traditional “hacks”:

Defenses

- Protect inference endpoints with rate limiting, authentication, anomaly detection, and query budgets.
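Query budgets and rate limiting, mentioned above, can be illustrated with a small sliding-window counter. This is a minimal sketch, not a production limiter: the `QueryBudget` class, its parameters, and the idea of passing the current time in explicitly are all assumptions made for illustration.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class QueryBudget:
    """Hypothetical per-caller query budget over a sliding time window."""

    def __init__(self, max_queries: int, window_seconds: float) -> None:
        self.max_queries = max_queries
        self.window_seconds = window_seconds
        # Timestamps of accepted queries, kept per caller.
        self._history: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, caller: str, now: float) -> bool:
        """Return True and record the query if the caller is within budget."""
        history = self._history[caller]
        # Drop timestamps that have aged out of the window.
        while history and now - history[0] >= self.window_seconds:
            history.popleft()
        if len(history) >= self.max_queries:
            return False
        history.append(now)
        return True
```

Capping queries per caller raises the cost of model extraction, which relies on sending many probing inputs. Real deployments would also need authentication so that "caller" cannot be trivially rotated.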

4) Prompt injection and tool abuse in AI agents

If agents can call tools (trade, bridge, sign, post governance, update parameters), they can be attacked via:

Defenses

5) Governance & operational security—still the easiest way in

Even the best code and models fail if:

Defenses

How secure are artificial intelligence-based cryptocurrencies, really?

A practical evaluation rubric (builders + investors)

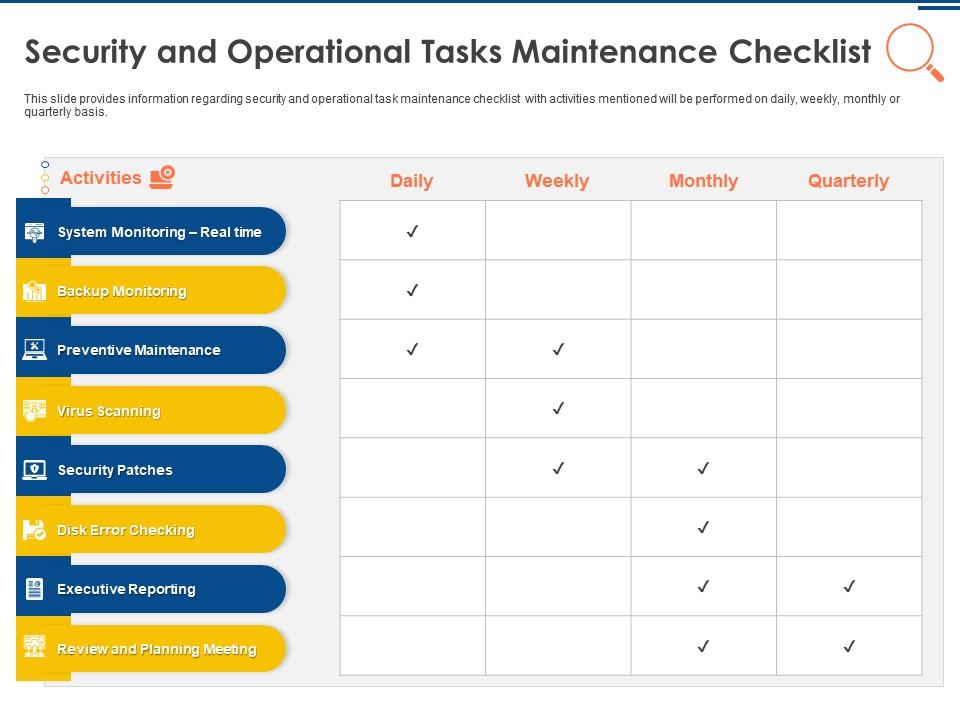

Use this checklist to score real projects. You don’t need perfect answers—you need falsifiable evidence.

A. On-chain controls (must-have)

B. Data and oracle integrity (AI-critical)

C. Model governance (AI-specific)

D. Agent safety (if agents execute actions)

E. Economic and incentive safety

A simple scoring method

Assign 0–2 points to each of the five categories below (0 = unknown/unsafe, 1 = partial, 2 = strong evidence), for a maximum of 10. A project scoring below 6/10 should be treated as “experimental” regardless of marketing.

1. On-chain controls (0–2)

2. Data/oracles (0–2)

3. Model governance (0–2)

4. Agent safety (0–2)

5. Incentives/economics (0–2)
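The scoring method above can be sketched in a few lines. The category keys and the `"meets baseline"` label are names chosen for this illustration; only the 0–2 scale, the five categories, and the below-6 "experimental" cutoff come from the rubric itself.

```python
from typing import Dict, Tuple

# Hypothetical keys for the five rubric categories.
CATEGORIES = (
    "on_chain_controls",
    "data_oracles",
    "model_governance",
    "agent_safety",
    "incentives_economics",
)

EXPERIMENTAL_CUTOFF = 6  # totals below this are treated as "experimental"


def score_project(scores: Dict[str, int]) -> Tuple[int, str]:
    """Validate per-category scores (0, 1, or 2) and return (total, label)."""
    for name in CATEGORIES:
        if name not in scores:
            raise ValueError(f"missing category: {name}")
        if scores[name] not in (0, 1, 2):
            raise ValueError(f"{name} must be 0, 1, or 2")
    total = sum(scores[name] for name in CATEGORIES)
    # "meets baseline" is an assumed label for anything at or above the cutoff.
    label = "experimental" if total < EXPERIMENTAL_CUTOFF else "meets baseline"
    return total, label
```

Requiring every category to be present means an unanswered question cannot silently count as a pass; an unknown has to be entered as 0.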

Defensive architecture patterns that actually work

Here are patterns used in high-assurance systems, adapted for AI-based crypto:

Pattern 1: “AI suggests, deterministic rules decide”

Let the model propose parameters (risk tiers, fee changes), but enforce changes with deterministic constraints:

- Confidence gating (apply a change only when p > threshold)

Why it works: even if the model is wrong, the protocol fails gracefully.
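One way to picture this pattern is a small guard function that sits between the model and the protocol. This is a sketch under assumptions: the `Guardrails` fields, the specific bounds, and the per-update step limit are illustrative choices, and a real protocol would enforce the same checks on-chain rather than in Python.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Guardrails:
    """Hypothetical deterministic limits for one protocol parameter."""
    min_value: float       # hard floor for the parameter
    max_value: float       # hard ceiling for the parameter
    max_step: float        # largest allowed change per update
    min_confidence: float  # ignore suggestions at or below this confidence


def apply_suggestion(current: float, suggested: float,
                     confidence: float, rails: Guardrails) -> float:
    """Return the new parameter value after applying deterministic rules."""
    # Confidence gate: a low-confidence suggestion changes nothing.
    if confidence <= rails.min_confidence:
        return current
    # Step limit: move toward the suggestion, but only by max_step at most.
    step = max(-rails.max_step, min(rails.max_step, suggested - current))
    # Absolute bounds: never leave the [min_value, max_value] range.
    return max(rails.min_value, min(rails.max_value, current + step))
```

With a collateral factor of 0.75 and a step limit of 0.05, a model that suddenly suggests 0.20 can move the parameter only to about 0.70 in one update, which gives monitoring and humans time to react.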

Pattern 2: Multi-source, multi-model consensus

Instead of relying on one model, use ensemble checks:

Then require consensus (or require that the “disagreement score” is below a limit).

Why it works: poisoning one path becomes harder.
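A consensus check of this kind might look like the sketch below. The definition of the disagreement score (largest absolute deviation from the median) is an assumption made for illustration; the article does not specify one, and other measures would work as well.

```python
from statistics import median
from typing import List, Optional


def consensus(signals: List[float], max_disagreement: float) -> Optional[float]:
    """Return the median signal, or None if sources disagree too much.

    Disagreement is measured here as the largest absolute deviation
    from the median (an illustrative choice, not a standard).
    """
    if len(signals) < 2:
        return None  # a single source cannot form a consensus
    mid = median(signals)
    disagreement = max(abs(s - mid) for s in signals)
    if disagreement > max_disagreement:
        return None  # caller should fall back to a safe default
    return mid
```

Returning `None` instead of a best guess forces the caller to handle the no-consensus case explicitly, for example by keeping the previous parameter value.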

Pattern 3: Secure data supply chain

Treat datasets like code:

Why it works: most AI attacks are data attacks.
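Treating a dataset like code can start with something as simple as pinning a content hash and refusing to train or serve when it changes. The sketch below assumes the dataset fits in memory as a list of JSON-serializable records; the function names are hypothetical.

```python
import hashlib
import json
from typing import Any, Dict, List


def fingerprint(records: List[Dict[str, Any]]) -> str:
    """Return a SHA-256 hex digest of the records in canonical JSON form."""
    # sort_keys makes the digest independent of dict key order;
    # record order still matters, which is intentional here.
    canonical = json.dumps(records, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def verify(records: List[Dict[str, Any]], pinned_digest: str) -> bool:
    """Check that the records still match a previously pinned digest."""
    return fingerprint(records) == pinned_digest
```

A pinned digest does not prove the data was clean when it was recorded. It only shows that the data has not changed since review, which is the property you need to make a poisoning attempt visible.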

Pattern 4: Agent permission partitioning

Separate:

Why it works: prompt injection becomes less fatal.
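A permission gate between the agent and its tools is one way to make this concrete. The sketch below assumes a policy made of an action allowlist, a human-approval threshold, and a hard cap; these fields and the `authorize` function are illustrative, not a description of any particular agent framework.

```python
from dataclasses import dataclass
from enum import Enum
from typing import FrozenSet


class Decision(Enum):
    ALLOW = "allow"
    NEEDS_APPROVAL = "needs_approval"
    DENY = "deny"


@dataclass(frozen=True)
class AgentPolicy:
    """Hypothetical least-privilege policy for one agent."""
    allowed_actions: FrozenSet[str]  # anything not listed is denied
    approval_threshold: float        # above this, a human must approve
    hard_cap: float                  # above this, always denied


def authorize(policy: AgentPolicy, action: str, value: float) -> Decision:
    """Decide whether the agent may perform an action of a given value."""
    if action not in policy.allowed_actions:
        return Decision.DENY
    if value < 0 or value > policy.hard_cap:
        return Decision.DENY
    if value > policy.approval_threshold:
        return Decision.NEEDS_APPROVAL
    return Decision.ALLOW
```

The gate runs outside the model, so an injected prompt can change what the agent asks for but not what the policy permits.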

Step-by-step: How to audit an AI-based crypto project (fast but serious)

1. Map value-transfer paths

- List every contract function that moves funds or changes collateral rules.

2. Identify AI dependencies

- Which decisions depend on AI outputs? What happens if outputs are wrong?

3. Trace the data pipeline

- For each feature: source → transformation → storage → model input.

4. Test manipulation

- Simulate wash trading, extreme volatility, sentiment spam, API outages.

5. Review model governance

- Versioning, retraining triggers, drift monitoring, rollback plan.

6. Inspect agent permissions

- Tools, keys, allowlists, rate limits, approvals.

7. Validate monitoring and response

- Who gets paged? What triggers circuit breakers? Are playbooks written?

8. Evaluate incentives

- Does anyone profit by poisoning, spamming, or destabilizing signals?
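Step 4 (test manipulation) can be prototyped before touching a real pipeline. The sketch below injects a synthetic wash-trading spike into a volume series and measures how far a feature moves. The helper names, the spike model, and the sample numbers are all assumptions for illustration.

```python
from statistics import mean, median
from typing import Callable, List


def inject_wash_trades(volumes: List[float], spike_factor: float,
                       count: int) -> List[float]:
    """Return a copy of the series with `count` inflated observations added."""
    peak = max(volumes)
    return volumes + [peak * spike_factor] * count


def relative_shift(feature: Callable[[List[float]], float],
                   clean: List[float], attacked: List[float]) -> float:
    """Relative change in a feature between clean and attacked data."""
    base = feature(clean)
    return abs(feature(attacked) - base) / abs(base)


if __name__ == "__main__":
    clean = [100.0, 110.0, 90.0, 105.0, 95.0]
    attacked = inject_wash_trades(clean, spike_factor=50.0, count=1)
    print("mean shift:  ", relative_shift(mean, clean, attacked))
    print("median shift:", relative_shift(median, clean, attacked))
```

In this toy series a single fake observation moves the mean far more than the median, which is the kind of finding an audit should surface: features built on easily inflated statistics give an attacker cheap leverage over the model.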

Pro tip: A structured research workflow helps you avoid missing links between layers. For example, SimianX AI-style multi-agent analysis can be used to separate assumptions, run cross-checks, and keep an auditable “decision trail” when evaluating AI-driven crypto systems—especially when narratives and data shift fast.

Common “security theater” red flags in AI-based crypto

Watch for these patterns:

Security is not a feature list. It’s evidence that a system fails safely when the world behaves adversarially.

Practical tools and workflows (where SimianX AI fits)

Even with solid technical controls, investors and teams still need repeatable ways to evaluate risk. A good workflow should:

You can use SimianX AI as a practical framework to structure that process—especially by organizing questions into risk, data integrity, model governance, and execution constraints, and by producing consistent research notes. If you publish content for your community, linking supporting research helps users make safer decisions (see SimianX’s crypto workflow story hub for examples of structured analysis approaches).

FAQ About the security of artificial intelligence-based cryptocurrencies

What is the biggest security risk in AI-based cryptocurrencies?

Most failures still come from smart contract and operational security, but AI adds a second failure mode: manipulated data that causes “valid-looking” but harmful decisions. You need controls for both layers.

How can I tell if an AI token project is actually using AI securely?

Look for evidence: model versioning, data provenance, adversarial testing, and clear failure modes (what happens when data is missing or confidence is low). If none of these are documented, treat “AI” as marketing.

How can I audit AI-based crypto projects without reading thousands of lines of code?

Start with a layered threat model: on-chain controls, data/oracles, model governance, and agent permissions. If you can’t map how AI outputs influence value transfer, you can’t evaluate the risk.

Are AI trading agents safe to run on crypto markets?

They can be, but only with least privilege, allowlisted actions, rate limits, and human approvals for high-impact moves. Never give an agent unrestricted signing authority.

Does decentralization make AI safer in crypto?

Not automatically. Decentralization can reduce single points of failure, but it can also create new attack surfaces (malicious contributors, poisoned data markets, incentive exploits). Safety depends on governance and incentives.

Conclusion

The security of AI-based cryptocurrencies demands a broader mindset than traditional crypto audits: you must secure code, data, models, agents, and governance as one system. The best designs assume inputs are adversarial, limit the damage of wrong model outputs, and require reproducible evidence—not vibes. If you want a repeatable way to evaluate AI-driven crypto projects, build a checklist-driven workflow and keep a clear decision trail. You can explore structured analysis approaches and research tooling on SimianX AI to make your AI-crypto security reviews more consistent and defensible.