AI Agents Analyze DeFi Protocol Risks, TVL, and Real Yield Rates

DeFi moves fast: liquidity rotates, incentives change, and risk can compound invisibly across smart contracts, oracles, bridges, and governance. That is why AI agents analyze DeFi protocol risks, TVL, and real yield rates best when they are built as systems rather than single models: systems that collect evidence, test assumptions, and keep a decision trail. In this research-style guide, you’ll learn a step-by-step framework for building an agentic workflow that monitors protocols, explains risk, and separates sustainable yield from emissions-driven noise. We’ll also reference SimianX AI as an example of how to structure multi-agent analysis into auditable, repeatable research loops you can reuse across protocols.

Why DeFi analysis needs agents (not just dashboards)

Dashboards are great at showing numbers. But DeFi risk analysis requires understanding mechanisms:

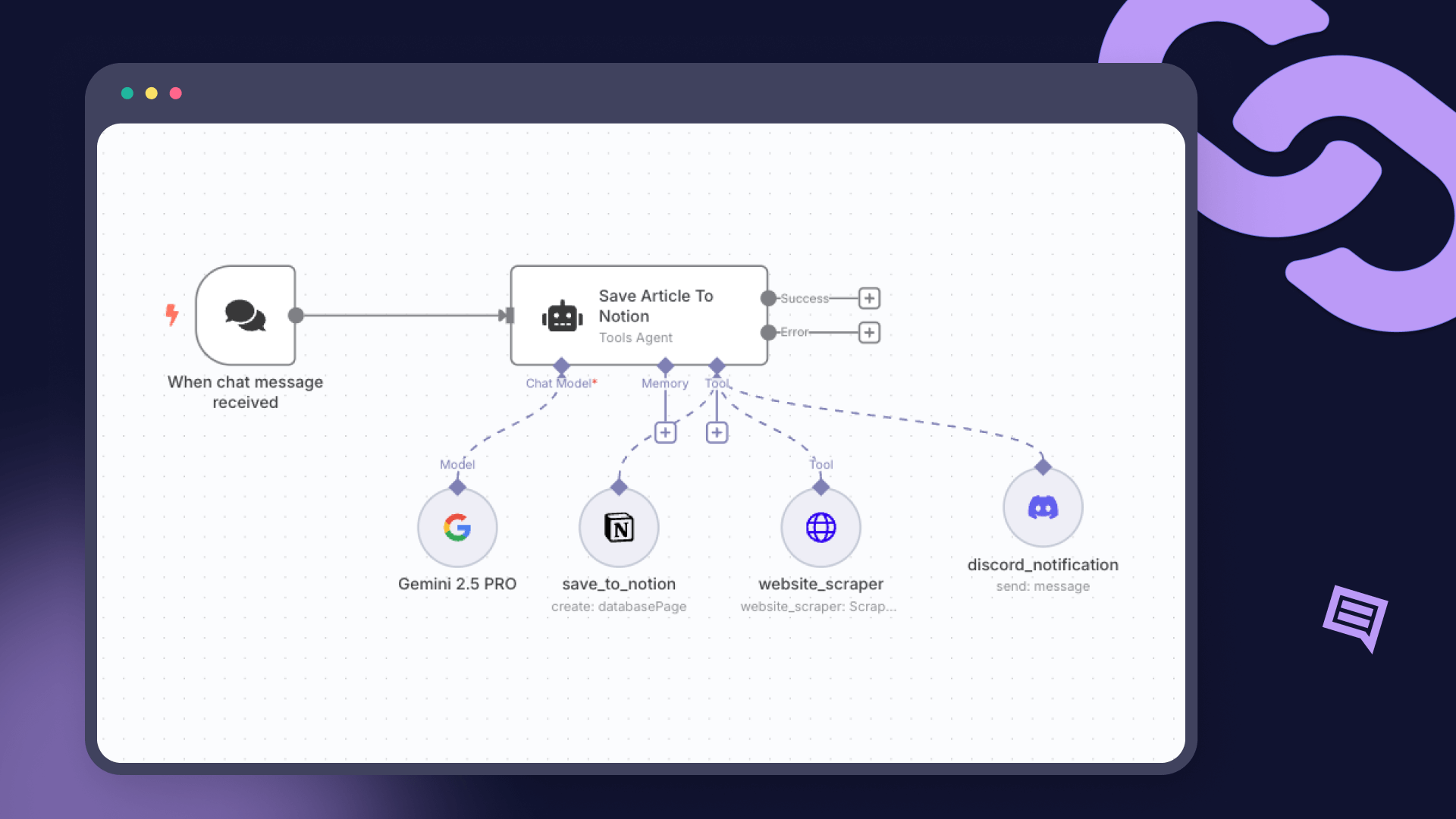

A modern AI-agent workflow handles this by splitting the problem into specialized roles: one agent collects and validates data, another explains the protocol design, another scores risks, and another checks whether “yield” is actually sustainable.

Key idea: In DeFi, the story is not the chart. The story is the chain of causes behind the chart.

Core concepts: DeFi protocol risk, TVL, and “real” yield

Before building the agent system, define the objects you’re measuring:

1) Protocol risk (what can break, how, and how likely)

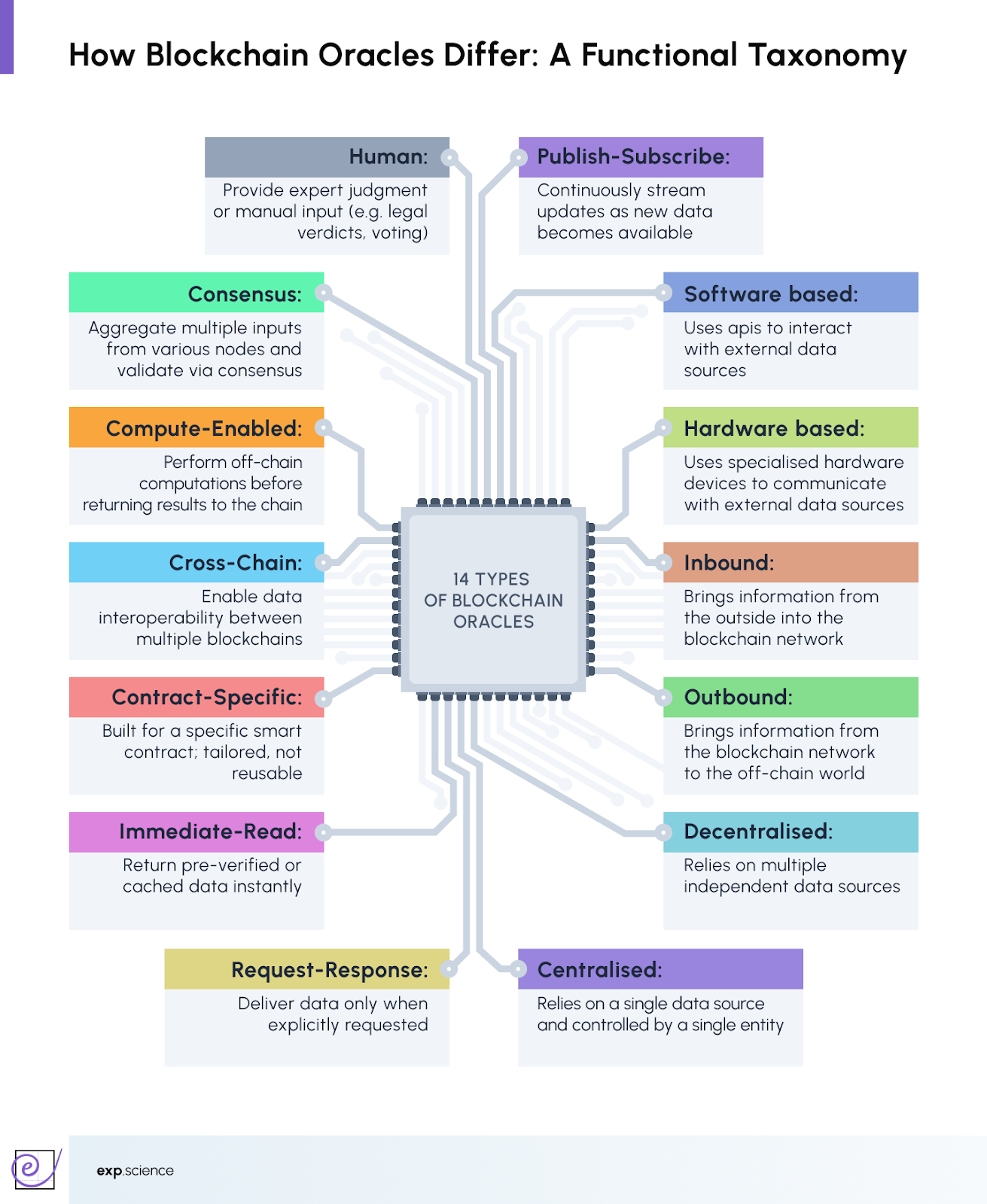

DeFi protocol risk is multi-dimensional. It includes smart contract vulnerabilities, oracle attacks, liquidity shocks, governance failures, bridge contagion, and operational centralization (admin keys, upgrade controls, multisig signers).

2) TVL (Total Value Locked)

TVL is commonly used as a snapshot of how much value users have deposited into a protocol’s contracts. It is useful, but it can be inflated by incentives or looping, and capital that looks “sticky” can turn out to be fragile.

3) Actual yield rates (a.k.a. realized yield, real yield)

Protocols often advertise APY that mixes:

For rigorous analysis, agents should separate where returns come from and how sensitive they are to market regimes, volume, and liquidity.

A multi-agent architecture for DeFi analysis

A reliable approach is to build a pipeline of cooperating agents, each with a narrow scope and explicit outputs. Here’s a practical blueprint you can implement with LLM agents + deterministic on-chain analytics:

1. Ingestion Agent

Collects on-chain data (events, balances, contract calls), off-chain metadata (docs, audits), and market data (prices, volumes). Produces normalized datasets with timestamps and provenance.

2. Protocol Mapper Agent

Reads docs and contracts, then outputs a structured “protocol map”: components, dependencies (oracles, bridges), upgradeability, admin roles, fee paths, and collateral mechanics.

3. TVL Analyst Agent

Computes TVL accurately, decomposes it (by asset, chain, pool), identifies concentration risk, and detects anomalies (sudden inflows/outflows, wash TVL, looping).

4. Yield Analyst Agent

Calculates realized yield using fee revenue and interest flows, separates emissions, adjusts for compounding assumptions, and highlights risks like IL (impermanent loss) or liquidation exposure.

5. Risk Scoring Agent

Converts evidence into an explainable risk model (not a black box). Outputs category scores, supporting signals, and “what would change my mind” triggers.

6. Monitoring & Alert Agent

Watches for governance proposals, parameter changes, admin actions, oracle deviations, and unusual flows. Generates alerts with severity and recommended actions.

7. Report Agent

Produces a human-readable research memo: thesis, risks, TVL health, yield sustainability, and open questions.
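The hand-offs between these agents can be sketched in a few lines. This is a minimal illustration, assuming each agent is a plain function that reads and extends a shared evidence record. The `Evidence` class, the two stub stages, and every number in them are hypothetical placeholders, not part of any specific framework or of SimianX AI.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Evidence:
    """Shared record passed between agents; every stage appends to the trail."""
    protocol: str
    facts: dict = field(default_factory=dict)
    trail: list = field(default_factory=list)  # audit trail of (stage, note)


Stage = Callable[[Evidence], Evidence]


def ingest(ev: Evidence) -> Evidence:
    # Hypothetical stub: a real ingestion agent would query an indexer or node.
    ev.facts["tvl_usd"] = 120_000_000
    ev.facts["fees_30d_usd"] = 450_000
    ev.trail.append(("ingestion", "loaded placeholder balances and fees"))
    return ev


def map_protocol(ev: Evidence) -> Evidence:
    # Hypothetical stub: a real mapper would parse docs and contract roles.
    ev.facts["upgradeable"] = True
    ev.facts["oracles"] = ["primary_feed"]
    ev.trail.append(("protocol_mapper", "recorded upgradeability and oracle deps"))
    return ev


def run_pipeline(protocol: str, stages: list[Stage]) -> Evidence:
    """Run stages in order so each one sees the outputs of earlier ones."""
    ev = Evidence(protocol=protocol)
    for stage in stages:
        ev = stage(ev)
    return ev


result = run_pipeline("example-protocol", [ingest, map_protocol])
for stage_name, note in result.trail:
    print(f"{stage_name}: {note}")
```

Keeping the trail as data (rather than log text) is one way to make each downstream claim traceable to the stage that produced it.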

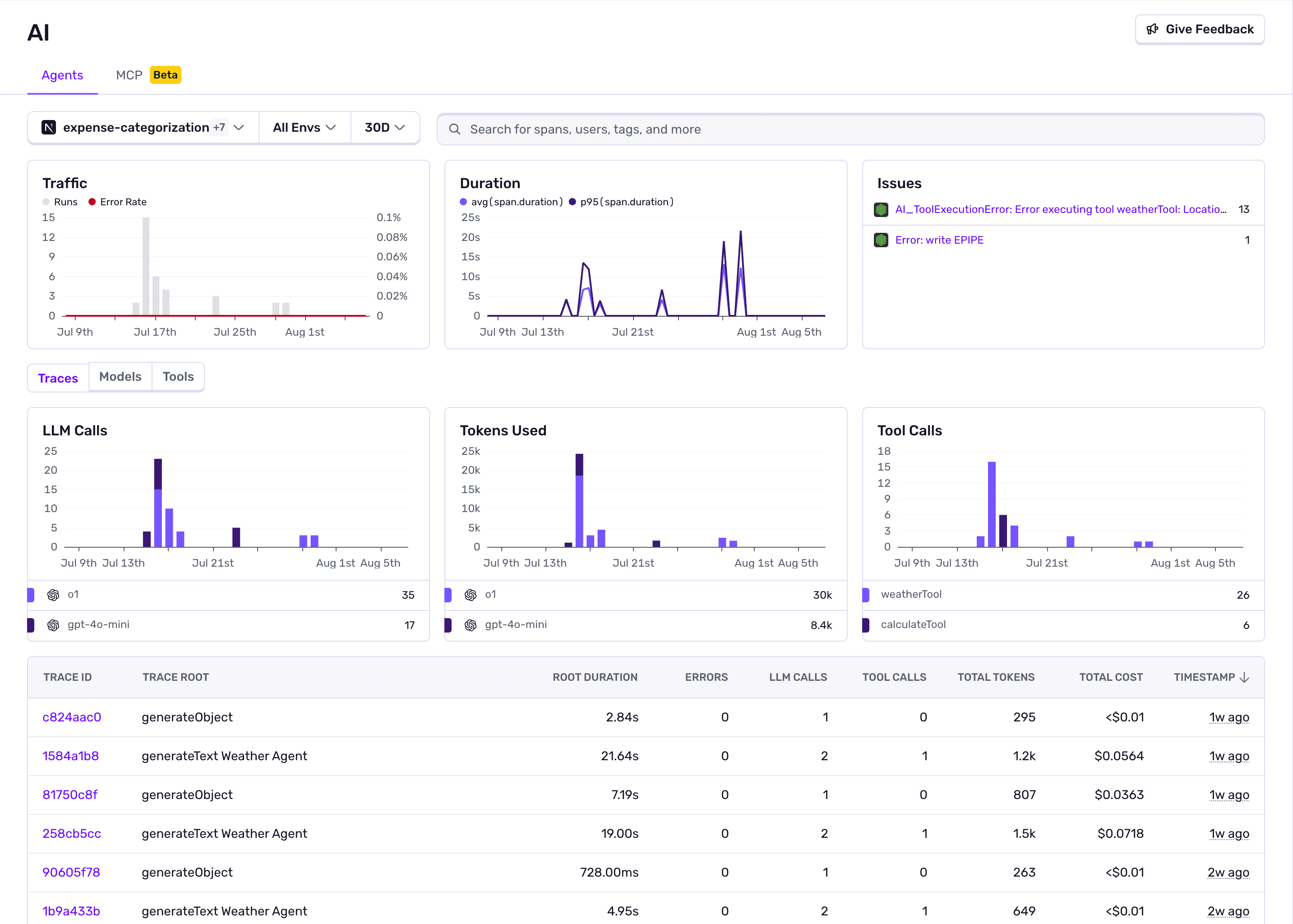

SimianX AI is a useful mental model here: treat analysis as a repeatable research loop with clear stages and an audit trail, not as a one-off prediction. You can apply the same workflow to DeFi protocols, rotating among chains and categories while keeping outputs consistent. (You can explore the platform approach at SimianX AI.)

The risk framework: what agents should score and why

A robust DeFi risk score is not one number. It’s a portfolio of risks with separate evidence trails.

A practical risk taxonomy (agent-friendly)

| Risk category | What can go wrong | High-signal indicators an agent can monitor |
|---|---|---|
| Smart contract risk | Bugs, exploits, reentrancy, auth flaws | Upgradeable proxies, complex privilege graph, unaudited changes, unusual call patterns |
| Oracle risk | Price manipulation, stale feeds | Low-liquidity feeds, large deviation between sources, rapid TWAP drift, oracle heartbeat failures |
| Liquidity risk | Exit becomes costly/impossible | TVL concentration, shallow order books, high slippage, reliance on single pool |
| Governance risk | Parameter capture, malicious proposals | Low voter participation, whale concentration, rushed proposals, admin bypass patterns |
| Bridge/cross-chain risk | Contagion via bridges | Heavy bridged TVL share, reliance on single bridge, bridge exploit history |
| Economic design risk | Insolvent mechanisms, reflexive incentives | Unsustainable emissions, negative unit economics, “ponzi-like” reward dependency |
| Operational/centralization risk | Admin key compromise, censorship | Single multisig, small signer set, opaque upgrade process, privileged pausers |

How agents turn risk into a score (without pretending certainty)

A good scoring agent does three things:

1. Evidence grounding: every risk claim points to a concrete signal (contract role graph, governance history, oracle design, liquidity depth, revenue streams).

2. Mechanism reasoning: the agent explains how the failure happens.

3. Counterfactual triggers: the agent defines what data would reduce the risk score (e.g., “two new audits + timelocked upgrades + oracle redundancy”).

Best practice: Treat risk scoring as explainable classification, not prophecy.

Example: a simple, explainable scoring template

Then convert to an overall grade only at the end—and keep the breakdown visible.
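A minimal sketch of such a template and of the final conversion step, assuming each category carries a 1 to 5 score (5 = highest risk) together with its evidence and a counterfactual trigger. The scale, the weights, and the grade cut-offs are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass
class CategoryScore:
    category: str
    score: int            # 1 (low risk) .. 5 (high risk); the scale is an assumption
    evidence: list[str]   # concrete signals backing the score
    would_lower: str      # counterfactual trigger that would reduce the score


# Hypothetical weights; they sum to 1.0 and should be tuned per use case.
WEIGHTS = {"smart_contract": 0.5, "oracle": 0.25, "governance": 0.25}


def overall_grade(scores: list[CategoryScore]) -> tuple[float, str]:
    """Weighted average of category scores, mapped to a letter grade."""
    weighted = sum(WEIGHTS[s.category] * s.score for s in scores)
    # Illustrative cut-offs, not an industry standard.
    if weighted < 2.0:
        grade = "A"
    elif weighted < 3.0:
        grade = "B"
    elif weighted < 4.0:
        grade = "C"
    else:
        grade = "D"
    return round(weighted, 2), grade


scores = [
    CategoryScore("smart_contract", 3, ["upgradeable proxy", "one audit"],
                  "second audit plus timelocked upgrades"),
    CategoryScore("oracle", 2, ["two independent feeds"],
                  "heartbeat monitoring with fallback"),
    CategoryScore("governance", 5, ["top 3 wallets hold a majority of votes"],
                  "broader voter participation over several proposals"),
]

value, grade = overall_grade(scores)
for s in scores:  # keep the breakdown visible next to the grade
    print(f"{s.category}: {s.score} ({'; '.join(s.evidence)})")
print(f"overall: {value} -> {grade}")
```

Printing the per-category lines before the grade keeps the breakdown in front of the reader, so the single letter never travels without its evidence.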

TVL analysis: what AI agents should compute (beyond the headline number)

TVL is often treated like a scoreboard. Agents should treat it like a health signal—with context.

Step 1: Decompose TVL into what actually matters

A TVL agent should output:

Step 2: Measure quality of TVL, not just quantity

High TVL can still be weak if it is:

Useful derived metrics:
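Two quality signals named in this guide’s FAQ, depositor concentration and retention after incentives drop, can be computed as follows. This is a minimal sketch: the function names, the choice of the Herfindahl index as the concentration measure, and the sample balances are assumptions made for illustration.

```python
def top_n_share(deposits: dict[str, float], n: int = 10) -> float:
    """Fraction of TVL held by the n largest depositors."""
    total = sum(deposits.values())
    if total <= 0:
        return 0.0
    largest = sorted(deposits.values(), reverse=True)[:n]
    return sum(largest) / total


def herfindahl_index(deposits: dict[str, float]) -> float:
    """Sum of squared TVL shares: 1/N for N equal depositors, 1.0 for one."""
    total = sum(deposits.values())
    if total <= 0:
        return 0.0
    return sum((v / total) ** 2 for v in deposits.values())


def retention(tvl_before: float, tvl_after: float) -> float:
    """Share of TVL still present after an incentive cut, capped at 1.0."""
    if tvl_before <= 0:
        return 0.0
    return min(tvl_after / tvl_before, 1.0)


# Placeholder balances in USD, keyed by made-up addresses.
deposits = {"0xaaa": 50.0, "0xbbb": 25.0, "0xccc": 15.0, "0xddd": 10.0}

print(f"top-2 share: {top_n_share(deposits, 2):.2f}")
print(f"HHI:         {herfindahl_index(deposits):.3f}")
print(f"retention:   {retention(100.0, 60.0):.2f}")
```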

Step 3: Detect anomalies with an “explain-then-alert” workflow

A monitoring agent should not just fire alerts. It should produce a mini-causal explanation:
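One possible shape for this, sketched under simple assumptions: daily net flows are compared against their own history with a z-score, and each flagged day is paired with a known event (for example a governance action) as a candidate cause. The threshold, the minimum history length, and the sample data are hypothetical.

```python
from statistics import mean, pstdev


def explain_then_alert(net_flows: list[float], events: dict[int, str],
                       z_threshold: float = 3.0,
                       min_history: int = 5) -> list[dict]:
    """Flag days whose net flow deviates strongly from prior history and
    attach any known event on that day as a candidate explanation."""
    alerts = []
    for day in range(min_history, len(net_flows)):
        history = net_flows[:day]          # only data available before `day`
        mu, sigma = mean(history), pstdev(history)
        if sigma == 0:
            continue                       # flat history: z-score undefined
        z = (net_flows[day] - mu) / sigma
        if abs(z) >= z_threshold:
            alerts.append({
                "day": day,
                "net_flow": net_flows[day],
                "z_score": round(z, 2),
                "candidate_cause": events.get(day, "unexplained: needs review"),
            })
    return alerts


# Placeholder daily net flows (in millions USD) and one known event.
flows = [1.0, -2.0, 0.5, 1.5, -1.0, 0.0, -40.0, 2.0]
events = {6: "reward emissions cut by governance vote"}

alerts = explain_then_alert(flows, events)
for alert in alerts:
    print(alert)
```

A matching event is only a candidate cause, not proof; days with no match are labeled for review rather than silently dropped.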

Common TVL red flags (agent checklist):

Actual yield rates: how agents calculate realized and real yield

“Yield” is one of the easiest metrics to misunderstand because protocols can advertise:

A practical definition for “actual yield rates”

For an agent system, report actual yield as a set of components rather than a single number:

- Fee/Interest APR

- Incentive APR

- Total APR

- Volatility / drawdown / tail risk notes

Step-by-step: yield decomposition agents should produce

1. Collect distributions

- Trading fees to LPs

- Borrow interest to lenders

- Liquidation penalties (if applicable)

- Protocol revenue share to stakers

2. Separate incentives

- Reward token emissions

- Bonus programs

- “Points” or off-chain rewards (if monetizable)

3. Normalize

- Use time-weighted principal (capital at work)

- Adjust for compounding assumptions

- Express in base currency (e.g., USD) and native asset units

4. Risk-adjust

- IL estimates (for AMMs)

- Liquidation probability bands (for lending/leveraged vaults)

- Correlation to market regime (bull/bear)
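Two of these steps have simple closed forms. The sketch below computes a time-weighted principal from (days, balance) periods and the standard impermanent-loss expression for a 50/50 constant-product pool, 2*sqrt(r)/(1+r) - 1, where r is the ratio of the new price to the entry price. The function names and sample values are illustrative, and pools with other designs (weighted or concentrated liquidity) need different formulas.

```python
from math import sqrt


def time_weighted_principal(periods: list[tuple[float, float]]) -> float:
    """Average capital at work, given (days, balance) periods."""
    total_days = sum(days for days, _ in periods)
    if total_days <= 0:
        raise ValueError("periods must cover a positive number of days")
    return sum(days * balance for days, balance in periods) / total_days


def impermanent_loss(price_ratio: float) -> float:
    """Loss of a 50/50 constant-product LP position versus simply holding.

    price_ratio is new_price / entry_price. The result is a non-positive
    fraction, e.g. -0.2 means the position is worth 20% less than holding.
    """
    if price_ratio <= 0:
        raise ValueError("price_ratio must be positive")
    return 2 * sqrt(price_ratio) / (1 + price_ratio) - 1


# 10 days with 1,000 deposited, then 20 days with 4,000 deposited.
principal = time_weighted_principal([(10, 1000.0), (20, 4000.0)])
print(f"time-weighted principal: {principal:.2f}")

for ratio in (1.0, 2.0, 4.0):
    print(f"price x{ratio}: IL = {impermanent_loss(ratio):.4f}")
```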

Example formulas (simple but useful)

fee_apr = (fees_paid_to_lp / average_tvl) * (365 / days)

incentive_apr = (rewards_value / average_tvl) * (365 / days)

total_apr = fee_apr + incentive_apr

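(Here, days is the length of the lookback window, and average_tvl is measured over that same window. Incentives should be clearly labeled as non-sustainable unless proven otherwise.)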
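The formulas above translate directly into code. In this sketch the variable names follow the formulas, while the helper name `annualized_rate` and all input figures are made-up placeholders for a 30-day window.

```python
def annualized_rate(amount: float, average_tvl: float, days: int) -> float:
    """Simple (non-compounded) APR: amount earned over `days` on average_tvl."""
    if average_tvl <= 0 or days <= 0:
        raise ValueError("average_tvl and days must be positive")
    return (amount / average_tvl) * (365 / days)


# Placeholder inputs for a 30-day lookback window, in USD.
fees_paid_to_lp = 30_000.0
rewards_value = 90_000.0
average_tvl = 10_000_000.0
days = 30

fee_apr = annualized_rate(fees_paid_to_lp, average_tvl, days)
incentive_apr = annualized_rate(rewards_value, average_tvl, days)
total_apr = fee_apr + incentive_apr

print(f"fee APR:       {fee_apr:.2%}")
print(f"incentive APR: {incentive_apr:.2%}  (treat as non-sustainable)")
print(f"total APR:     {total_apr:.2%}")
```

With these inputs, three quarters of the headline APR comes from incentives, which is exactly the split the report should make visible.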

Yield quality table (what to report)

| Yield component | Source | Sustainability | What can break it |
|---|---|---|---|
| Fee APR | Trading fees, borrow interest | Medium–High (if demand persists) | Volume collapse, utilization drop, competition |
| Revenue share | Protocol revenue distribution | Medium–High | Governance changes, fee switch off |
| Incentive APR | Token emissions | Low–Medium | Reward price drop, emission end, dilution |
| “Points” | Off-chain program | Uncertain | Rule changes, token not launched |

The “real yield” test (agent decision rule)

A yield agent can implement an easy, explainable test:

A more rigorous version uses scenarios:

Then recompute expected realized APR and flag the fragility.
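One way such a test and its scenario version could look, as a sketch rather than a canonical rule: the test checks what share of total APR comes from fees, and the scenarios shock volume and reward-token price before recomputing APR. The 50% threshold, the scenario set, and the simplifying assumptions (fees scale linearly with volume, incentives scale linearly with reward price, TVL stays constant) are all hypothetical.

```python
def real_yield_share(fee_apr: float, incentive_apr: float) -> float:
    """Fraction of total APR that comes from fees rather than emissions."""
    total = fee_apr + incentive_apr
    return fee_apr / total if total > 0 else 0.0


def stressed_apr(fee_apr: float, incentive_apr: float,
                 volume_shock: float, reward_price_shock: float) -> float:
    """Recompute APR under fractional shocks (e.g. -0.5 means a 50% drop).

    Assumes fees scale with volume and incentives with reward-token price.
    """
    return (fee_apr * (1 + volume_shock)
            + incentive_apr * (1 + reward_price_shock))


# Hypothetical scenarios: (volume_shock, reward_price_shock).
SCENARIOS = {
    "base": (0.0, 0.0),
    "volume_halves": (-0.5, 0.0),
    "reward_token_down_80pct": (0.0, -0.8),
    "both": (-0.5, -0.8),
}

fee_apr, incentive_apr = 0.04, 0.12   # placeholder inputs
MIN_REAL_SHARE = 0.5                  # illustrative threshold

share = real_yield_share(fee_apr, incentive_apr)
fragile = share < MIN_REAL_SHARE
results = {name: stressed_apr(fee_apr, incentive_apr, *shocks)
           for name, shocks in SCENARIOS.items()}

print(f"real yield share: {share:.0%} -> {'fragile' if fragile else 'ok'}")
for name, apr in results.items():
    print(f"{name}: {apr:.2%}")
```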

Putting it all together: an agentic workflow you can implement

Here’s a practical build plan you can follow in stages:

1. Define the decision

- Are you screening protocols for investment, monitoring risk for treasury, or comparing pools for deployment?

2. Map the protocol mechanism

- Contracts, oracles, governance, upgradeability, revenue routing

3. Build the TVL pipeline

- Index balances and events

- Compute TVL and net flows

- Decompose by asset/pool/chain

4. Build the yield pipeline

- Identify fee sources and distributions

- Compute realized fee APR vs incentive APR

- Add risk adjustments (IL, liquidation)

5. Create the risk score

- Use a transparent rubric

- Attach evidence and “what would change the score”

6. Deploy monitoring

- Alerts for parameter changes, unusual flows, oracle deviations, governance actions

7. Generate a report

- A structured memo with charts, tables, and a clear conclusion

SimianX AI-style tip: keep outputs consistent across protocols with a fixed report template (same sections, same scoring rubric, same alert thresholds). This is how you turn analysis into a product, not a one-off notebook.

How do AI agents analyze DeFi protocol risks and TVL in practice?

They do it by combining deterministic on-chain measurements (balances, flows, revenue) with structured reasoning (mechanism mapping, dependency analysis, and explainable scoring). The key is separating data collection from interpretation: one agent gathers verified facts, another agent explains what those facts mean, and a third agent converts them into a risk grade with explicit assumptions. This reduces hallucinations and makes the results auditable.

Common failure modes (and how to harden your agents)

Even good agents can fail. Design defensively:

A simple safety rule: no single agent can “approve” a protocol. Approval requires agreement between (a) protocol mapper, (b) TVL analyst, and (c) risk scorer—plus a minimum evidence threshold.
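This rule is easy to encode as a gate. The sketch below assumes each agent emits a verdict with an approval flag and a count of supporting evidence items, and it interprets the minimum evidence threshold as a per-agent minimum. The `Verdict` type, the agent identifiers, and the threshold value are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Verdict:
    agent: str
    approve: bool
    evidence_count: int   # number of concrete signals cited by the agent


REQUIRED_AGENTS = frozenset({"protocol_mapper", "tvl_analyst", "risk_scorer"})
MIN_EVIDENCE = 3  # hypothetical per-agent threshold


def can_approve(verdicts: list[Verdict]) -> bool:
    """Approve only if every required agent approves with enough evidence."""
    by_agent = {v.agent: v for v in verdicts}
    if not REQUIRED_AGENTS.issubset(by_agent):
        return False  # a required agent has not reported at all
    return all(
        by_agent[name].approve and by_agent[name].evidence_count >= MIN_EVIDENCE
        for name in REQUIRED_AGENTS
    )


verdicts = [
    Verdict("protocol_mapper", True, 5),
    Verdict("tvl_analyst", True, 4),
    Verdict("risk_scorer", True, 2),   # approves, but with too little evidence
]
print(can_approve(verdicts))
```

Treating a missing or thinly supported verdict as a rejection means the gate fails closed when an agent stalls or returns an unsupported answer.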

FAQ: How AI Agents Analyze DeFi Protocol Risks, TVL, and Real Yield Rates

What is the best way to measure TVL quality, not just TVL size?

Look at TVL concentration, asset mix (stable vs volatile), bridged exposure, and retention after incentives drop. A protocol with slightly lower TVL but high retention and diversified deposits can be healthier than a high-TVL farm with mercenary capital.

How do you calculate real yield in DeFi when fee income is mixed with incentives?

Separate fee/interest/revenue distributions from emissions, then compute realized APR for each component over a lookback window. Treat incentives as fragile unless they are small or structurally tied to revenue.

How do AI agents detect “fake” or mercenary TVL?

They look for sudden inflows aligned with incentive changes, address concentration, rapid churn after reward adjustments, and looping patterns that inflate apparent deposits without adding durable users.

Are audits enough to reduce protocol risk scores?

Audits help, but they are not sufficient. Agents should also score upgradeability, admin privileges, oracle design, governance concentration, and operational controls (timelocks, emergency actions, signers).

Can AI agents give investment advice on which DeFi protocol is safest?

They can produce structured research and risk signals, but they should not replace human judgment. Use agents to reduce blind spots, document assumptions, and continuously monitor changing risks.

Conclusion

When AI agents analyze DeFi protocol risks, TVL, and real yield rates, the goal isn’t a magic “safe” label. It is an auditable research system that explains why a protocol looks healthy or fragile. The strongest setups decompose TVL into quality signals, decompose yield into real cashflow vs incentives, and score risk categories with evidence and scenario tests. If you want to operationalize this into a repeatable workflow, where multi-agent stages produce consistent memos, monitoring alerts, and clear decision trails, explore how SimianX AI structures agentic analysis and research pipelines.