AI Crypto Analysis: A Practical Workflow From Data to Decisions

Crypto markets run 24/7, narratives mutate hourly, and the data you need is scattered across exchanges, blockchains, derivatives venues, and social platforms. That is why a practical workflow from data to decisions matters: the goal isn’t to predict the future with a black box, but to build a repeatable research loop that turns raw inputs into defensible decisions. In this research-style guide, we’ll map a full workflow you can apply whether you’re a solo trader, a quant-curious investor, or a team building internal analytics. We’ll also reference SimianX AI as a practical way to structure analysis, document assumptions, and keep your decision trail consistent.

Why “workflow” beats “model” in crypto

Most crypto analysis failures don’t come from using the “wrong” algorithm. They come from:

A strong workflow makes your analysis auditable: you can explain what changed, why you acted, and what you will measure next.

The rest of this article is organized as a pipeline: Decision framing → Data mapping → Feature design → Modeling → Evaluation → Risk rules → Deployment & monitoring.

Step 1: Define the decision before you touch data

Before building any AI crypto analysis workflow, define the decision object. This forces clarity and prevents you from optimizing the wrong thing.

Ask these questions:

BTC, ETH, an alt basket, perps, options, or spot?

A decision template you can reuse

Write a one-paragraph “decision spec”:

Decision spec:

“I will decide whether to go long/short/flat on BTC-PERP for the next 4 hours. I will only trade when liquidity is above X, volatility is below Y, and signals agree across trend + flow + positioning. I will size positions based on predicted volatility and cap downside with a hard stop + time stop.”
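One way to keep a decision spec honest is to write it down as data rather than prose. The sketch below is a minimal illustration, not a prescribed schema: the class name `DecisionSpec`, its fields, and the placeholder thresholds standing in for X and Y are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DecisionSpec:
    instrument: str            # e.g. "BTC-PERP"
    horizon_hours: int         # decision horizon
    min_liquidity: float       # "X" in the spec (placeholder units)
    max_volatility: float      # "Y" in the spec (placeholder units)
    required_signals: tuple = ("trend", "flow", "positioning")

    def allows_trade(self, liquidity, volatility, directions):
        """directions maps signal name -> +1 (long), -1 (short), or 0 (flat)."""
        votes = [directions.get(name, 0) for name in self.required_signals]
        # Signals "agree" only if every required signal points the same non-flat way.
        signals_agree = votes[0] != 0 and all(v == votes[0] for v in votes)
        return (
            liquidity > self.min_liquidity
            and volatility < self.max_volatility
            and signals_agree
        )


# Hypothetical thresholds, chosen only to make the example concrete.
spec = DecisionSpec("BTC-PERP", horizon_hours=4,
                    min_liquidity=1_000_000, max_volatility=0.8)
print(spec.allows_trade(2_000_000, 0.5,
                        {"trend": 1, "flow": 1, "positioning": 1}))
```

Freezing the dataclass is a deliberate choice here: if the spec cannot be mutated mid-experiment, any change to it has to be an explicit, reviewable new version.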

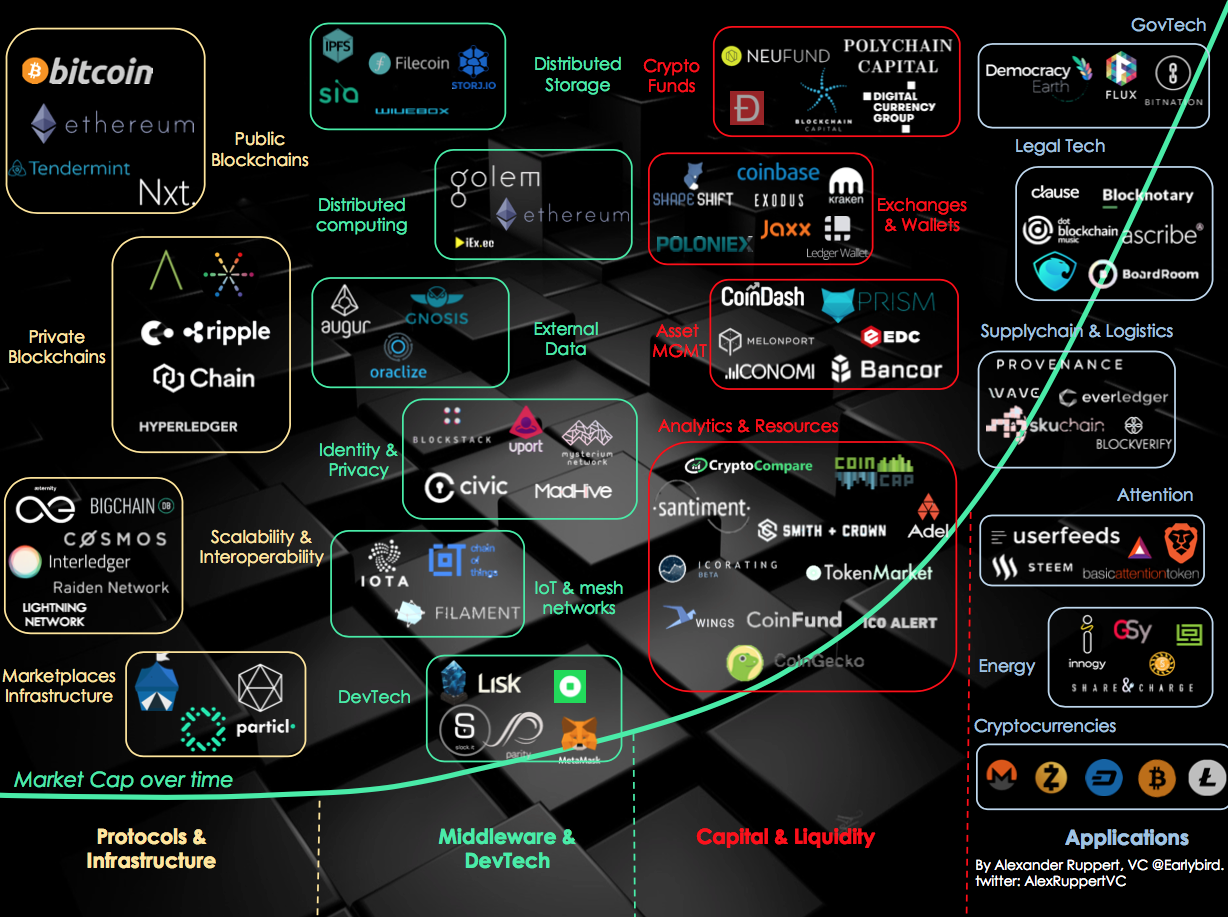

Step 2: Build a crypto data map (sources, cadence, pitfalls)

Crypto is multi-source by nature. A good workflow begins with a data map that lists what each dataset is supposed to represent—and what can go wrong.

Core data families

Data-map table (practical and brutally honest)

| Data source | What it can tell you | Common pitfalls | Guardrail |
|---|---|---|---|
| OHLCV | Trend, volatility regime | Exchange fragmentation, wicks, wash trading | Use consolidated feeds or consistent venue |
| Order book | Short-term pressure & liquidity | Spoofing, hidden liquidity, low depth on alts | Measure stability + depth over time |
| Funding & OI | Crowding, leverage, positioning | Venue differences, “OI up” can mean hedging | Normalize by volume + compare venues |
| On-chain flows | Supply movement, exchange pressure | Attribution errors, chain congestion events | Use multiple heuristics + avoid overconfidence |
| Social/news | Narrative shifts & reflexivity | Bots, coordinated campaigns, survivorship bias | Weight by source quality + detect spikes |

Research tip: Treat each source as a “sensor.” Your job is to detect whether the sensor is reliable today.
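A simple "sensor check" in the spirit of the guardrails above is to compare the same quantity across venues and flag outliers before trusting any single feed. This is only a sketch: the function name, the venue labels, and the 0.5% tolerance are illustrative assumptions, not recommended settings.

```python
from statistics import median


def flag_divergent_venues(prices_by_venue, max_rel_deviation=0.005):
    """Return venues whose price deviates from the cross-venue median
    by more than max_rel_deviation (as a fraction of the median)."""
    mid = median(prices_by_venue.values())
    return sorted(
        venue
        for venue, price in prices_by_venue.items()
        if abs(price - mid) / mid > max_rel_deviation
    )


# Hypothetical snapshot of the same instrument on three venues.
snapshot = {"venue_a": 100.0, "venue_b": 100.2, "venue_c": 103.0}
print(flag_divergent_venues(snapshot))
```

A flagged venue is not necessarily wrong; it is a prompt to check for a wick, an outage, or thin liquidity before that feed enters the feature pipeline.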

Step 3: Transform raw data into features you can explain

In crypto, “feature engineering” is not about stacking 200 indicators. It’s about encoding mechanisms.

Feature categories that tend to generalize better

1. Trend & regime features

- Returns over multiple horizons (e.g., 1h / 4h / 1d)

- Realized volatility, range expansion, breakout measures

2. Liquidity & microstructure

- Spread, depth, imbalance, volatility-of-liquidity

3. Positioning & leverage

- Funding z-scores, OI changes, basis, liquidation intensity

4. Flow & supply

- Exchange net inflow/outflow, stablecoin issuance/flows

5. Narratives

- News velocity, sentiment dispersion, topic clustering (not just “positive/negative”)
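Several of the features listed above reduce to a few lines of arithmetic. The sketch below uses only the standard library and assumes evenly spaced bars; the function names and window lengths are illustrative. Each function looks only at data up to the latest bar, and the z-score deliberately excludes the latest value from its own baseline.

```python
import math
from statistics import mean, pstdev


def log_return(prices, horizon):
    """Log return over the last `horizon` bars."""
    if len(prices) <= horizon:
        raise ValueError("not enough history for this horizon")
    return math.log(prices[-1] / prices[-1 - horizon])


def realized_volatility(prices, window):
    """Population std-dev of 1-bar log returns over the last `window` bars."""
    if len(prices) < window + 1:
        raise ValueError("not enough history for this window")
    recent = prices[-(window + 1):]
    returns = [math.log(b / a) for a, b in zip(recent, recent[1:])]
    return pstdev(returns)


def zscore_latest(values, window):
    """Z-score of the latest value against the preceding `window` values."""
    if len(values) < window + 1:
        raise ValueError("not enough history for this window")
    history = values[-(window + 1):-1]   # baseline excludes the latest point
    spread = pstdev(history)
    if spread == 0:
        return 0.0
    return (values[-1] - mean(history)) / spread


prices = [100.0, 101.0, 102.0, 103.0, 104.0]
funding = [1.0, 2.0, 3.0, 4.0, 5.0, 10.0]   # made-up funding readings
print(log_return(prices, 4), realized_volatility(prices, 4), zscore_latest(funding, 5))
```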

A feature checklist (fast sanity filter)

If you can’t explain a feature, you can’t debug it when it breaks.

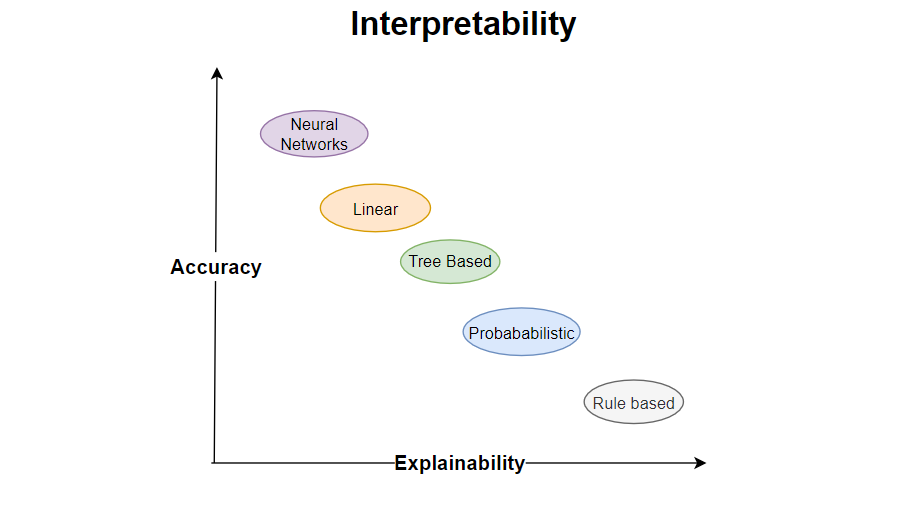

Step 4: Choose a model that matches the job (and the data reality)

Different decisions require different modeling approaches. In many crypto workflows, the best “model” is a scoring system + gating rules—and only later a machine learning layer.

Model options (ordered from robust to fragile)

Research principle: Start with the simplest approach that gives you measurable lift over a naive baseline.
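"Lift over a naive baseline" can be made concrete with a directional hit rate. In this sketch the naive baseline is persistence (the next period's direction is assumed to equal the last one); that choice, the function names, and the toy numbers are assumptions for illustration only.

```python
def sign(x):
    return (x > 0) - (x < 0)


def hit_rate(predictions, realized):
    """Share of non-zero realized moves whose direction was predicted correctly."""
    pairs = [(p, r) for p, r in zip(predictions, realized) if r != 0]
    if not pairs:
        raise ValueError("no non-zero realized returns to score against")
    return sum(1 for p, r in pairs if sign(p) == sign(r)) / len(pairs)


def lift_over_persistence(model_preds, returns):
    """model_preds[i] is the forecast for returns[i + 1]."""
    realized = returns[1:]
    baseline_preds = returns[:-1]          # persistence: repeat the last direction
    return hit_rate(model_preds, realized) - hit_rate(baseline_preds, realized)


returns = [0.01, -0.02, 0.03, -0.01, 0.02]   # toy series that flips every bar
model_preds = [-1, 1, -1, -1]                # toy model forecasts
print(lift_over_persistence(model_preds, returns))
```

A positive lift on a toy series proves nothing by itself; the point is that every rung of the model ladder gets scored against the same cheap baseline.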

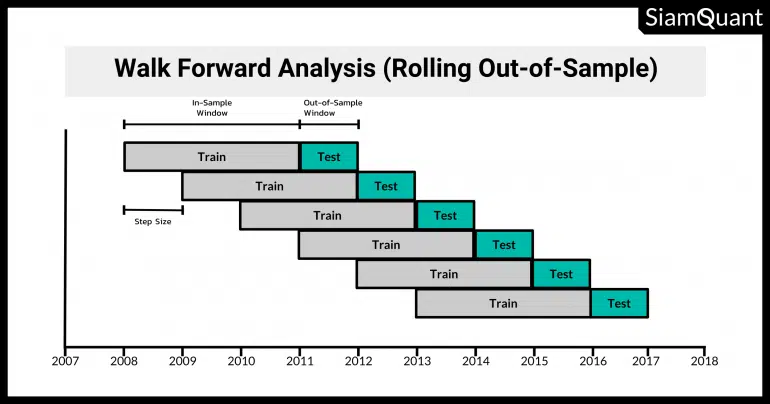

Step 5: Backtest like a grown-up (leakage-proof evaluation)

The most common failure in AI crypto analysis is believing a backtest that isn’t faithful to real trading.

The minimum viable evaluation protocol

Key metrics (don’t worship Sharpe alone)

Measure both prediction quality and trading outcomes:

Evaluation rubric table (quick scoring)

| Dimension | What “good” looks like | Red flag |
|---|---|---|
| Leakage control | Walk-forward, no lookahead | Random split, future aggregates |
| Costs realism | Fees + slippage + funding | “Paper alpha” disappears live |
| Regime robustness | Works in multiple regimes | Only works in one month |
| Explainability | Clear driver signals | Untraceable feature soup |
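Two rows of the rubric, leakage control and costs realism, translate directly into code. The sketch below shows a rolling walk-forward splitter with an optional embargo gap, plus a per-period net return that subtracts fees, slippage, and funding. All names and rates are illustrative assumptions, and the funding sign convention (longs pay when the rate is positive) should be checked against your venue.

```python
def walk_forward_splits(n_samples, train_size, test_size, embargo=0):
    """Yield (train_range, test_range) pairs where training data always
    precedes test data, separated by `embargo` skipped samples."""
    start = 0
    while start + train_size + embargo + test_size <= n_samples:
        train_end = start + train_size
        test_start = train_end + embargo
        yield range(start, train_end), range(test_start, test_start + test_size)
        start += test_size   # roll the window forward by one test block


def net_return(gross_return, position, prev_position,
               fee_rate, slippage_rate, funding_rate):
    """Per-period return after costs; position is signed (+long / -short)."""
    turnover = abs(position - prev_position)
    trading_cost = turnover * (fee_rate + slippage_rate)
    funding_cost = position * funding_rate   # assumed: longs pay positive funding
    return position * gross_return - trading_cost - funding_cost


for train, test in walk_forward_splits(10, train_size=4, test_size=2, embargo=1):
    print(list(train), list(test))
```

The embargo exists because labels built over a horizon (for example, a 4-hour forward return) overlap the start of the next block; skipping a few samples keeps that overlap out of the test set.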

Step 6: Convert signals into decisions (the missing layer)

Signals aren’t decisions. A professional workflow adds a decision layer that answers: When do we act, how much, and when do we stop?

A simple decision architecture

Think in three layers:

1. Signal layer: trend, flow, positioning, narrative scores

2. Gating layer: “trade only if conditions are safe”

3. Execution layer: sizing, entries, exits, failsafes

Here’s a practical scoring approach:

Signal score example (conceptual):

TrendScore (0–1)

FlowScore (0–1)

PositioningScore (0–1)

RiskPenalty (0–1)

DecisionScore = 0.35 × TrendScore + 0.30 × FlowScore + 0.25 × PositioningScore − 0.40 × RiskPenalty

Then apply gates:

DecisionScore > 0.6
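The scoring formula and threshold above can be sketched as a small function. The weights and the 0.6 threshold come from the example; the function names and the `extra_gates` parameter (a hook for any additional boolean conditions you define) are assumptions for illustration.

```python
SCORE_THRESHOLD = 0.6


def decision_score(trend, flow, positioning, risk_penalty):
    """Weighted score from the conceptual example; every input must lie in [0, 1]."""
    inputs = {"trend": trend, "flow": flow,
              "positioning": positioning, "risk_penalty": risk_penalty}
    for name, value in inputs.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {value}")
    return 0.35 * trend + 0.30 * flow + 0.25 * positioning - 0.40 * risk_penalty


def passes_gates(score, extra_gates=()):
    """Act only if the score clears the threshold and every extra gate is True."""
    return score > SCORE_THRESHOLD and all(extra_gates)


score = decision_score(trend=0.9, flow=0.8, positioning=0.8, risk_penalty=0.1)
print(round(score, 3), passes_gates(score))
```

Note that with these weights the score tops out at 0.9 (all signals at 1, no risk penalty), so a 0.6 threshold demands broad agreement rather than one strong signal.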

A practical numbered workflow (end-to-end)

1. Define the decision spec (instrument, horizon, constraints)

2. Pull data with timestamp discipline (what was known then)

3. Clean & normalize (venue consistency, outliers, missingness)

4. Engineer explainable features (mechanism-first)

5. Train baseline + model ladder (incremental complexity)

6. Walk-forward evaluation with costs and funding

7. Build decision rules (scores + gates + sizing)

8. Paper trade + shadow deploy (monitoring before capital)

9. Go live with drift checks + kill switches

Step 7: Risk controls that belong inside the workflow (not after it)

Crypto risk is not just volatility—it’s liquidity shocks, liquidation cascades, and narrative-driven gaps. Your workflow should encode risk controls the same way it encodes signals.
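As one illustration of encoding risk inside the workflow, the sketch below sizes a position from predicted volatility and checks a hard stop plus a time stop, echoing the decision spec from Step 1. The function names, the risk fraction, the leverage cap, and the stop levels are hypothetical values chosen for the example.

```python
def volatility_scaled_size(equity, risk_fraction, predicted_vol, max_leverage):
    """Notional size such that a one-sigma move costs roughly
    risk_fraction of equity, capped at max_leverage times equity."""
    if predicted_vol <= 0:
        raise ValueError("predicted_vol must be positive")
    notional = equity * risk_fraction / predicted_vol
    return min(notional, equity * max_leverage)


def should_exit(entry_price, current_price, side, hours_held, stop_pct, max_hours):
    """side is +1 for long, -1 for short. Exit on hard stop or time stop."""
    pnl_pct = side * (current_price - entry_price) / entry_price
    hit_hard_stop = pnl_pct <= -stop_pct
    hit_time_stop = hours_held >= max_hours
    return hit_hard_stop or hit_time_stop


size = volatility_scaled_size(equity=10_000, risk_fraction=0.01,
                              predicted_vol=0.02, max_leverage=3)
print(size, should_exit(100.0, 97.0, side=1, hours_held=1, stop_pct=0.02, max_hours=4))
```

Because size shrinks as predicted volatility rises, the same rule automatically trades smaller in exactly the conditions where liquidation cascades are more likely.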

Core risk controls

A strategy that “works” only when nothing goes wrong is not a strategy—it’s a bet.

Risk rule examples (copy/paste style)

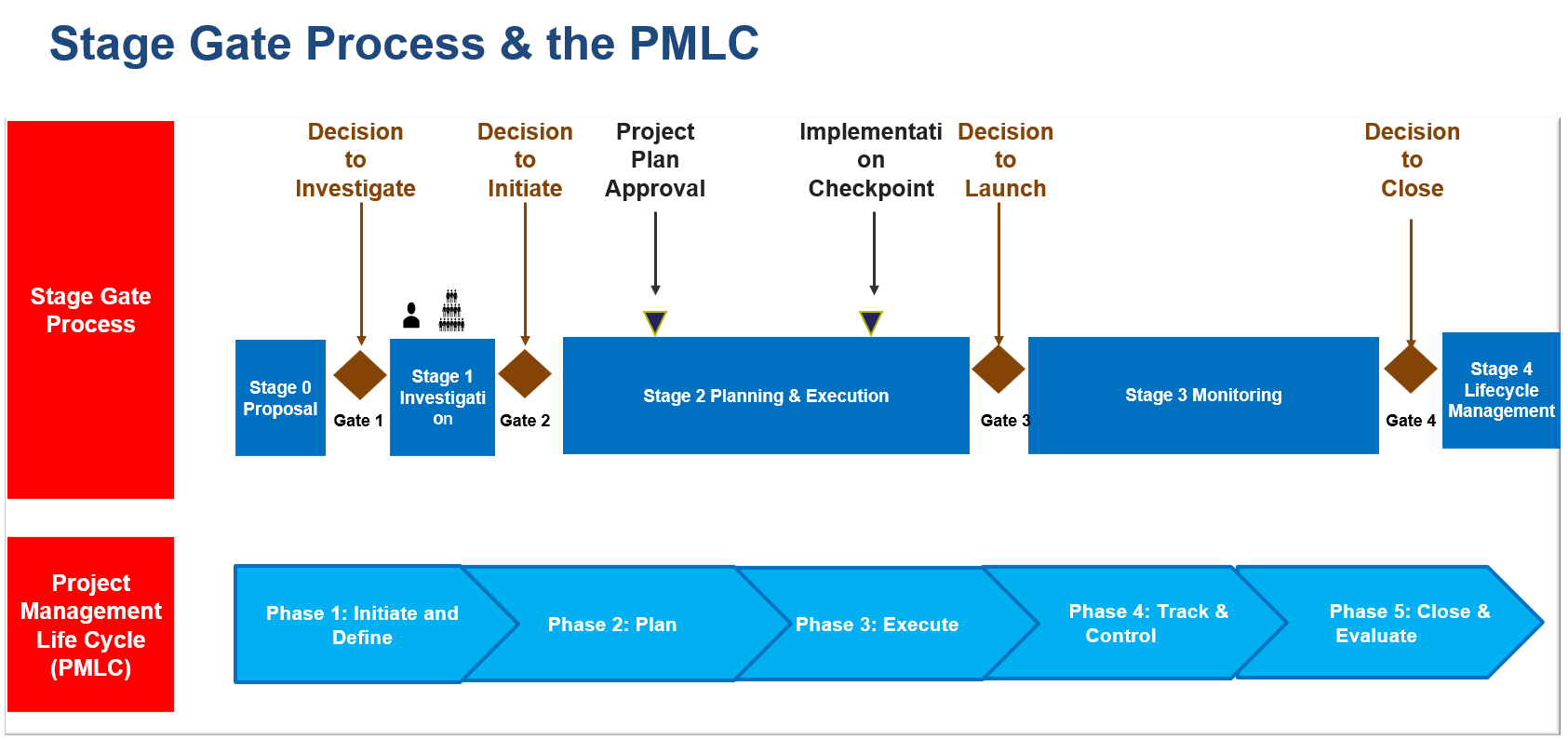

Step 8: Monitoring and model governance (because regimes change)

Deployment is not the end. In crypto, it’s the beginning of a new research loop.

Monitor three kinds of drift:

1. Data drift: features change distribution (new regime)

2. Performance drift: hit rate/expectancy decays

3. Behavior drift: model takes different trades than intended
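Data drift, the first item above, can be quantified in many ways. One common choice is the Population Stability Index (PSI), sketched here with the standard library. The equal-width binning, the clamping of out-of-range values into the edge bins, and the small `eps` floor are implementation assumptions for this example; a commonly quoted rule of thumb treats PSI above roughly 0.25 as a significant shift, but any threshold should be calibrated on your own data.

```python
import math


def population_stability_index(reference, current, n_bins=10, eps=1e-6):
    """PSI between a reference sample and a current sample of one feature."""
    lo, hi = min(reference), max(reference)
    if hi == lo:
        raise ValueError("reference sample has no spread")
    width = (hi - lo) / n_bins

    def bucket_shares(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), n_bins - 1)   # clamp outliers into edge bins
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / len(values), eps) for c in counts]

    ref_shares = bucket_shares(reference)
    cur_shares = bucket_shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_shares, cur_shares))


reference = [float(x) for x in range(100)]
shifted = [x + 50.0 for x in reference]      # synthetic regime shift
print(population_stability_index(reference, reference),
      population_stability_index(reference, shifted))
```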

A monitoring checklist

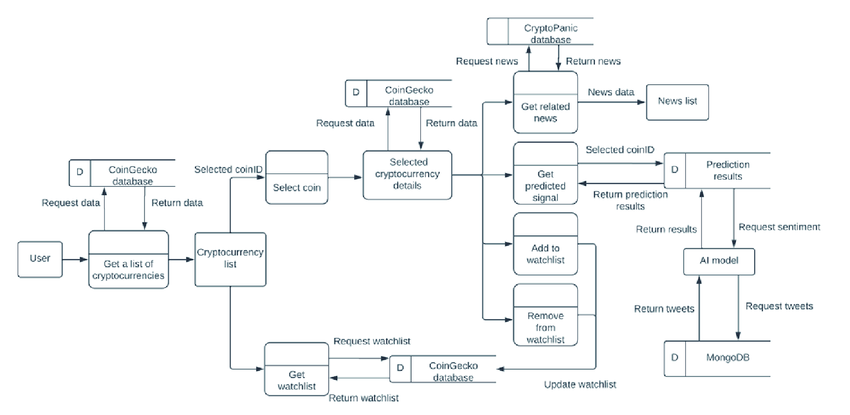

Where SimianX AI fits in a practical workflow

If your biggest challenge is consistency—capturing the same set of signals, documenting assumptions, and producing decision-ready summaries—tools can help.

SimianX AI is useful in this workflow in three practical ways:

For teams or solo researchers who want a repeatable process, you can use SimianX AI as the “analysis notebook” layer—then apply your own risk rules and execution constraints on top.

A worked example: turning a narrative spike into a decision

Let’s walk through a realistic scenario.

Scenario: BTC is trending up, social sentiment spikes after a major headline, funding rises quickly, and order book depth thins.

Step-by-step interpretation

Decision layer outcome (example):

This is “data to decisions” in practice: the model doesn’t just say “BUY”—it outputs a conditional plan.

How do you build an AI crypto analysis workflow from data to decisions?

You build it by treating the workflow as a research system, not a prediction contest.

A high-quality workflow:

If you do those seven things well, the specific model matters far less than most people think.

FAQ About AI Crypto Analysis: A Practical Workflow From Data to Decisions

How do you build an AI crypto trading model without overfitting?

Start with a simple baseline and add complexity only when it improves walk-forward results across multiple regimes. Use time-based splits, include costs/funding, and run ablations to confirm which features truly add value.

What is a leakage-proof crypto backtest?

It’s a backtest where every feature, label, and trading decision uses only information that would have been available at that timestamp. No random shuffles, no future aggregates, and realistic assumptions for execution, fees, and latency.
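The "only information available at that timestamp" rule is essentially an as-of lookup. The sketch below uses the standard-library `bisect` module; the function name and the `publish_lag` parameter (how long after its own timestamp a data point actually becomes visible) are assumptions for illustration.

```python
from bisect import bisect_right


def as_of(timestamps, values, query_ts, publish_lag=0):
    """Return the latest value that was visible at query_ts, or None.

    timestamps must be sorted ascending. A point stamped t is treated
    as visible only from t + publish_lag onward.
    """
    idx = bisect_right(timestamps, query_ts - publish_lag)
    return values[idx - 1] if idx > 0 else None


ts = [100, 200, 300]
vals = ["a", "b", "c"]
print(as_of(ts, vals, 250), as_of(ts, vals, 300, publish_lag=50), as_of(ts, vals, 50))
```

Building every feature through a lookup like this makes lookahead a structural impossibility rather than something you have to remember to avoid.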

What is the best way to combine on-chain and sentiment data?

Use them as complementary sensors: on-chain for supply/flow context and sentiment for narrative velocity. Don’t let either dominate; apply gating rules and require confirmation from price/liquidity conditions before acting.

Can AI replace discretionary crypto research?

It can replace inconsistent research routines, but not judgment. The best use is as a disciplined loop for hypothesis, evidence, and monitoring—while humans control constraints, risk, and accountability.

How often should you retrain models in crypto?

Retrain based on drift signals, not a calendar. If feature distributions or strategy performance changes meaningfully, retraining (or re-weighting) may be justified—otherwise you risk chasing noise.

Conclusion

A reliable AI crypto analysis workflow is less about finding a magic model and more about building a system: define the decision, map data to mechanisms, engineer explainable features, evaluate without leakage, and translate signals into gated actions with embedded risk controls. Once that loop is in place, you can iterate safely, improving parts of the pipeline without breaking the whole.

If you want a more consistent analysis routine and a clearer decision trail, explore SimianX AI as a structured way to run, document, and refine your crypto research workflow.