AI Monitoring for DeFi Risk Mitigation Through Analysis

AI monitoring for DeFi risk mitigation is no longer “nice to have”—it’s the difference between controlled drawdowns and waking up to a liquidation cascade. DeFi runs 24/7, risk is composable, and failures propagate fast: a price oracle hiccup becomes a bad debt event, which becomes a liquidity crunch, which becomes forced selling. This research outlines a practical, engineering-style framework to monitor DeFi continuously, detect emerging threats early, and mitigate risk through data-driven analysis—while staying explainable and operational. Along the way, we’ll reference how SimianX AI can help teams build repeatable on-chain monitoring workflows with less manual overhead.

The DeFi Risk Landscape: What Actually Breaks (and Why AI Helps)

DeFi risk is rarely a single-point failure. It’s a network of dependencies: contracts, oracles, liquidity venues, bridges, governance, and incentives. Traditional “research” (reading docs, checking TVL, scanning audit reports) is necessary, but insufficient for real-time defense.

AI helps because it can:

Here’s a concrete taxonomy of risks you can actually monitor.

| Risk Category | Typical Failure Mode | What You Can Monitor (Signals) |
|---|---|---|
| Smart contract | Re-entrancy, access control bug, logic flaw | Unusual function-call patterns, permission changes, sudden admin actions |
| Oracle | Stale price, manipulation, feed outage | Oracle deviation vs. DEX TWAP, update frequency gaps, volatility spikes |
| Liquidity | Depth collapse, withdrawal rush | Slippage at fixed size, LP outflows, liquidity concentration |
| Leverage / liquidation | Cascade liquidations | Borrow utilization, health-factor distribution, liquidation volume |
| Bridge / cross-chain | Exploit, halt, depeg | Bridge inflow/outflow anomalies, validator changes, wrapped asset divergence |
| Governance | Malicious proposal, parameter rug | Proposal content changes, vote concentration, time-to-execution windows |
| Incentives | Emissions-driven “fake yield” | Fees vs emissions share, mercenary liquidity ratio, reward schedule changes |

The most dangerous events are rarely “unknown unknowns.” They’re known failure modes that arrive faster than humans can track—especially when signals are scattered across contracts and chains.

Data You Need for AI-Driven DeFi Monitoring

A monitoring system is only as good as its data. The goal is to build a pipeline that’s real-time enough to act, clean enough to model, and auditable enough to explain.

Core on-chain data sources

Off-chain and “semi-off-chain” sources (optional but useful)

A practical approach is to standardize all raw inputs into:

- Entities: protocol, contract, pool, asset, wallet, chain

- Event types: swap, borrow, repay, liquidation, admin_change, proposal_created

- Time windows (e.g., 5m, 1h, 1d)
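One way to enforce this standardization is a single normalized event record plus a helper that assigns each event to its time bucket. The sketch below is an illustration, not a reference schema: the `NormalizedEvent` fields, the `attributes` bag, and the window table are hypothetical choices built from the entity, event, and window lists above.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical canonical vocabularies taken from the lists above; extend as needed.
EVENT_TYPES = {"swap", "borrow", "repay", "liquidation", "admin_change", "proposal_created"}
WINDOWS_SECONDS = {"5m": 300, "1h": 3600, "1d": 86400}


@dataclass(frozen=True)
class NormalizedEvent:
    chain: str
    protocol: str
    contract: str
    event_type: str
    timestamp: datetime
    tx_hash: str
    attributes: dict = field(default_factory=dict)  # amounts, pool, wallet, etc.

    def __post_init__(self):
        # Reject unknown event types at ingestion instead of at modeling time.
        if self.event_type not in EVENT_TYPES:
            raise ValueError(f"unknown event_type: {self.event_type}")


def window_start(ts: datetime, window: str) -> datetime:
    """Floor a timezone-aware timestamp to the start of its bucket for `window`."""
    size = WINDOWS_SECONDS[window]
    epoch = int(ts.timestamp())
    return datetime.fromtimestamp(epoch - epoch % size, tz=timezone.utc)
```

Validating the event type in `__post_init__` means a malformed indexer row fails loudly at ingestion, which keeps later features auditable.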

Feature Engineering: Turning On-Chain Activity Into Risk Signals

Models don’t understand “risk.” They understand patterns. Feature engineering is how you translate messy on-chain reality into measurable signals.

High-signal feature families (with examples)

1) Liquidity fragility

depth_1pct: liquidity available within 1% price impact

slippage_$100k: expected slippage for a fixed trade size

lp_outflow_rate: change in LP supply per hour/day

liquidity_concentration: % liquidity held by top LP wallets
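The liquidity-fragility features can be computed directly from pool state. The sketch below assumes a constant-product (x*y=k) pool; concentrated-liquidity or stableswap pools need different math. The function names, the 0.3% fee default, and the "top N wallets" cutoff are assumptions for illustration.

```python
import math


def slippage_for_size(reserve_base, reserve_quote, trade_quote, fee=0.003):
    """Slippage vs. spot when spending `trade_quote` to buy base in an x*y=k pool."""
    spot = reserve_quote / reserve_base
    effective_in = trade_quote * (1 - fee)
    base_out = reserve_base * effective_in / (reserve_quote + effective_in)
    execution_price = trade_quote / base_out
    return execution_price / spot - 1


def depth_within(reserve_quote, price_impact=0.01):
    """Quote amount that moves the marginal price up by `price_impact` (fees ignored).
    In x*y=k the post-trade price is (y + dy)^2 / k, so dy = y * (sqrt(1 + impact) - 1)."""
    return reserve_quote * (math.sqrt(1 + price_impact) - 1)


def lp_outflow_rate(lp_supply_prev, lp_supply_now, hours):
    """Fraction of LP supply withdrawn per hour."""
    return (lp_supply_prev - lp_supply_now) / lp_supply_prev / hours


def liquidity_concentration(lp_balances, top_n=5):
    """Share of LP supply held by the `top_n` largest wallets."""
    total = sum(lp_balances)
    top = sorted(lp_balances, reverse=True)[:top_n]
    return sum(top) / total if total else 0.0
```

For example, with 1,000 base and 2,000,000 quote (spot 2,000) and no fee, a 100,000 quote trade executes at 2,100, so `slippage_for_size` returns 0.05.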

2) Oracle divergence

oracle_minus_twap: difference between oracle price and DEX TWAP

stale_oracle_flag: oracle updates missing beyond threshold

jump_size: largest single update in a time window
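A minimal sketch of the oracle-divergence features, assuming you already have oracle update timestamps and a series of DEX price observations. The TWAP here weights each observed price by how long it stayed in effect. Units (seconds) and function signatures are illustrative assumptions.

```python
def twap(observations):
    """Time-weighted average price from (timestamp, price) pairs sorted by time.
    Needs at least two observations; each price is weighted by its duration."""
    total_time = observations[-1][0] - observations[0][0]
    weighted = sum(
        price * (t_next - t)
        for (t, price), (t_next, _) in zip(observations, observations[1:])
    )
    return weighted / total_time


def oracle_minus_twap(oracle_price, twap_price):
    """Relative deviation of the oracle price from the DEX TWAP."""
    return (oracle_price - twap_price) / twap_price


def stale_oracle_flag(update_times, now, max_age_seconds):
    """True if the latest oracle update is older than the allowed age."""
    return (now - max(update_times)) > max_age_seconds


def jump_size(prices):
    """Largest absolute relative change between consecutive oracle updates."""
    return max(abs(b / a - 1) for a, b in zip(prices, prices[1:]))
```

Comparing against a TWAP instead of a spot DEX price makes the divergence signal harder to trigger with a single manipulated block.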

3) Leverage & liquidation pressure

utilization = borrows / supply

hf_distribution: histogram of user health factors (or proxy)

liq_volume_1h: liquidation volume in last hour

collateral_concentration: reliance on one collateral asset
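The leverage features reduce to a few ratios once positions are indexed. The health-factor formula below follows the Aave-style convention (risk-adjusted collateral divided by debt, liquidatable below 1). That is an assumption; other protocols define their own proxy. The bucket edges are also hypothetical.

```python
from collections import Counter


def utilization(total_borrows, total_supply):
    return total_borrows / total_supply if total_supply else 0.0


def health_factor(collateral_value, liquidation_threshold, debt_value):
    """Aave-style proxy (assumed): HF < 1 means the position can be liquidated."""
    if debt_value == 0:
        return float("inf")
    return collateral_value * liquidation_threshold / debt_value


def hf_distribution(health_factors, edges=(1.0, 1.05, 1.1, 1.25, 1.5, 2.0)):
    """Count positions per HF bucket; mass piling up just above 1.0 signals cascade risk."""
    counts = Counter()
    for hf in health_factors:
        label = next((f"<{e}" for e in edges if hf < e), f">={edges[-1]}")
        counts[label] += 1
    return counts


def collateral_concentration(collateral_by_asset):
    """Share of total collateral value held in the single largest asset."""
    total = sum(collateral_by_asset.values())
    return max(collateral_by_asset.values()) / total if total else 0.0
```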

4) Protocol control & governance risk

admin_tx_rate: frequency of privileged transactions

permission_surface: number of roles/owners and their change frequency

vote_concentration: Gini coefficient of voting power
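The Gini coefficient of voting power is computed from sorted balances: 0 means perfectly equal voting power, and (n-1)/n means one holder has everything. The `admin_tx_rate` helper is a hypothetical trailing-window count of privileged transactions.

```python
def gini(values):
    """Gini coefficient of non-negative values (e.g., voting power per address)."""
    xs = sorted(values)
    n = len(xs)
    total = sum(xs)
    if n == 0 or total == 0:
        return 0.0
    # Standard formula on ascending-sorted data with 1-based ranks.
    weighted = sum(i * x for i, x in enumerate(xs, start=1))
    return 2 * weighted / (n * total) - (n + 1) / n


def admin_tx_rate(admin_tx_timestamps, now, window_seconds=86_400):
    """Number of privileged transactions in the trailing window (now - window, now]."""
    return sum(1 for t in admin_tx_timestamps if now - window_seconds < t <= now)
```

A sudden rise in either number (voting power consolidating, or a burst of admin calls) is worth an alert even when prices look calm.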

5) Contagion & dependency exposure

shared_collateral_ratio: overlap of collateral across protocols

bridge_dependency_score: reliance on wrapped assets/bridges

counterparty_graph_centrality: how central a protocol is in flow networks
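Two of the dependency features can be approximated from collateral composition alone. The definitions below are hypothetical simplifications: shared collateral is measured as the share of protocol A's collateral value in assets protocol B also accepts, and bridge dependency as the share held in assets you tag as bridged or wrapped.

```python
def shared_collateral_ratio(collateral_a, collateral_b):
    """Fraction of protocol A's collateral value in assets that protocol B also holds."""
    total = sum(collateral_a.values())
    shared = sum(v for asset, v in collateral_a.items() if asset in collateral_b)
    return shared / total if total else 0.0


def bridge_dependency_score(collateral, bridged_assets):
    """Fraction of collateral value held in bridged or wrapped assets."""
    total = sum(collateral.values())
    bridged = sum(v for asset, v in collateral.items() if asset in bridged_assets)
    return bridged / total if total else 0.0
```

High overlap means a shock to one shared asset hits both protocols at once, which is exactly the contagion path these features are meant to surface.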

A simple but effective technique is to compute rolling z-scores and robust statistics:

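robust_z = (x - median) / MAD, where MAD is the median absolute deviation over the window

Compute it over several windows to catch both spikes (5m) and drifts (7d).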
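A minimal rolling implementation of the robust z-score above, using a fixed-size buffer of recent observations. It scores each new value against the window *before* adding it, so a spike cannot mask itself. Some implementations scale MAD by 1.4826 to make it comparable to a standard deviation; this sketch keeps the unscaled formula. Running one detector with a short window and another with a long window covers spikes and drifts respectively.

```python
import math
from collections import deque
from statistics import median


class RollingRobustZ:
    """robust_z = (x - median) / MAD over the last `size` observations."""

    def __init__(self, size):
        self.buffer = deque(maxlen=size)

    def update(self, x):
        z = None  # not enough history yet
        if len(self.buffer) >= 3:
            med = median(self.buffer)
            mad = median(abs(v - med) for v in self.buffer)
            if mad == 0:
                # Flat history: any move away from the median is maximally unusual.
                z = 0.0 if x == med else math.copysign(math.inf, x - med)
            else:
                z = (x - med) / mad
        self.buffer.append(x)
        return z
```

For example, after feeding `[10, 11, 9, 10, 10, 11, 9, 10]`, the median is 10 and MAD is 0.5, so a new value of 30 scores 40.0.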

Practical “risk signal” checklist (human-readable)

How does AI monitoring for DeFi risk mitigation work in practice?

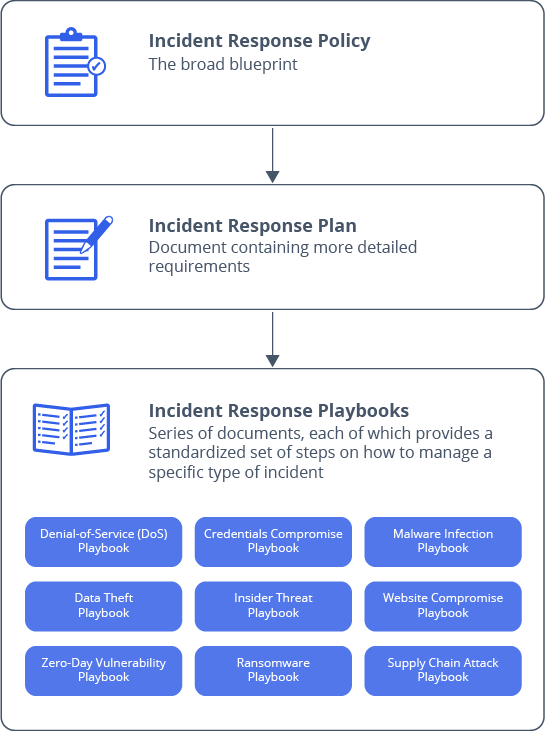

Treat it like an incident-response loop, not a prediction contest. The job is early detection + interpretable diagnosis + disciplined action.

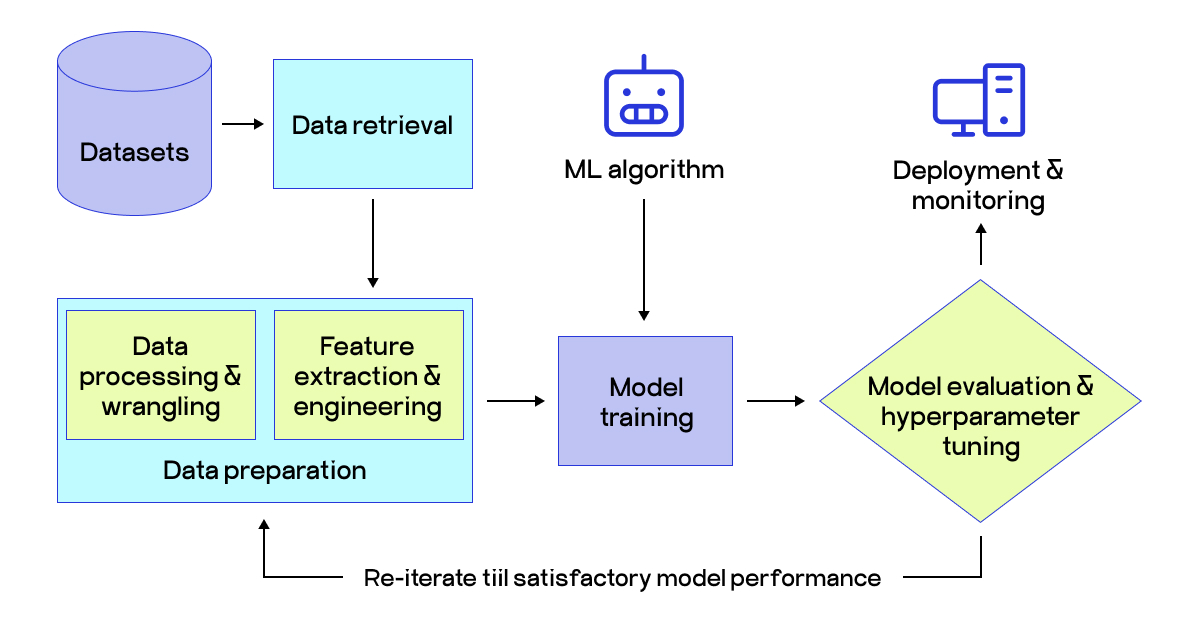

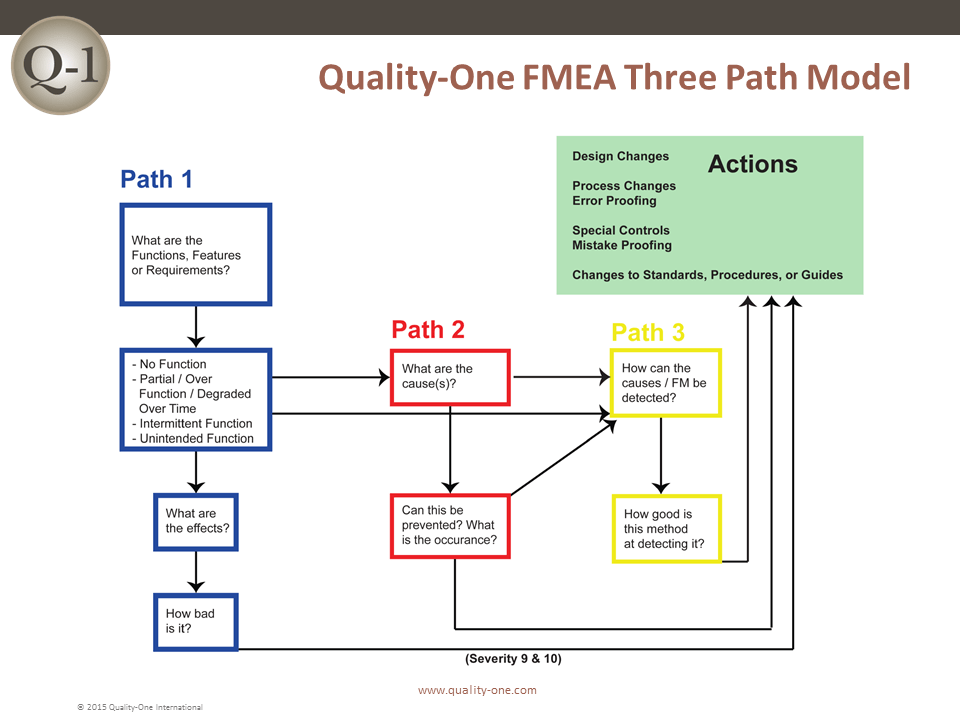

A 4D workflow: Detect → Diagnose → Decide → Document

1. Detect (machine-first)

- Streaming anomaly detection on key features

- Threshold alerts for known failure modes (e.g., oracle staleness)

- Change-point detection for structural shifts (liquidity regime change)

2. Diagnose (human + agent)

- Identify which signals drove the alert (top feature attributions)

- Pull supporting evidence: tx hashes, contract calls, parameter diffs

- Classify the event: oracle issue vs liquidity drain vs admin event

3. Decide (rules + risk budget)

- Apply playbooks: reduce exposure, hedge, pause, rotate collateral

- Position sizing rules: cap exposure when uncertainty rises

- Escalate if privileged control is involved

4. Document (audit trail)

- Store alert context, evidence, decision, and outcome

- Track false positives and missed events

- Update thresholds and features
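The four steps can be wired into one loop. The sketch below is deliberately simple and every name in it is hypothetical: detection is a z-score threshold, diagnosis ranks the triggering features and classifies by feature prefix, the decision is a playbook lookup, and documentation appends a JSON record to an audit log.

```python
import json
from dataclasses import dataclass, asdict, field

# Hypothetical playbook map: event class -> predefined action.
PLAYBOOKS = {
    "oracle_issue": "reduce_exposure",
    "liquidity_drain": "reduce_exposure_and_hedge",
    "admin_event": "escalate",
}


@dataclass
class AlertRecord:
    pool: str
    drivers: list          # features that triggered, strongest first
    event_class: str
    action: str
    evidence: list = field(default_factory=list)  # tx hashes, parameter diffs
    outcome: str = "pending"  # later set to e.g. "true_positive" / "false_positive"


def detect(z_scores, threshold=4.0):
    return {name: z for name, z in z_scores.items() if abs(z) >= threshold}


def diagnose(triggered):
    """Rank drivers by |z| and classify with crude prefix rules (privileged control first)."""
    drivers = sorted(triggered, key=lambda n: abs(triggered[n]), reverse=True)
    if any(d.startswith("admin") for d in drivers):
        return drivers, "admin_event"
    if any(d.startswith("oracle") for d in drivers):
        return drivers, "oracle_issue"
    return drivers, "liquidity_drain"


def run_cycle(pool, z_scores, evidence, audit_log):
    triggered = detect(z_scores)                                   # Detect
    if not triggered:
        return None
    drivers, event_class = diagnose(triggered)                     # Diagnose
    record = AlertRecord(pool, drivers, event_class,
                         PLAYBOOKS[event_class], evidence)         # Decide
    audit_log.append(json.dumps(asdict(record)))                   # Document
    return record
```

Storing the `outcome` field alongside each decision is what later makes it possible to count false positives and missed events.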

The goal isn’t “perfect prediction.” It’s measurable reduction in loss severity and faster response with fewer blind spots.

What models work best for DeFi anomaly detection?

Most teams start with a layered approach:

1. Unsupervised anomaly detection (no labels needed)

- Isolation Forest, robust z-score ensembles

- Autoencoders on feature vectors

- Density models (watch out for drift)

2. Supervised classification (where labeled incidents exist)

- Train labels like oracle_attack, liquidity_rug, governance_risk_spike

- Use calibrated probabilities, not raw scores

3. Graph-based contagion analysis

- Build a graph of assets, pools, wallets, and protocols

- Detect “stress propagation” using flow anomalies and centrality shifts
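Centrality shifts can be checked without a graph library. This sketch uses weighted degree centrality ("strength": each node's share of total flow volume) on two snapshots of a flow graph and flags nodes whose share moved by more than a threshold. The choice of degree centrality and the `min_shift` value are assumptions; PageRank or betweenness are common alternatives.

```python
from collections import defaultdict


def flow_centrality(edges):
    """Weighted degree centrality: each node's share of total flow volume.
    `edges` is an iterable of (source, target, volume); shares sum to 1."""
    strength = defaultdict(float)
    total = 0.0
    for src, dst, volume in edges:
        strength[src] += volume
        strength[dst] += volume
        total += volume
    return {node: s / (2 * total) for node, s in strength.items()} if total else {}


def centrality_shifts(before, after, min_shift=0.1):
    """Nodes whose flow share changed by at least `min_shift` between snapshots."""
    c0, c1 = flow_centrality(before), flow_centrality(after)
    shifts = {n: c1.get(n, 0.0) - c0.get(n, 0.0) for n in set(c0) | set(c1)}
    return {n: d for n, d in shifts.items() if abs(d) >= min_shift}
```

A bridge or pool that suddenly absorbs a large share of flows is a candidate stress-propagation path, even before any price moves.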

A practical “ensemble” decision is:

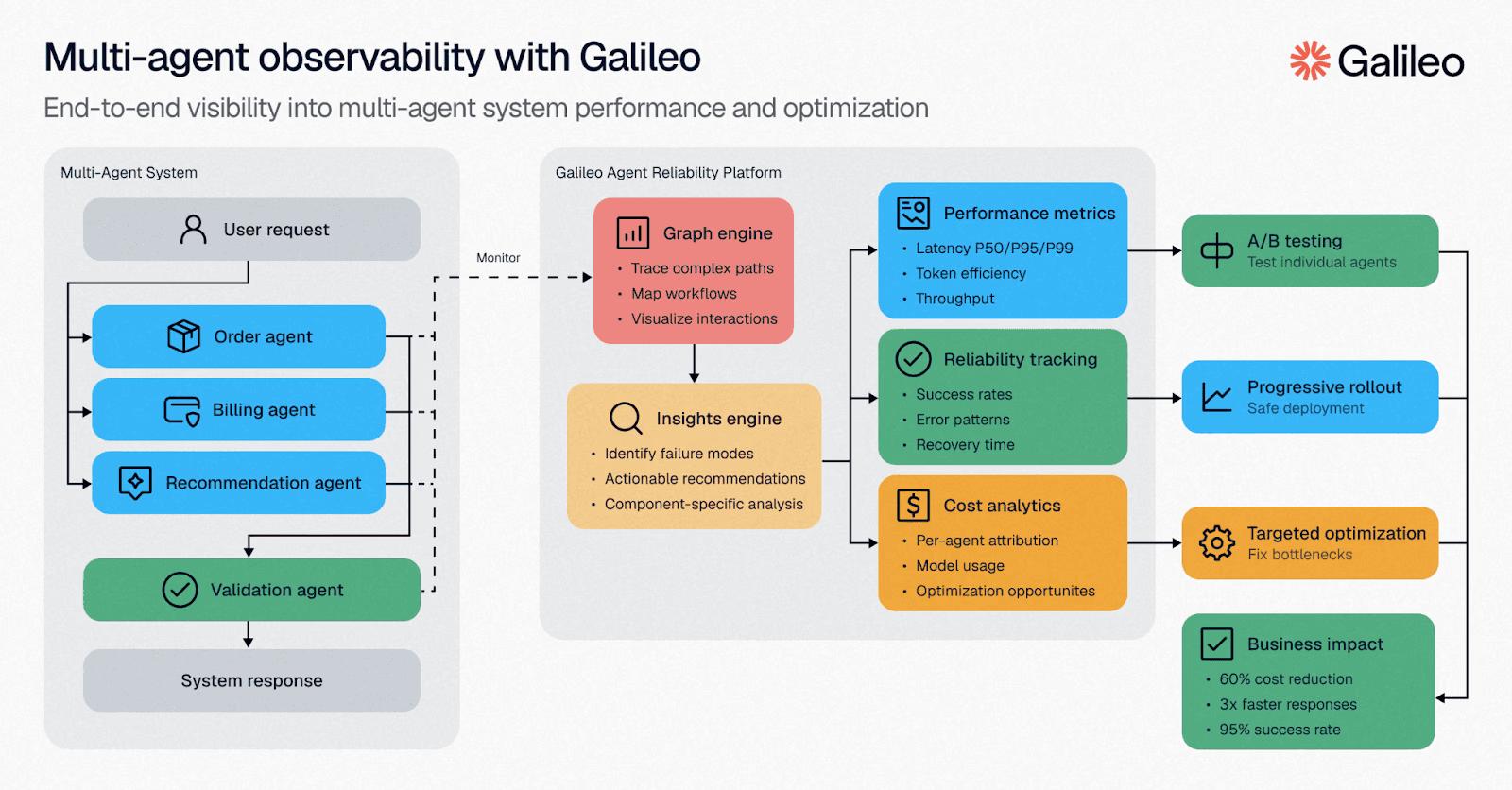
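As one possible version of such a rule, this sketch follows the approach described in the FAQ below: combine known-failure-mode heuristics with anomaly-model scores and alert only when at least two signals corroborate each other. The threshold values and severity labels are hypothetical. The sketch treats every named signal as independent, so grouping correlated detectors under one name is left to the caller.

```python
def ensemble_decision(heuristic_flags, anomaly_scores,
                      anomaly_threshold=0.8, min_corroboration=2):
    """Return (alert, severity, reasons).

    heuristic_flags: known-failure-mode checks, e.g. {"oracle_stale": True}
    anomaly_scores: detector scores in [0, 1], e.g. {"iforest": 0.91}
    """
    hard = [name for name, hit in heuristic_flags.items() if hit]
    soft = [name for name, score in anomaly_scores.items() if score >= anomaly_threshold]
    reasons = hard + soft
    if len(reasons) < min_corroboration:
        # A single signal is tracked but does not page anyone.
        severity = "watch" if reasons else "none"
        return False, severity, reasons
    # Agreement between a rule and a model is stronger evidence than two models.
    severity = "high" if hard and soft else "medium"
    return True, severity, reasons
```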

Multi-Agent Systems and LLMs: From Alerts to Explainable Analysis

LLMs are powerful in DeFi monitoring when they’re used correctly: as analysts that produce structured reasoning and retrieve evidence, not as ungrounded predictors.

A useful agent team looks like this:

This is where SimianX AI fits naturally: it’s designed for repeatable analysis workflows and multi-agent research loops, so teams can turn scattered on-chain evidence into explainable decisions. For related practical guides, see:

Guardrails that matter (non-negotiable)

- Require structured outputs (JSON-like schemas for decisions)
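A structured-output guardrail can be as small as a validator that every agent decision must pass before it reaches a human or a playbook. The field names, allowed severities, and the "must cite at least one transaction" rule below are a hypothetical schema, not a standard; the point is that ungrounded or malformed output is rejected rather than acted on.

```python
import json

# Hypothetical decision schema: field name -> expected Python type.
DECISION_SCHEMA = {
    "event_class": str,
    "severity": str,
    "action": str,
    "evidence_tx_hashes": list,
    "rationale": str,
}
ALLOWED_SEVERITIES = {"low", "medium", "high"}


def validate_decision(raw: str) -> dict:
    """Parse an agent's JSON output; raise ValueError if off-schema or ungrounded."""
    decision = json.loads(raw)
    missing = DECISION_SCHEMA.keys() - decision.keys()
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    for name, expected in DECISION_SCHEMA.items():
        if not isinstance(decision[name], expected):
            raise ValueError(f"{name} must be {expected.__name__}")
    if decision["severity"] not in ALLOWED_SEVERITIES:
        raise ValueError(f"unknown severity: {decision['severity']}")
    if not decision["evidence_tx_hashes"]:
        raise ValueError("decision cites no on-chain evidence")
    return decision
```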

Evaluation: How to Know Your Monitoring Works (Before You Need It)

Many monitoring systems fail because they’re judged on the wrong metric. “Accuracy” is not the target. Use operational metrics:

Key evaluation metrics

- Calibration: does a 0.7 risk score mean ~70% of similar cases had losses?
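The operational metrics (lead time, precision, alert volume, and calibration) can be computed from a labeled alert history. This is a sketch under simple assumptions: timestamps are in seconds, each alert is already labeled as matching an incident or not, and calibration is checked with equal-width score buckets.

```python
from collections import defaultdict


def precision(alert_labels):
    """Share of fired alerts that matched a real incident (list of bools)."""
    return sum(alert_labels) / len(alert_labels) if alert_labels else 0.0


def lead_time(alert_times, incident_time, lookback):
    """Seconds from the earliest alert inside the lookback window to the incident; None if missed."""
    prior = [t for t in alert_times if incident_time - lookback <= t <= incident_time]
    return incident_time - min(prior) if prior else None


def alerts_per_day(alert_times, days):
    """Alert volume, a simple proxy for analyst fatigue."""
    return len(alert_times) / days


def calibration_table(scores, outcomes, bins=5):
    """Per score bucket: (mean predicted score, observed loss rate, count).
    Well calibrated means the first two numbers are close."""
    buckets = defaultdict(list)
    for score, outcome in zip(scores, outcomes):
        buckets[min(int(score * bins), bins - 1)].append((score, outcome))
    return {
        b: (sum(s for s, _ in rows) / len(rows), sum(y for _, y in rows) / len(rows), len(rows))
        for b, rows in sorted(buckets.items())
    }
```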

Backtesting without fooling yourself

Evaluate separately across regime changes such as:

- New incentives

- New pools/markets

- New chains

- Contract upgrades
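One common way to avoid lookahead in backtests is walk-forward evaluation: always train on the past and test on the period immediately after it. Walk-forward is a standard technique named here as a suggestion, not something this framework prescribes. The `gap` parameter drops samples between train and test so rolling features built on overlapping windows cannot leak future data into training.

```python
def walk_forward_splits(n_samples, train_size, test_size, gap=0):
    """Yield (train_indices, test_indices) over time-ordered samples.
    Training data always precedes test data; `gap` samples are skipped in between."""
    start = 0
    while start + train_size + gap + test_size <= n_samples:
        train = range(start, start + train_size)
        test_start = start + train_size + gap
        yield train, range(test_start, test_start + test_size)
        start += test_size
```

Reporting metrics per split, and tagging splits that contain one of the regime changes listed above, shows whether performance holds up after new incentives, markets, chains, or upgrades.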

Stress tests you can run today

[Figure: Monitoring evaluation: lead time, precision, calibration, alert fatigue]

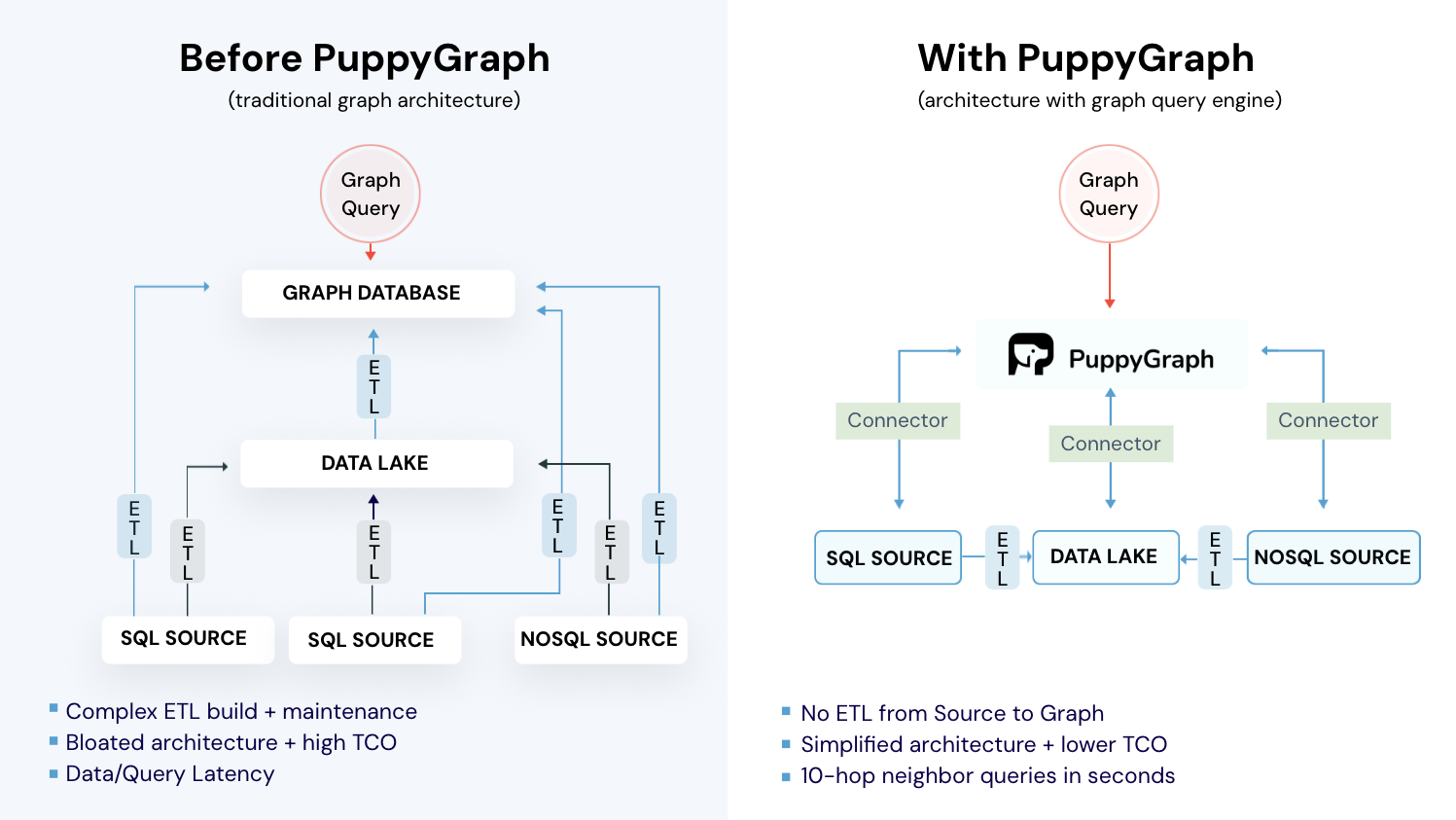

Monitoring Architecture: From Streaming Data to Actionable Alerts

A robust system looks like a production service, not a notebook.

| Component | What It Does | Practical Tip |
|---|---|---|
| Indexer / ETL | Pulls logs, traces, state | Use reorg-safe indexing and retries |
| Event bus | Streams events (swap, admin_change) | Keep schema versioned |
| Feature store | Computes rolling metrics | Store windowed features (5m, 1h, 7d) |
| Model service | Scores risk in real time | Version models + thresholds |
| Alert engine | Routes alerts to channels | Add dedupe + suppression rules |
| Dashboard | Visual context for triage | Show “why” (top signals) |
| Playbooks | Predefined actions | Tie actions to risk budget |
| Audit log | Evidence + decisions | Essential for improving system |
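The feature-store tip (windowed features at 5m, 1h, 7d) can be illustrated with an in-memory structure that keeps events for the longest window and sums each shorter window on demand. A production feature store would persist and pre-aggregate; this class and its method names are hypothetical.

```python
from collections import deque


class WindowedFeature:
    """Time-based rolling sums of one metric over several windows (seconds)."""

    def __init__(self, windows_seconds):
        self.windows = dict(windows_seconds)   # e.g. {"5m": 300, "1h": 3600, "7d": 604800}
        self.events = deque()                  # (timestamp, value), oldest first
        self.horizon = max(self.windows.values())

    def add(self, ts, value):
        self.events.append((ts, value))
        # Evict anything older than the longest window; shorter windows filter on read.
        while self.events and self.events[0][0] <= ts - self.horizon:
            self.events.popleft()

    def sums(self, now):
        return {
            name: sum(v for t, v in self.events if t > now - size)
            for name, size in self.windows.items()
        }
```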

A simple alert policy (example)

Use rate limits and cooldowns so one noisy pool doesn’t spam you.
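As an example policy, the router below suppresses repeats of the same alert type on the same pool within a cooldown and caps total alert volume per hour. Letting high-severity alerts bypass both limits is an assumption made for this sketch; adjust it to your escalation rules.

```python
class AlertRouter:
    """Per-(pool, alert_type) cooldown plus a global hourly cap."""

    def __init__(self, cooldown_seconds=1800, max_per_hour=20):
        self.cooldown = cooldown_seconds
        self.max_per_hour = max_per_hour
        self.last_sent = {}   # (pool, alert_type) -> time of last delivered alert
        self.sent_times = []  # delivery times within the last hour

    def should_send(self, pool, alert_type, severity, now):
        key = (pool, alert_type)
        self.sent_times = [t for t in self.sent_times if t > now - 3600]
        if severity != "high":  # assumption: high severity bypasses suppression
            if now - self.last_sent.get(key, float("-inf")) < self.cooldown:
                return False    # duplicate within cooldown
            if len(self.sent_times) >= self.max_per_hour:
                return False    # global rate limit reached
        self.last_sent[key] = now
        self.sent_times.append(now)
        return True
```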

Operational Playbooks: Mitigation Actions That Actually Work

Detection without action is just entertainment. Build mitigation playbooks around position sizing, exposure limits, and contagion containment.

Mitigation menu (choose based on your mandate)

A lightweight “risk budget” rule:

- cap size when slippage_$100k exceeds threshold

- reduce size when utilization rises and liquidation volume accelerates
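The two rules above can be expressed as a risk multiplier applied to a base size, which matches the FAQ advice to treat the monitoring score as a multiplier rather than a binary signal. The 1% slippage limit, the 80% utilization soft cap, and the linear shrink are hypothetical parameters.

```python
def position_size(base_size, slippage_100k, utilization, liq_volume_growth,
                  max_slippage=0.01, util_soft_cap=0.8):
    """Scale `base_size` by a risk multiplier in [0, 1]; thresholds are hypothetical."""
    multiplier = 1.0
    if slippage_100k > max_slippage:
        # Cap size in proportion to how far slippage exceeds the limit.
        multiplier = min(multiplier, max_slippage / slippage_100k)
    if utilization > util_soft_cap and liq_volume_growth > 0:
        # Shrink linearly from 1.0 at the soft cap to 0.0 at 100% utilization.
        multiplier *= max(0.0, (1.0 - utilization) / (1.0 - util_soft_cap))
    return base_size * multiplier
```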

Analyst checklist for every high-severity alert

Practical Example: Monitoring a Lending Protocol + DEX Pool

Let’s walk through a realistic scenario.

Scenario A: Lending protocol liquidation cascade risk

Signals that typically precede cascades:

utilization climbs steadily (borrow demand outpaces supply)

Mitigation workflow:

1. Flag rising utilization + HF clustering as “pre-stress”

2. If oracle deviation crosses threshold, raise severity

3. Reduce exposure or hedge

4. If liquidations accelerate, exit or rotate collateral to reduce correlation
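The Scenario A workflow maps naturally onto escalating stages. In this sketch every threshold is hypothetical, "HF clustering" is approximated as the fraction of debt sitting in positions with HF below 1.1, and liquidation acceleration is the last hour's liquidation volume divided by its trailing hourly average.

```python
# (stage, action) per escalation level, following steps 1-4 above.
STAGES = [
    ("normal", "none"),
    ("pre_stress", "watch"),
    ("elevated", "reduce_exposure_or_hedge"),
    ("cascade", "exit_or_rotate_collateral"),
]


def lending_stress_stage(util, util_prev, share_hf_below_1_1, oracle_dev,
                         liq_volume_ratio, util_rise=0.05, hf_cluster=0.15,
                         oracle_limit=0.02, liq_accel=2.0):
    level = 0
    if (util - util_prev) >= util_rise and share_hf_below_1_1 >= hf_cluster:
        level = 1  # step 1: rising utilization + HF clustering
    if level and abs(oracle_dev) >= oracle_limit:
        level = 2  # step 2: oracle deviation raises severity (step 3 action)
    if liq_volume_ratio >= liq_accel:
        level = 3  # step 4: liquidations accelerating
    return STAGES[level]
```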

Scenario B: DEX pool liquidity rug / sudden depth collapse

Early-warning signals:

Mitigation workflow:

1. Trigger alert on LP outflow anomaly + slippage jump

2. Confirm whether withdrawals are organic (market stress) or targeted (rug behavior)

3. Reduce position size, avoid adding liquidity, widen risk buffers

4. If admin activity coincides, escalate severity immediately
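A triage function for Scenario B might look like the sketch below. Separating "targeted" from "organic" withdrawals by how much of the outflow comes from the top few LP wallets is a heuristic assumption, as are all thresholds. Coinciding admin activity always escalates to high, per step 4.

```python
def pool_rug_check(lp_outflow_z, slippage_now, slippage_baseline,
                   top_lp_share_of_outflow, admin_activity,
                   z_limit=4.0, slippage_jump=2.0, targeted_share=0.5):
    """Scenario B triage; inputs and thresholds are hypothetical.
    top_lp_share_of_outflow: fraction of withdrawn liquidity from the top few LP wallets."""
    # Step 1: require both an LP outflow anomaly and a slippage jump.
    triggered = (lp_outflow_z >= z_limit
                 and slippage_now >= slippage_jump * slippage_baseline)
    if not triggered:
        return {"alert": False}
    # Step 2: a few wallets pulling most liquidity looks targeted rather than organic.
    targeted = top_lp_share_of_outflow >= targeted_share
    # Step 4: coinciding admin activity escalates immediately.
    severity = "high" if admin_activity else ("medium" if targeted else "low")
    return {
        "alert": True,
        "pattern": "targeted" if targeted else "organic",
        "severity": severity,
        # Step 3: standard defensive actions.
        "actions": ["reduce_position", "avoid_adding_liquidity", "widen_risk_buffers"],
    }
```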

Build vs Buy: Tooling Options (and Where SimianX AI Fits)

You can build this stack yourself—many teams do. The hard parts are:

SimianX AI can accelerate the “analysis layer” by helping you structure research workflows, automate evidence gathering, and standardize how monitoring insights become decisions. If your goal is to move from ad-hoc dashboards to a repeatable risk process, start with SimianX AI and adapt the workflows to your mandate (LP, lending, treasury, or trading).

FAQ About AI monitoring for DeFi risk mitigation

How to monitor DeFi protocols with AI without getting false positives?

Use an ensemble approach: combine simple heuristics (oracle staleness, admin changes) with anomaly models, then require corroboration from at least two independent signals. Add alert deduplication, cooldowns, and severity tiers so analysts only see what matters.

What is DeFi risk scoring, and can it be trusted?

DeFi risk scoring is a structured way to summarize multiple risk signals into a comparable scale (e.g., 0–100 or low/medium/high). It’s trustworthy only when it’s explainable (which signals drove the score) and calibrated against historical outcomes like drawdowns, liquidations, or exploit events.

Best way to track stablecoin depeg risk using on-chain data?

Monitor liquidity depth on major pools, peg deviation vs reference markets, and large holder flows to bridges/exchanges. Depeg risk often rises when liquidity thins and large holders reposition—especially during broader volatility spikes.

Can LLMs predict DeFi exploits before they happen?

LLMs shouldn’t be treated as predictors. They’re best used to summarize evidence, interpret transaction intent, and standardize incident reports—while deterministic rules and quantitative models handle detection and action thresholds.

How do I size positions using AI-driven DeFi monitoring?

Tie sizing to liquidity and stress indicators: reduce size as slippage increases, utilization rises, and correlation spikes. Treat the monitoring score as a “risk multiplier” on your base size rather than a binary trade signal.

Conclusion

AI-driven monitoring turns DeFi risk management from reactive firefighting into an operational system: real-time signals, interpretable alerts, and disciplined mitigation playbooks. The strongest results come from layering heuristics with anomaly detection, adding graph-based contagion views, and keeping humans in the loop with clear audit trails. If you want a repeatable workflow to monitor protocols, diagnose alerts with evidence, and act consistently, explore SimianX AI and build your monitoring process around a framework you can measure, stress-test, and improve.